The public cloud (e.g., AWS or Azure) is often portrayed as a panacea for all that ails on-premises solutions. That “cure-all” impression brings with it a few misconceptions about the benefits of using the public cloud.

One common misconception pertains to autoscaling, the ability to automatically scale the number of compute resources allocated to an application up or down based on its needs at any given time. While Azure makes autoscaling much easier in certain configurations, other parts of Azure don’t support it as easily.

For example, if you look at the different App Service plans, you will see that the lower three tiers (Free, Shared and Basic) do not include support for autoscaling, as the top four tiers (Standard and above) do. There are ways to design and architect your solution to make use of autoscaling, but the point is that running your application in Azure does not necessarily mean you automatically get autoscaling.

Scale out or scale in

In Azure, you can scale up vertically by changing the size of a VM, but the more popular way to scale in Azure is to scale out horizontally by adding more instances. Azure provides horizontal autoscaling via numerous technologies. For example, Azure Cloud Services, the legacy technology, provides autoscaling at the role level. Azure Service Fabric and virtual machines implement autoscaling via virtual machine scale sets. And, as mentioned, Azure App Service has built-in autoscaling for certain tiers.

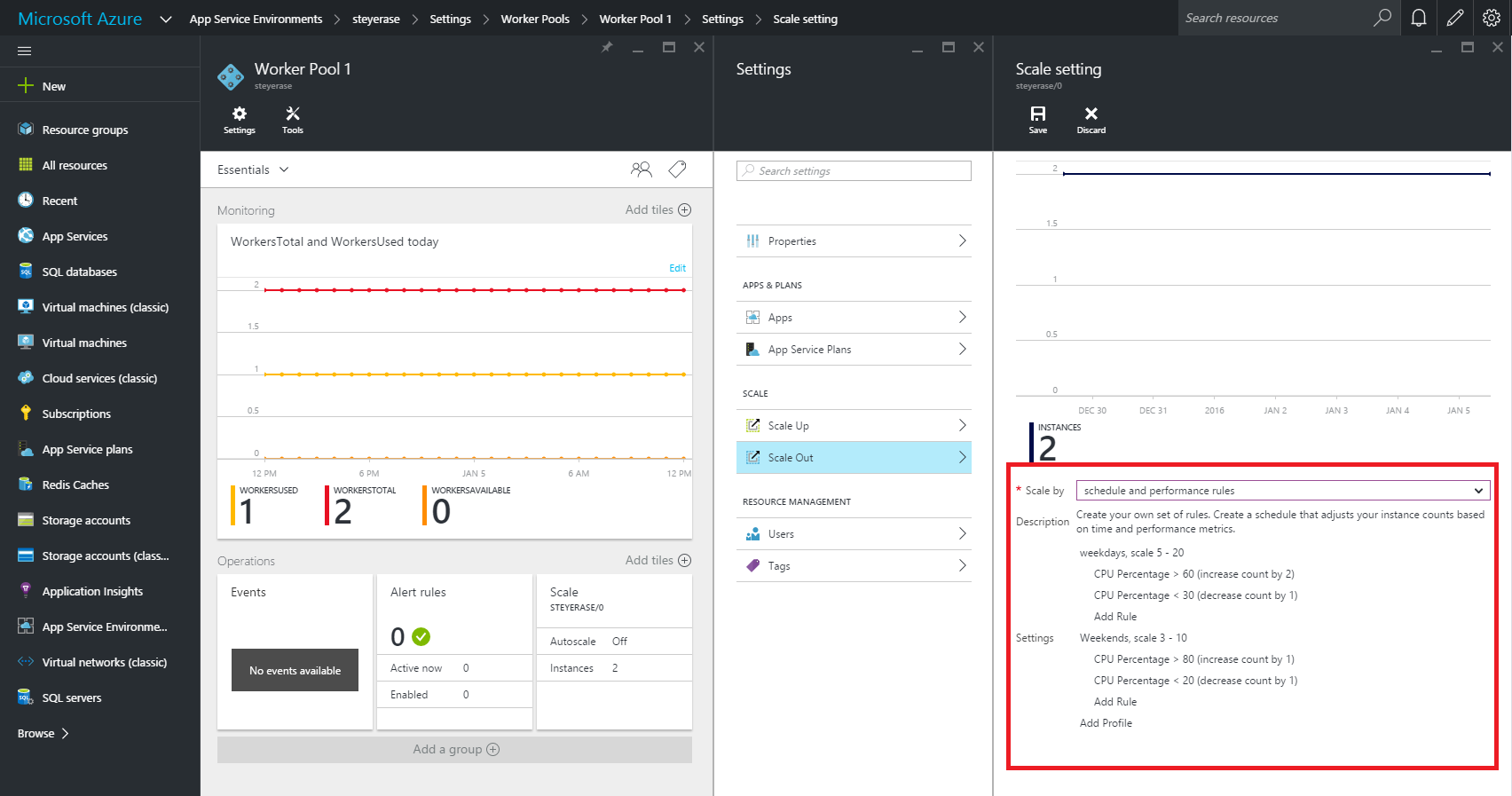

When you know ahead of time that a certain date or time period (such as Black Friday) will require scaling out horizontally to meet anticipated peak demand, you can create a static, scheduled scaling rule. This is not “auto” scaling in the true sense. Dynamic, reactive autoscaling is instead typically based on runtime metrics that reflect a sudden increase in demand. The traditional way to autoscale dynamically is to monitor metrics and adjust the instance count when a metric reaches a certain value.
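The metric-driven approach can be sketched in a few lines. This is a minimal illustration, not Azure's rule schema: the `ScaleRule` fields, the thresholds, and the choice of average CPU as the metric are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ScaleRule:
    """Hypothetical rule; field names are illustrative, not Azure's schema."""
    scale_out_above: float  # average CPU % above which one instance is added
    scale_in_below: float   # average CPU % below which one instance is removed
    min_instances: int
    max_instances: int


def decide_instance_count(rule: ScaleRule, current: int, cpu_samples: list[float]) -> int:
    """Return the instance count to target after inspecting recent CPU samples."""
    if not cpu_samples:
        return current  # no data: leave the count unchanged
    average = sum(cpu_samples) / len(cpu_samples)
    if average > rule.scale_out_above:
        target = current + 1
    elif average < rule.scale_in_below:
        target = current - 1
    else:
        target = current
    # Never go outside the configured bounds.
    return max(rule.min_instances, min(rule.max_instances, target))


rule = ScaleRule(scale_out_above=70.0, scale_in_below=30.0, min_instances=2, max_instances=10)
print(decide_instance_count(rule, current=3, cpu_samples=[82.0, 91.0, 77.0]))  # 4
print(decide_instance_count(rule, current=3, cpu_samples=[12.0, 20.0, 18.0]))  # 2
```

Keeping a gap between the scale-out and scale-in thresholds helps avoid adding and removing instances in rapid succession when the metric hovers near a single value.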

Tools for autoscaling

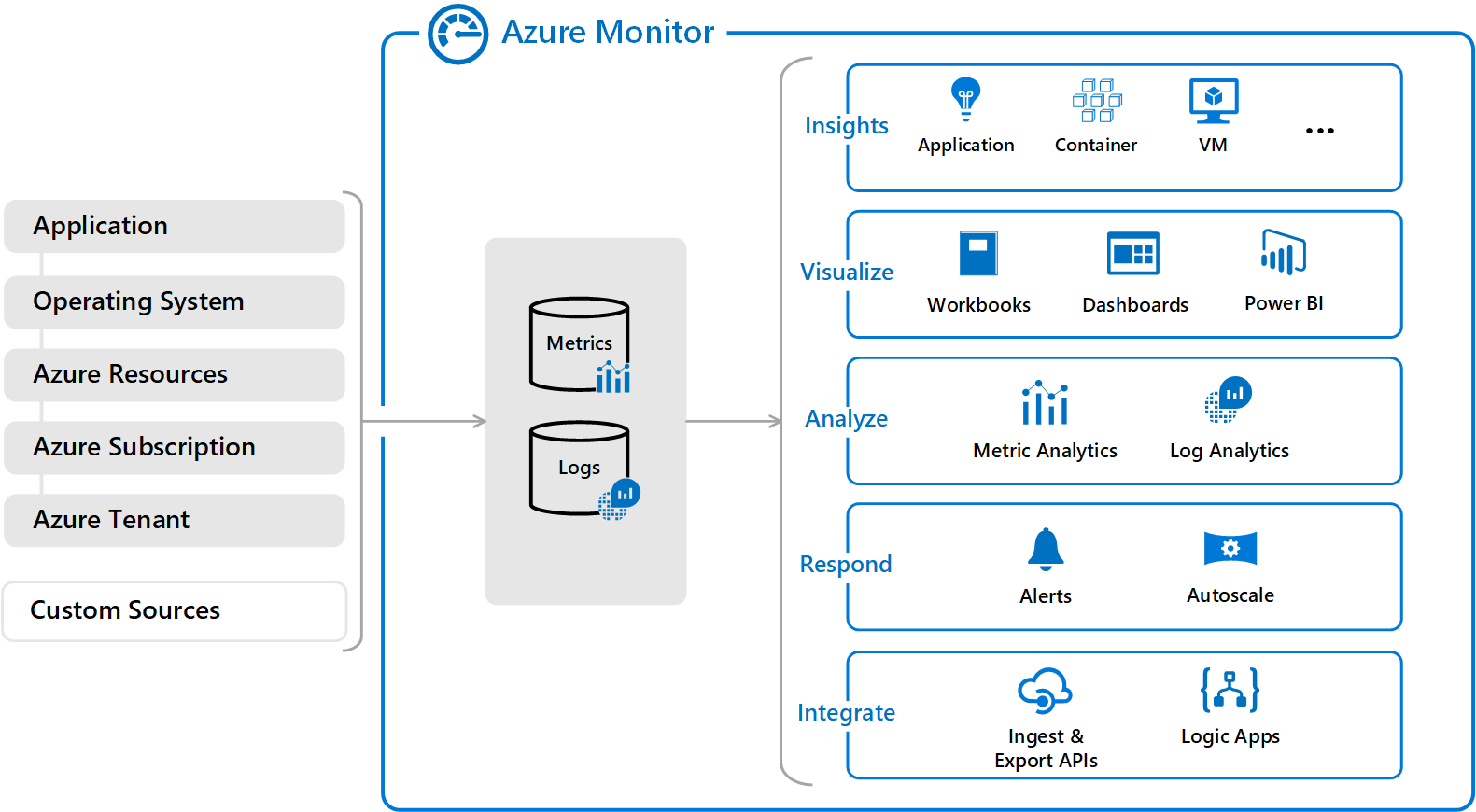

Azure Monitor provides that metric monitoring with auto-scale capabilities. Azure Cloud Services, VMs, Service Fabric, and VM scale sets can all leverage Azure Monitor to trigger and manage auto-scaling needs via rules. Typically, these scaling rules are based on related memory, disk and CPU-based metrics.

Applications that require custom autoscaling can use metrics from Application Insights. When you create an Azure application that you want to scale, make sure to enable Application Insights for it. You can then create a custom metric in code and set up an autoscale rule on that metric by choosing Application Insights as the metric source in the portal.

Design considerations for autoscaling

When writing an application that you know will be auto-scaled at some point, there are a few base implementation concepts you might want to consider:

- Use durable storage to store your shared data across instances. That way any instance can access the storage location and you don’t have instance affinity to a storage entity.

- Seek to use only stateless services. That way you don’t have to make any assumptions on which service instance will access data or handle a message.

- Realize that different parts of the system have different scaling requirements (which is one of the main motivators behind microservices). You should separate them into smaller discrete and independent units so they can be scaled independently.

- Avoid any operations or tasks that are long-running. This can be facilitated by decomposing a long-running task into a group of smaller units that can be scaled as needed. You can use what’s called a Pipes and Filters pattern to convert a complex process into units that can be scaled independently.
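As a rough illustration of the Pipes and Filters idea from the last point, the sketch below chains small, independent filters over a stream of items. It runs in a single process for simplicity; in a scaled deployment each filter would more likely be its own service with a queue acting as the pipe. The filter names and the sample data are hypothetical.

```python
from typing import Callable, Iterable, Iterator

# A filter consumes a stream of items and yields a transformed stream.
Filter = Callable[[Iterator[str]], Iterator[str]]


def strip_whitespace(lines: Iterator[str]) -> Iterator[str]:
    for line in lines:
        yield line.strip()


def drop_empty(lines: Iterator[str]) -> Iterator[str]:
    for line in lines:
        if line:
            yield line


def to_upper(lines: Iterator[str]) -> Iterator[str]:
    for line in lines:
        yield line.upper()


def run_pipeline(source: Iterable[str], filters: list[Filter]) -> list[str]:
    """Connect the filters in order and drain the final stream into a list."""
    stream: Iterator[str] = iter(source)
    for apply_filter in filters:
        stream = apply_filter(stream)
    return list(stream)


result = run_pipeline(["  order-1 ", "", " order-2"], [strip_whitespace, drop_empty, to_upper])
print(result)  # ['ORDER-1', 'ORDER-2']
```

Because each filter only depends on its input stream, a slow step can be given more instances without touching the others.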

Scaling/throttling considerations

Autoscaling can be used to keep the provisioned resources matched to user needs at any given time. But while autoscaling can trigger the provisioning of additional resources as needs dictate, this provisioning isn’t immediate. If demand increases quickly and unexpectedly, there can be a window of resource deficit because new resources cannot be provisioned fast enough.

An alternative strategy to auto-scaling is to allow applications to use resources only up to a limit and then “throttle” them when this limit is reached. Throttling may need to occur when scaling up or down since that’s the period when resources are being allocated (scale up) and released (scale down).

The system should monitor how it’s using resources so that, when usage exceeds the threshold, it can throttle requests from one or more users. This will enable the system to continue functioning and meet any service level agreements (SLAs). You need to consider throttling and scaling together when figuring out your auto-scaling architecture.
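One simple way to throttle per user is a fixed-window counter, sketched below. This is only one of several possible techniques (token buckets and sliding windows are common alternatives), and the class name, limit, and window length are assumptions for the example rather than anything Azure prescribes.

```python
import time
from typing import Callable


class FixedWindowThrottle:
    """Allow each user at most `limit` requests per `window_seconds` (illustrative)."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested without sleeping
        self._window_start: dict[str, float] = {}
        self._count: dict[str, int] = {}

    def allow(self, user: str) -> bool:
        now = self.clock()
        start = self._window_start.get(user)
        # Start a new window on the first request or once the old window has expired.
        if start is None or now - start >= self.window_seconds:
            self._window_start[user] = now
            self._count[user] = 0
        if self._count[user] >= self.limit:
            return False  # over the limit: the caller should reject or delay the request
        self._count[user] += 1
        return True


throttle = FixedWindowThrottle(limit=2, window_seconds=60.0)
print([throttle.allow("alice") for _ in range(3)])  # [True, True, False]
```

A rejected request would typically be answered with a "try again later" response, which buys time for newly provisioned instances to come online.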

Singleton instances

Of course, autoscaling won’t do you much good if the problem you are trying to address stems from the fact that your application is based on a single cloud instance. Because there is only one shared instance, a traditional singleton object works against the multi-instance, high-scalability approach of the cloud. Every client uses that same shared instance, so a bottleneck will typically occur. Scalability suffers in this case, so try to avoid a traditional singleton instance if possible.

But if you do need to have a singleton object, instead create a stateful object using Service Fabric with its state shared across all the different instances. A singleton object is defined by its single state. So, we can have many instances of the object sharing state between them. Service Fabric maintains the state automatically, so we don’t have to worry about it.

The Service Fabric object type to create is either a stateless web service or a worker service. This works like a worker role in an Azure Cloud Service.
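The idea of many instances sharing a single state can be sketched without any Service Fabric code. In the example below, `SharedStateStore` is a hypothetical in-memory stand-in for state that the platform would manage; it is not a Service Fabric API, and the class and key names are made up for illustration.

```python
import threading


class SharedStateStore:
    """In-memory stand-in for platform-managed state (hypothetical, not a Service Fabric API)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._data: dict[str, int] = {}

    def increment(self, key: str) -> int:
        with self._lock:
            self._data[key] = self._data.get(key, 0) + 1
            return self._data[key]

    def get(self, key: str) -> int:
        with self._lock:
            return self._data.get(key, 0)


class VisitCounter:
    """Any number of instances may exist; none of them holds state of its own."""

    def __init__(self, store: SharedStateStore) -> None:
        self._store = store

    def record_visit(self) -> int:
        return self._store.increment("visits")

    def total(self) -> int:
        return self._store.get("visits")


store = SharedStateStore()
first, second = VisitCounter(store), VisitCounter(store)
first.record_visit()
second.record_visit()
print(first.total(), second.total())  # 2 2
```

Both counters report the same total because the "singleton" is the state, not the object that serves requests.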