Many companies are eager to use artificial intelligence (AI) in production, but struggle to achieve real value from the technology.

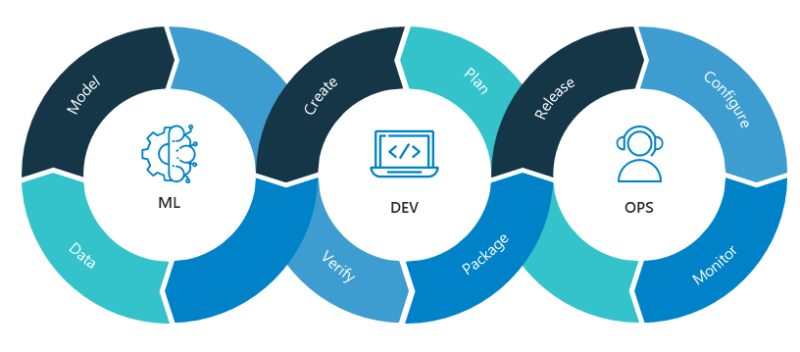

What’s the key to success? Creating new services that learn from data and can scale across the enterprise involves three domains: software development, machine learning (ML) and, of course, data. These three domains must be balanced and integrated into a seamless development process.

Most companies have focused on building machine learning muscle by hiring data scientists to create and apply algorithms capable of extracting insights from data. This makes sense, but it’s a rather limited approach. Think of it this way: They’ve built up spectacular biceps but haven’t paid as much attention to the connective tissue that supports the muscle.

Why the disconnect?

Focusing mostly on ML algorithms won’t drive strong AI solutions. It might be good for getting one-off insights, but it isn’t enough to create a foundation for AI apps that consistently generate ongoing insights leading to new ideas for products and services.

AI services have to be integrated into a production environment without risking deterioration in performance. Unfortunately, performance can decline without proper data management: ML models degrade quickly unless they’re regularly retrained on new data, either on a schedule (time-based) or in response to an event (event-triggered).
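To make the two retraining triggers concrete, here is a minimal Python sketch. The function name `retrain_reason`, the seven-day maximum model age and the 1,000-row threshold are all illustrative assumptions, not values taken from any particular MLOps product.

```python
from datetime import datetime, timedelta
from typing import Optional


def retrain_reason(
    last_trained: datetime,
    now: datetime,
    new_rows: int,
    max_age: timedelta = timedelta(days=7),  # assumed schedule, for illustration
    min_new_rows: int = 1000,                # assumed event threshold, for illustration
) -> Optional[str]:
    """Return why a model should be retrained, or None if it can stay as is."""
    # Event-triggered: enough new data has arrived since the last training run.
    if new_rows >= min_new_rows:
        return "event-triggered"
    # Time-based: the model is older than the allowed maximum age.
    if now - last_trained >= max_age:
        return "time-based"
    return None
```

In practice a scheduler or a data-arrival hook would call a check like this and start the training pipeline whenever it returns a reason.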

Professionalizing the AI development process

The best approach to getting real and continuous value from AI applications is to professionalize AI development. This approach conforms to machine learning operations (MLOps), a method that integrates the three domains behind AI apps in such a way that solutions can be quickly, easily and intelligently moved from prototype to production.

AI professionalization elevates the role of data scientists and strengthens their development methods. Like all scientists, these professionals bring with them a keen appreciation for experimentation. But often, their dependence on static data for creating machine learning algorithms, which they develop on local laptops using preferred tools and libraries, keeps production AI solutions from continuously producing value. Data communication and library dependency problems take their toll.

Data scientists can continue to use the tools and methods they prefer, with their output accommodated by loosely coupled DevOps and DataOps interfaces. Their ML algorithm development work becomes the centerpiece of a highly professional factory system, so to speak.

Smooth pilot-to-production workflow

Pilot AI solutions become stable production apps in short order. We use DevOps technology and techniques such as continuous integration and continuous delivery (CI/CD) and have standard templates for automatically deploying model pipelines into production. With model pipelines, training and evaluation can run automatically whenever needed (when new data arrives, for instance) without human involvement.
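The idea of a model pipeline that retrains and evaluates itself when new data arrives can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the `ModelPipeline` class, its `on_new_data` hook, the one-feature linear model and the promotion rule (promote only if the error on a fixed holdout set does not get worse) are all hypothetical and stand in for whatever a real deployment template would provide.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional, Sequence, Tuple

Row = Tuple[float, float]  # (feature, target)


@dataclass
class Model:
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def train(rows: Sequence[Row]) -> Model:
    """Fit a one-feature least-squares line to the rows."""
    x_bar = mean(x for x, _ in rows)
    y_bar = mean(y for _, y in rows)
    var = sum((x - x_bar) ** 2 for x, _ in rows)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in rows)
    slope = cov / var if var else 0.0
    return Model(slope, y_bar - slope * x_bar)


def evaluate(model: Model, rows: Sequence[Row]) -> float:
    """Mean absolute error of the model on the given rows."""
    return mean(abs(model.predict(x) - y) for x, y in rows)


class ModelPipeline:
    """Hypothetical pipeline: retrain on arrival of data, promote only if not worse."""

    def __init__(self, holdout: Sequence[Row]) -> None:
        self.holdout: List[Row] = list(holdout)
        self.training_rows: List[Row] = []
        self.production: Optional[Model] = None
        self.production_error: Optional[float] = None

    def on_new_data(self, rows: Sequence[Row]) -> bool:
        """Train and evaluate a candidate; return True if it was promoted."""
        self.training_rows.extend(rows)
        candidate = train(self.training_rows)
        error = evaluate(candidate, self.holdout)
        if self.production_error is None or error <= self.production_error:
            self.production, self.production_error = candidate, error
            return True
        return False  # keep the current production model
```

The evaluation gate is the design choice worth noting: automatic retraining only helps if a worse candidate is never promoted without anyone looking at it.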

Our versioning and tracking ensure that everything can be reused, reproduced and compared if necessary. Our advanced monitoring provides end-to-end transparency into production AI use cases, including data and model pipelines, data quality, model quality and model usage.
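As a rough illustration of what versioning and tracking involve, the Python sketch below records a content fingerprint of the data and parameters behind each training run, so runs can later be reproduced and compared. The `fingerprint` helper and `RunTracker` class are hypothetical names invented for this example; production setups typically rely on dedicated experiment-tracking and data-versioning tools.

```python
import hashlib
import json
from typing import Any, Dict, List, Mapping


def fingerprint(obj: Any) -> str:
    """Short, deterministic hash of any JSON-serializable object."""
    payload = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


class RunTracker:
    """Hypothetical in-memory log of training runs."""

    def __init__(self) -> None:
        self.runs: List[Dict[str, Any]] = []

    def log_run(
        self,
        data_rows: Any,
        params: Mapping[str, Any],
        metrics: Mapping[str, float],
    ) -> Dict[str, Any]:
        record = {
            "run_id": len(self.runs) + 1,
            "data_version": fingerprint(data_rows),         # which data was used
            "params_version": fingerprint(dict(params)),    # which settings were used
            "params": dict(params),
            "metrics": dict(metrics),
        }
        self.runs.append(record)
        return record

    def best(self, metric: str, lower_is_better: bool = True) -> Dict[str, Any]:
        """Return the logged run with the best value for the given metric."""
        pick = min if lower_is_better else max
        return pick(self.runs, key=lambda run: run["metrics"][metric])
```

Two runs that share the same `data_version` and `params_version` used identical inputs, which is the minimum needed to reproduce a result or to explain why two models differ.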

Using our innovative MLOps approach, we were able to bring the pilot-to-production timeline for one U.S. company’s AI app down from six months to less than one week. For a UK company, the window for delivering a stable AI production app shrank from five weeks to one day.

Transparency of AI solutions, along with confidence in their agility and stability, is critical. After all, the value lies in the ability to use AI to discover new business models and market opportunities, deliver industry-disrupting products and creatively respond to customer needs.