If there is one thing that 2020 has taught us, it is that things can change on a dime. Over the last year, we have learned to cope better with dramatic changes in how we run our businesses: setting up remote working, creating more online services to satisfy customers’ new demands and migrating more applications to the cloud. But there’s more to do.

In these times, businesses are demanding even more agility and flexibility from their internal IT departments, which have already been under pressure to modernize data center operations as the popularity of SaaS and the public cloud grows.

The trend toward data center virtualization is sure to intensify. In the current environment, we may need to reconsider how we think about and transition to the software-defined data center (SDDC). It’s increasingly important to have solid, standardized protocols and processes for SDDC to improve your company’s dexterity and scalability and to lower its costs. SDDC’s value also lies in its ability to improve resiliency, helping IT provision, operate and manage data centers more seamlessly through APIs, even in the midst of a crisis.

A well-groomed SDDC architecture primes an organization for its transformation journey to hybrid cloud. We won’t say that the journey is inevitable for everyone, but it’s far more likely than not.

Evidence of a growing movement to hybrid cloud comes from a recent Everest Group survey of 200 enterprises, which found that three out of four respondents said they have a hybrid-first or private-first cloud strategy, and 58% of enterprise workloads are on or expected to be on hybrid or private clouds. As much as companies may like the idea of moving everything to public cloud for its flexibility and cost benefits, it just isn’t practical for many reasons, including compliance and security concerns.

As a virtualized pool of resources, SDDC is the optimal foundation for hybrid cloud environments. It provides a common platform for both private and public clouds, automating resource assignments and tasks, simplifying and speeding application deployment, and serving as the backbone of a high-availability infrastructure. Operational and IT labor costs shrink.

Make SDDC work for you

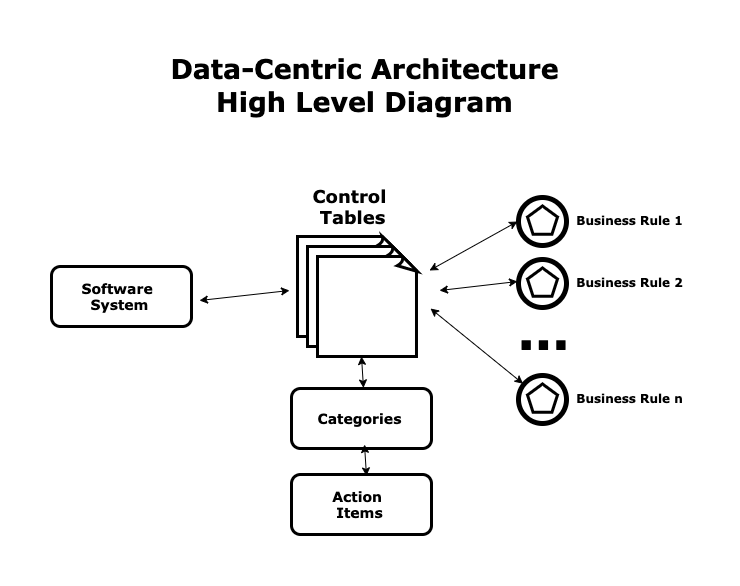

If you’ve already invested in SDDC software but aren’t seeing the returns you’d hoped for, you’re in the same boat as many other businesses. Companies often start on the road to virtualizing and automating their compute, storage and networking infrastructure, but they haven’t changed how they think about operating the environment, which means reorganizing management functions around a code-based mindset.

It’s time to think differently.

The transition is not unlike what took place as DevOps practices overtook waterfall development, creating an environment where DevOps engineers work with developers and IT operations staff to build products and release regular updates for them.

To get the most value from SDDC, you must merge the traditional functions of architecture, engineering, integration and operations teams into a DevOps-style model that tightens the feedback loop and drives improvements in the architecture and design.

Constant feedback loops need to be institutionalized. Adopting the SDDC infrastructure-as-code approach creates the continuous delivery pipelines for business applications that are critical to business competitiveness. Remember: If you can’t roll out solutions to answer customers’ needs at the speed of thought, you’re at risk of losing business to a rival that can.
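To make the infrastructure-as-code idea concrete, here is a minimal sketch in Python. It assumes a purely hypothetical `VmSpec` schema and `plan` function (neither comes from any SDDC product) and shows only the core habit: describe the desired environment as data, compare it with what is actually running, and derive the changes from the difference.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSpec:
    """Desired shape of one virtual machine (hypothetical schema)."""
    cpus: int
    memory_gb: int
    network: str


def plan(desired: dict[str, VmSpec], actual: dict[str, VmSpec]) -> list[str]:
    """Compare desired state with actual state and list the actions needed."""
    actions = []
    # Anything declared but missing gets created; anything that drifted gets updated.
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(f"create {name}")
        elif actual[name] != spec:
            actions.append(f"update {name}")
    # Anything running that is no longer declared gets removed.
    for name in sorted(actual):
        if name not in desired:
            actions.append(f"delete {name}")
    return actions


# The declared environment, which would normally live in version control.
desired = {
    "web-1": VmSpec(cpus=2, memory_gb=4, network="frontend"),
    "web-2": VmSpec(cpus=2, memory_gb=4, network="frontend"),
    "db-1": VmSpec(cpus=8, memory_gb=32, network="backend"),
}

# What is running today (illustrative values only).
actual = {
    "web-1": VmSpec(cpus=2, memory_gb=4, network="frontend"),
    "db-1": VmSpec(cpus=4, memory_gb=16, network="backend"),
    "old-batch": VmSpec(cpus=1, memory_gb=2, network="backend"),
}

for action in plan(desired, actual):
    print(action)
```

Because the desired state is just a file, a change to the environment can be reviewed, tested and rolled out through the same pipeline as application code. A real deployment would hand this job to an established provisioning tool rather than a hand-written script.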

Management silos need to break down and new tooling and processes must be standardized. There is no longer a need to invest in developing vendor-specific hardware operations skills. A culture shift is required for siloed network, storage and compute teams. There’s no room for managing discrete environments if your business is to achieve a complete, automated and cloud-ready SDDC. Integrated teams must be aligned to a single goal.

SDDC environments deliver other important benefits. Organizations with different IT environments in different regions suffer from a lack of consistency in hardware-oriented data center infrastructures. Replacing the confines and confusion of this setup with a hardware-agnostic approach using intelligent software streamlines the process of moving workloads across resources for better disaster recovery, business continuity and scalability.

SDDC matures for the digital era

Many considerations must go into the build-out of an SDDC. Businesses will find that the solutions and services available with Anteelo’s Enterprise Technology Stack set the groundwork for developing and refining SDDC capabilities.

It starts with our understanding and management of even the most complex customer environments, where we can apply our knowledge to help businesses understand the transformation journey. We can manage and maintain your SDDC, assisting with everything from advising you on which applications belong in the cloud to maintaining tight security controls.

Success in our digital era demands less complicated and more easily managed data centers. SDDC is the mature and sophisticated answer to that need.