Many system integrators and cloud hosting providers claim to have their own unique process for cloud migrations. The reality is that everyone’s migration process is just about the same, but that doesn’t mean they’re all created equal. What really matters is the detail of how the work gets done. Application transformation and migration require skilled people, an understanding of your business and applications, deep experience in performing migrations, automated processes and specialized tools. Each of these capabilities can directly affect costs, timelines and ultimately the success of the migration. Here are four ways to help ensure a fast and uneventful move:

1: Create a solid readiness roadmap.

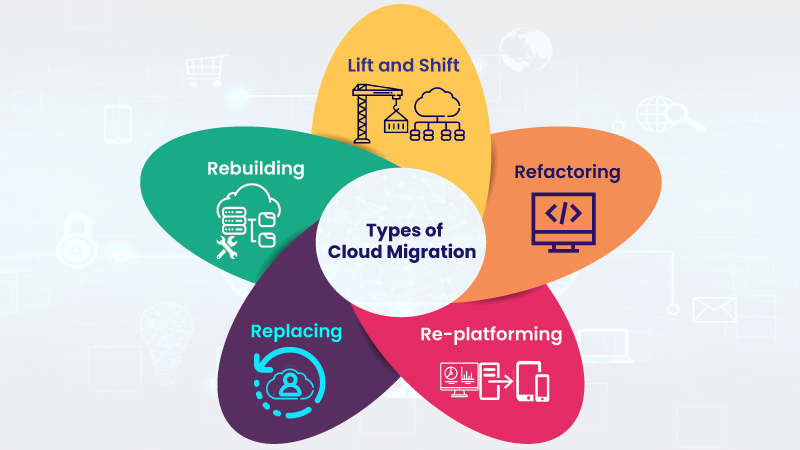

The cloud readiness stage is often referred to as the business case, and it may involve a total cost of ownership assessment, rapid discovery, advisory services and more. However, most of these approaches fall short of the up-front analysis really needed to decide which applications to move, refactor or replace. When you embark on a migration to cloud, job one is to ensure you are ready to go. Diving right into a migration without a roadmap is typically a recipe for failure. If you don’t know what the business case for migrating is, then stop right there. You should come out of the readiness phase with a high-level plan, an initial set of migration sprints and a detailed roadmap for addressing the subsequent sprints.

2: Design a detailed migration plan.

This phase, also referred to as deep discovery, helps keep migration activities on schedule. All too often, a lack of proper analysis and design leads to surprises in the migration phase that can have a domino effect across multiple applications. This phase should include a detailed analysis of the scope of applications and workloads to be migrated, sprint by sprint. This agile approach can shorten migration time and speed up time to value in the cloud. The goal is to uncover unknowns early and incorporate the resulting changes into the roadmap so that the anticipated business objectives are still achieved.
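One way to picture the sprint-by-sprint analysis is as a dependency-ordering exercise. The minimal Python sketch below groups applications into migration sprints so that nothing is scheduled before the applications it depends on. It is an illustration, not a prescribed method: the `plan_waves` function and the application names are hypothetical, and the rule that dependencies move first is an assumption that will not suit every migration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def plan_waves(dependencies):
    """Group applications into migration waves (one wave per sprint).

    `dependencies` maps each application to the set of applications it
    depends on. Assumption: an application is scheduled only after
    everything it depends on has been placed in an earlier wave.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        # Everything whose dependencies are already scheduled is ready now.
        ready = sorted(sorter.get_ready())  # sorted for a stable order
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical application inventory, for illustration only.
inventory = {
    "billing-api": {"customer-db"},
    "web-portal": {"billing-api", "auth-service"},
    "auth-service": {"customer-db"},
    "customer-db": set(),
}

waves = plan_waves(inventory)
for number, wave in enumerate(waves, start=1):
    print(f"Sprint {number}: {', '.join(wave)}")
```

In practice the inventory would come from discovery data rather than a hand-written dictionary, and the waves would be recomputed as that data is refreshed. A circular dependency surfaces as an error at planning time rather than as a surprise during the migration itself.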

3: Set a strict cadence — and stick to it.

Often, most of the planning effort focuses on getting past that first migration sprint. This phase requires a great deal of planning and preparation across multiple stakeholders to keep the project on time and within budget. Scheduling all of the resources and tracking the tasks that lead up to D-day is no simple job. These activities must follow a standard cadence of planning steps to ensure effective and efficient migrations.

4: Automate as much of the migration as possible.

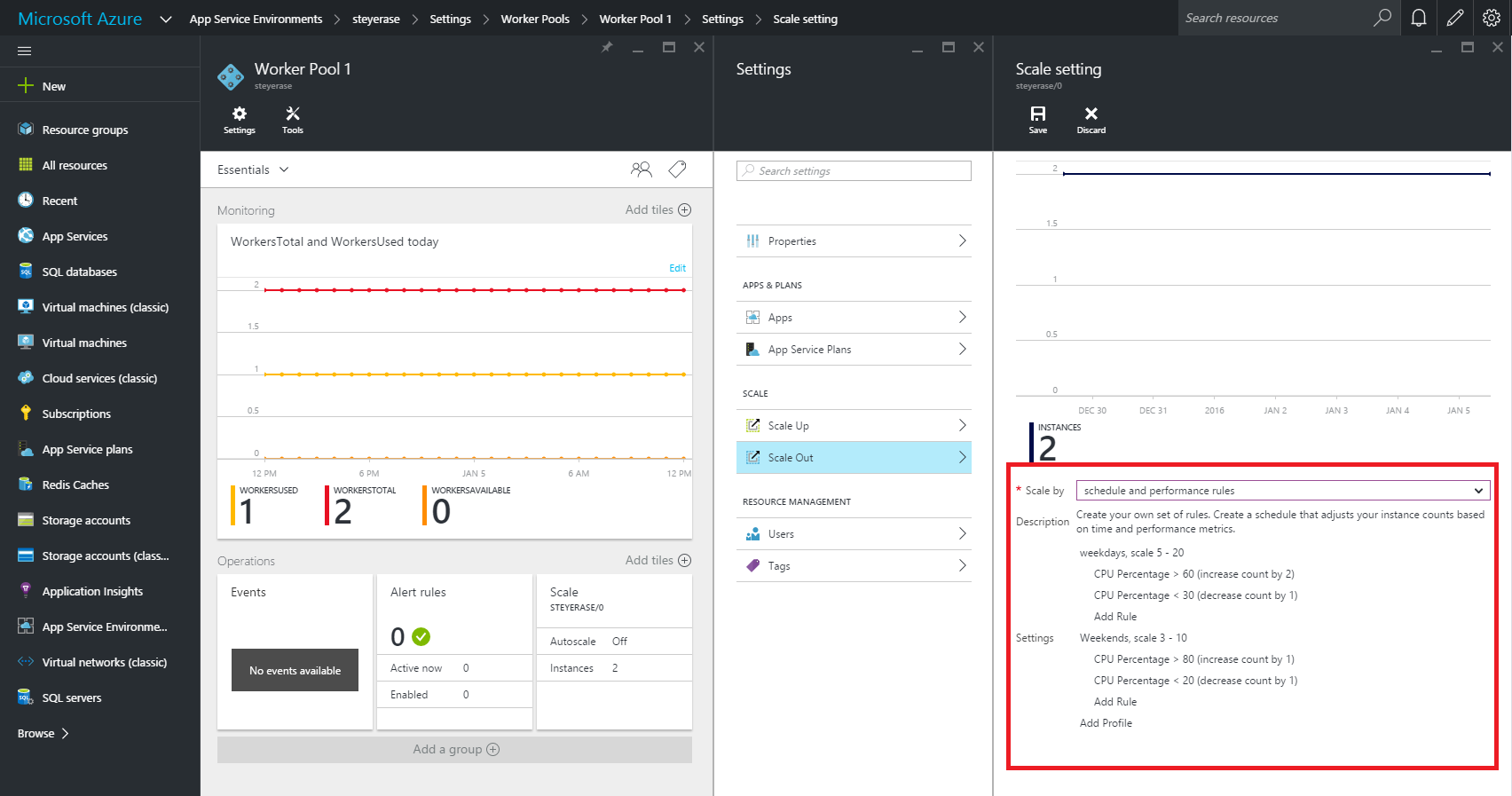

The way your organization performs the migration can also impact the project. In most cases, tools that automate the application transformation process, as well as the migration to cloud, can mean huge savings in time and money. Unfortunately, there’s no off-the-shelf automation tool that ensures success. The migration phase requires people who are experienced in a range of tools and who know how to orchestrate the work.

One could argue that a fifth way to ease migration headaches is optimizing applications during the process. However, there are different schools of thought on whether to optimize applications and workloads before, during or after migration. I can’t say there is a definitive right or wrong answer, but most organizations prefer to optimize after migration. This allows for continuous tuning and optimization of the application moving forward.

It is important to note that steps in the migration process are not one-and-done activities. The best migration and transformation services are iterative and continuous.

You can’t determine readiness only once. The readiness plan needs to be reviewed on an ongoing basis. Don’t analyze and design migrations just once. Do it sprint by sprint, and plan each migration activity. Make sure you continuously collect and refresh data, refine and prioritize the backlog, and look for key lessons learned to ensure continuous improvement throughout the lifecycle of the entire migration. Experience and planning can go a long way toward easing your next application migration.