
DevOps is the combination of cultural philosophies, practices, and tools that increases an organisation's ability to deliver products and services at high velocity: evolving and improving products faster than organisations that rely on traditional software development and infrastructure management processes. This speed enables companies to serve their customers better and stay ahead of their competitors. DevOps is the offspring of agile software development, born from the need to keep up with the increased development speed and throughput that agile methods have achieved. Advances in agile culture and methods over the past few years revealed the need for a more holistic approach to the end-to-end software delivery lifecycle.

Who is a DevOps Engineer?

A DevOps Engineer is a professional who understands the Software Development Lifecycle and has in-depth knowledge of the automation technologies used to build advanced pipelines (such as CI/CD). DevOps Engineers work with developers and the IT team to manage code releases. They are either developers who become interested in deployment and network operations, or system administrators who have an interest in scripting and coding and move into the development side, where they can improve the planning of testing and deployment.

DevOps relies on frequent, incremental changes to the code so that testing and deployment can happen continuously. DevOps Engineers rarely write code from scratch, but they still need to understand it. They have to connect the different elements of the code, such as libraries and software development kits, and integrate other components, such as SQL data management or messaging tools, so that software releases and deployments run correctly on the operating system and the production infrastructure. This article walks you through the skills required to be a DevOps Engineer:

1. Knowledge of Prominent Automation Tools

DevOps is continually evolving. To guarantee that your DevOps abilities are up to the mark, you should keep yourself updated with the best DevOps tools. These DevOps tools facilitate faster bug fixes and improved operational support, along with increased team flexibility and agility. They result in happier and more engaged teams and promote cross-skilling, self-improvement and collaborative working. The top DevOps tools are:

a) Bamboo: Bamboo has numerous pre-built functionalities that help you automate your delivery pipeline, from builds to deployment. You don't need many plugins with Bamboo, as it does many things out of the box with fewer yet more efficient plugins.

b) Docker: Docker has been one of the most significant DevOps tools out there. Docker has made containerisation mainstream in the tech world, mostly because it makes distributed development possible and automates the deployment of your applications. It isolates applications into separate containers, so they become portable and more secure.

c) Git: Git is one of the most renowned DevOps tools and is extensively used across the DevOps industry. It’s a distributed source code management tool that is highly appreciated by remote team members, freelancers, and open-source contributors. Git enables you to track the progress of your development work.

d) Jenkins: Jenkins is a reliable and widely trusted automation tool for a great number of DevOps teams across the globe. It's an open-source CI/CD server that enables engineers to automate the various phases of the delivery pipeline. Its vast plugin ecosystem has made it a very popular tool. As of now, it offers more than 1,000 plugins and counting, so it integrates with most DevOps tools.

e) Raygun: Spotting bugs and finding performance issues is a fundamental need of the DevOps process. Raygun is an application performance monitoring tool that can help you discover bugs and track down performance issues through continuous monitoring.

f) Gradle: Gradle is a build tool that is used by tech giants like Google to build applications, and it is modelled in a way that is extensible at the most fundamental level. For instance, Gradle can be used for native development with C/C++ and can also be extended to cover other programming languages and platforms.

g) Ansible: Ansible is an open-source application deployment, configuration management, and software provisioning tool that works with Unix-based as well as Windows-based systems. This DevOps tool sets up the infrastructure for software development and also automates deployment and delivery.

h) Kubernetes: While Docker lets you run applications in containers, Kubernetes goes a step further by letting engineers run containers in a cluster in a secure way. With Kubernetes, developers can automatically manage, monitor, scale, and deploy cloud-native applications. Kubernetes works as a powerful orchestrator that manages communication among pods and directs them as a cluster.

i) Puppet: Puppet is a well-known tool used for configuration management. It is an open-source platform with a declarative language for describing system configuration. It can run on a variety of systems, including Unix-based systems, IBM mainframes, macOS servers, Cisco switches, and Microsoft Windows. It is mainly used to manage the configuration of multiple application servers at once.

j) ELK Stack: The ELK Stack is a combination of three open-source projects, Elasticsearch, Logstash, and Kibana, that helps you gather insights from your log data. With downloads in the millions, the ELK Stack is one of the most popular log management platforms. It is an excellent DevOps tool for organisations that need a centralised logging system. It comes with a powerful and flexible technology stack that can lighten the operations workload and also offer business insights at no extra cost.
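To make the idea behind declarative configuration management tools such as Ansible and Puppet a little more concrete, here is a minimal sketch in Python. It is not Puppet or Ansible syntax: the `plan_changes` function and the package names are hypothetical, and the only point is to show how a tool can compare a desired state with the actual state and act on the differences alone.

```python
from typing import Dict, List


def plan_changes(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """List the actions needed to move the actual state to the desired state."""
    actions = []
    for package, state in sorted(desired.items()):
        # Anything not recorded on the machine is treated as absent.
        current = actual.get(package, "absent")
        if current != state:
            actions.append(f"set {package}: {current} -> {state}")
    return actions


# Hypothetical desired and actual states for one server.
desired = {"nginx": "present", "telnet": "absent"}
actual = {"telnet": "present"}

for action in plan_changes(desired, actual):
    print(action)
```

Running the plan a second time against a server that already matches the desired state yields no actions, which is the property that makes this style of tool safe to run repeatedly.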

2. Programming Skills and a basic understanding of Scripting Languages

A DevOps Engineer need not be a coding expert but must have the fundamental knowledge of coding and scripting. These languages are mostly utilized in designing the automation processes and to achieve continuous integration/continuous delivery (CI/CD). Top DevOps Programming Languages are:

C: A great deal of foundational software, including operating system kernels, is written in C, and many other languages reuse its libraries to make programming easier. Learning C is worthwhile both to gain a basic understanding of coding and to work on projects such as KVM and QEMU.

JavaScript: Much of the modern web runs on JavaScript. Many of the best-known frameworks and libraries are written in JavaScript, from Angular to React and Node. Back-end performance isn't the only thing this language brings to the table: its massive community of developers means that there is always help available on GitHub or Stack Overflow. JavaScript is a safe bet for engineers.

Python: Python has been used to build cloud infrastructure projects and supports web applications through frameworks like Django. It is an approachable general-purpose language with a wide range of uses. Python also encourages good programming practices through its stylistic requirements, which help ensure that code written by one person is understandable to another. That is a significant feature in a DevOps world, where visibility should be constant.

Ruby: Ruby benefits from a huge collection of community-produced modules that anyone can incorporate into applications to add functionality without writing new code themselves. It allows a very flexible approach to programming and doesn't expect developers to follow one particular style when writing code.

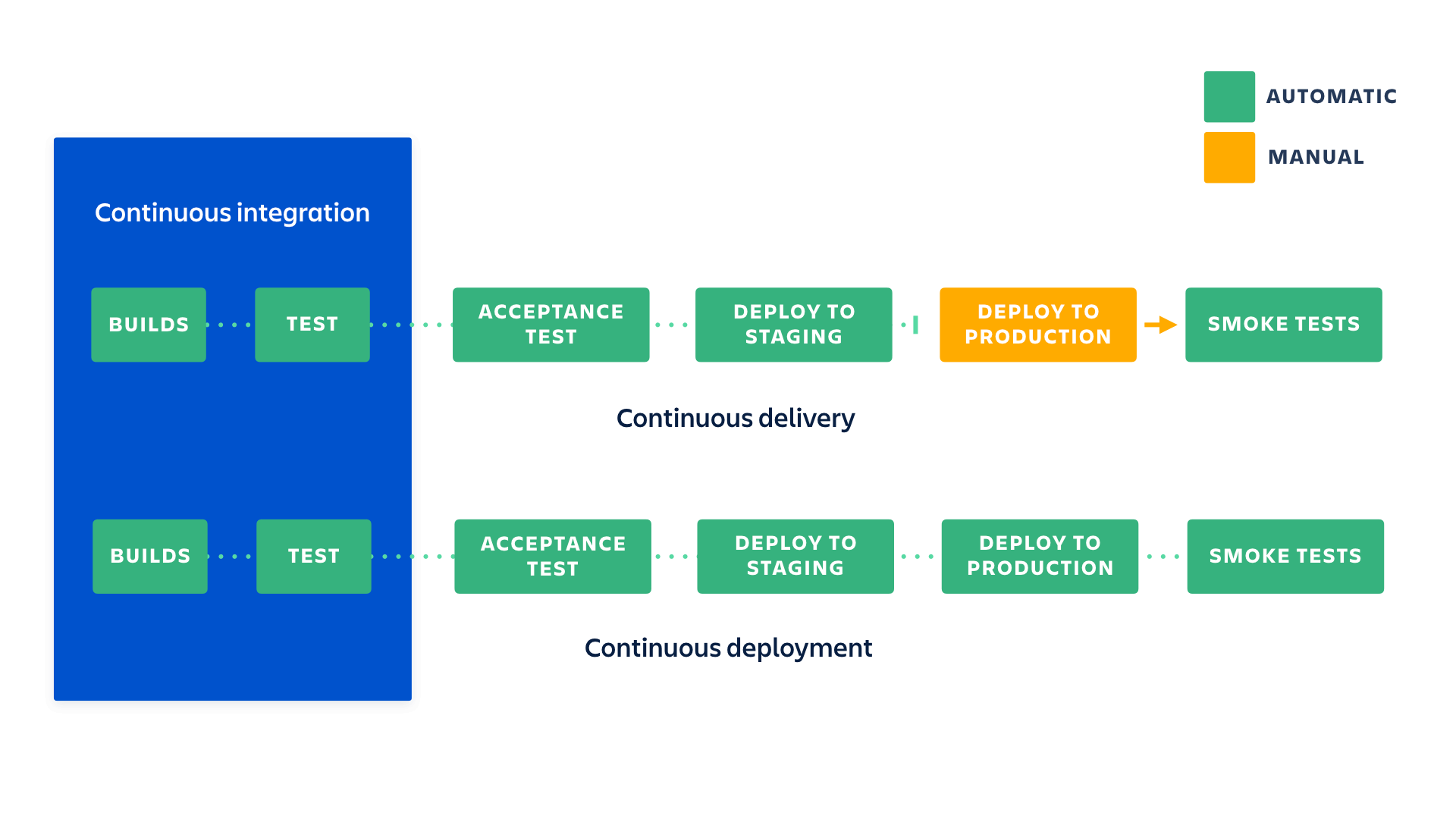

3. CI/CD (Continuous Integration/Continuous Delivery)

Knowledge of different automation tools isn't sufficient; you should also know where to use them. These tools should be used to enable Continuous Integration and Continuous Delivery. Continuous Integration and Continuous Delivery are the practices in which your development team makes frequent code changes that are pushed to the main branch, while ensuring that they don't break any changes made by developers working in parallel.
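As a rough illustration of how a CI/CD pipeline protects the main branch, the sketch below runs a list of stages in order and stops at the first failure. The `Stage` class, the `run_pipeline` function, and the stage names are all hypothetical; a real pipeline would call build and test tools instead of the placeholder lambdas used here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    """One pipeline step: a name plus a callable that returns True on success."""
    name: str
    run: Callable[[], bool]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns whether the whole pipeline passed and the names of the
    stages that completed successfully.
    """
    completed: List[str] = []
    for stage in stages:
        if not stage.run():
            return False, completed
        completed.append(stage.name)
    return True, completed


# Placeholder stages; each lambda stands in for a real build or test command.
stages = [
    Stage("build", lambda: True),
    Stage("unit-tests", lambda: True),
    Stage("deploy-to-staging", lambda: True),
]

ok, done = run_pipeline(stages)
print(ok, done)
```

Stopping at the first failed stage is the design choice that matters: a change that does not build or pass its tests never reaches the deployment step.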

4. Software Security

DevSecOps (Security DevOps) emerged as one of the tech buzzwords of the past year for a reason: while DevOps helps in creating and deploying software far more quickly, it also creates a lot of vulnerabilities, since security teams can't keep up with the faster cycle. In short, not only good code but also bugs and malware can now be shipped a lot faster. Introducing DevOps without mature security processes in the IT organisation is a disaster waiting to happen. DevOps engineers should therefore have the basic software security skills needed to bring security into the SDLC right from the start.
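One small example of bringing security into the pipeline early is scanning source code for hard-coded secrets before it is merged. The sketch below is only an illustration: the `find_secrets` function is hypothetical and the two patterns are deliberately simple, whereas dedicated scanners use much larger rule sets.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; they will miss many real secrets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hard-coded password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}


def find_secrets(source: str) -> List[Tuple[int, str]]:
    """Return (line number, finding label) pairs for suspicious lines."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, label))
    return findings


# The key below is the placeholder value used in AWS documentation examples.
sample = 'user = "bob"\npassword = "hunter2"\nkey = "AKIAIOSFODNN7EXAMPLE"\n'
for line_no, label in find_secrets(sample):
    print(f"line {line_no}: possible {label}")
```

A check like this could run as an early pipeline step, so that a commit containing a credential is rejected before it reaches the shared repository.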

5. Efficient Testing Skills

DevOps is hugely affected by how well testing is done in a tech company. You can't automate the DevOps pipeline if effective continuous testing, the process of executing automated tests, isn't in place. Continuous testing ensures that every automated test gets executed the way it should; otherwise there is a big risk of pushing faulty code straight to customers, which isn't acceptable.
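The sketch below shows, under simplified assumptions, how an automated test suite can act as a gate in the pipeline. It uses Python's standard `unittest` module; the `apply_discount` function is a hypothetical piece of application code, and `tests_pass` is an illustrative helper rather than part of any CI product.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical application code under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 150)


def tests_pass() -> bool:
    """Run the suite and report whether the change is safe to promote."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTests)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


print("release allowed" if tests_pass() else "release blocked")
```

In a real pipeline the result would typically be turned into a process exit code, which is what lets the CI server block a release when a test fails.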

6. Soft Skills

DevOps requires not only hard skills like coding and automation but also soft skills such as flexibility, self-motivation, and empathy. A DevOps engineer is someone who builds connections and removes bottlenecks, which is achieved by talking to people. Communication and collaboration are the skills that can make or break a DevOps Engineer in any organisation. They should understand how the organisation runs, who the people managing it are, and what the organisation's culture is, so that they avoid creating points of conflict and constraints.

Role of a DevOps Engineer

DevOps professionals come from a multitude of IT backgrounds and take up the role at different points in their careers. The role of a DevOps engineer is not as easy as it appears. It involves ensuring seamless integration among teams and deploying code successfully and continuously. The DevOps approach to software development requires frequent, incremental changes, and DevOps Engineers seldom code from scratch. However, they must understand the fundamentals of software development languages and be thoroughly familiar with the development tools used to write new code or update existing code.

A DevOps Engineer works alongside the development team to handle the coding and scripting needed to connect the elements of the code, such as SDKs or libraries, and to integrate other components, such as messaging tools or a SQL DBMS, that are required to run the software release on the operating systems and production infrastructure. They should be able to manage the IT infrastructure according to the software code it supports, whether in multi-tenant or hybrid cloud environments. The role also involves provisioning the required resources, selecting the appropriate deployment model, validating the release, and monitoring performance. DevOps Engineers can be either network engineers who have moved into coding or developers who have moved into operations. In either case, it is a cross-functional role that is growing rapidly in importance for the way software is developed and deployed in mission-critical applications.

Conclusion:

DevOps isn't very hard to understand. It just requires a person to have a lot of hard and soft skills. DevOps specialists should be able to do a great deal on the technical side, from using specific DevOps tools and managing infrastructure in the cloud to writing secure code and checking automated tests. They should be people who are passionate about what they do and who are ready to deliver a huge amount of value. They should be curious and proactive, empathetic and self-confident, strong and reliable. They should be able to put customers' needs above their teams' needs and take action when required. The DevOps role isn't simple, yet becoming a DevOps engineer is absolutely worth the effort. To get started, check how many of the DevOps skills highlighted in this article you already have. If you lack some of them, be proactive and start learning right now!