When we talk about the present, we don’t realize that we are actually talking about yesterday’s future. One such futuristic technology is machine learning app development, or the use of AI in mobile app development services. Over the next seven minutes, you will learn how machine learning is disrupting today’s mobile app development industry.

“Signature-based malware detection is dead. Machine learning based Artificial Intelligence is the most potent defence against the next-gen adversary and the mutating hash.” ― James Scott, Senior Fellow, Institute for Critical Infrastructure Technology

The era of generic services and simple technologies is long gone. Today we live in a highly machine-driven world, surrounded by machines capable of learning our behaviors and making our daily lives easier than we ever imagined possible.

Think about it more deeply and you realize how sophisticated a technology must be to learn, on its own, the behavioral patterns we follow subconsciously. These are not simple machines; they are highly advanced ones.

Today’s technological realm moves so fast that users quickly switch brands, apps, and technologies if one fails to meet their needs within the first five minutes of use. This pace has also intensified competition, and mobile app development companies simply cannot afford to fall behind in the race of ever-evolving technologies.

Today, machine learning is built into almost every mobile application we use. For instance, our food delivery app shows us restaurants that deliver the kind of food we like to order, our on-demand taxi apps show us the real-time location of our rides, and time management apps tell us the most suitable time to complete a task and how to prioritize our work. We no longer need to worry about simple, or even complicated, things because our mobile apps and smartphones handle them for us.

The statistics tell the same story:

- AI and machine learning-driven apps are a leading category among funded startups

- The number of businesses investing in ML is expected to double over the next three years

- 40% of US companies use ML to improve sales and marketing

- 76% of US companies have exceeded their sales targets because of ML

- European banks have increased product sales by 10% and lowered churn rates by 20% with ML

The goal of any business is to make a profit, and that is only possible when it gains new users and retains existing ones. It might sound bizarre to mobile app developers, but it is entirely true that machine learning app development has the potential to turn your simple mobile apps into gold mines. Let us see how:

How Can Machine Learning Benefit Mobile App Development?

- Personalisation: A machine learning algorithm attached to even a simple mobile application can analyze information from sources ranging from social media activity to credit ratings and make recommendations on every user’s device. In both web and mobile app development, machine learning can be used to learn:

- Who are your customers?

- What do they like?

- What can they afford?

- What words do they use to talk about different products?

Based on all of this information, you can classify your customers’ behaviors and use that classification for targeted marketing. Put simply, ML lets you offer customers and potential customers more relevant and enticing content, and gives the impression that your AI-powered app was customized especially for them.
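The article does not say which algorithm does the classifying; one common choice for grouping customers by behaviour is k-means clustering. The sketch below is a minimal illustration under that assumption. The two features (orders per month and average order value) and the customer data are hypothetical.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Group 2-D points into k clusters with Lloyd's k-means algorithm."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen customers
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each customer to the nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids

# Hypothetical customers: (orders per month, average order value in USD).
customers = [(1, 12.0), (2, 15.0), (1, 10.0), (8, 40.0), (9, 45.0), (10, 38.0)]
labels, centroids = kmeans(customers, k=2)
for customer, segment in zip(customers, labels):
    print(customer, "-> segment", segment)
```

Each resulting segment (here, occasional low spenders versus frequent high spenders) can then receive its own marketing content. Because k-means is distance-based, a production version would normally scale the features to comparable ranges first.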

Here are a few examples of brands using machine learning app development to their benefit:

- Taco Bell has a TacoBot that takes orders, answers questions and recommends menu items based on your preferences.

- Uber uses ML to provide an estimated time of arrival and cost to its users.

- ImprompDo is a time management app that employs ML to find a suitable time for you to complete your tasks and to prioritise your to-do list.

- Migraine Buddy is a healthcare app that uses ML to forecast the likelihood of a headache and recommend ways to prevent it.

- Optimise Fitness is a sports app that combines available sensor and genetic data to customise a highly individual workout program.

- Advanced Search: Machine learning lets you optimize the search options in your mobile applications, making search results more intuitive and contextual for users. ML algorithms learn from the different queries customers enter and prioritize results based on those queries. Beyond the search algorithms themselves, modern mobile applications let you gather user data, including search histories and typical actions. This data can be combined with behavioural data and search requests to rank your products and services and show the most relevant results.

Upgrades such as voice search or gesture search can be added for a better-performing application.
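To make "learning from queries" concrete, the sketch below shows one simple form of it: results that users clicked for a given query get a boost the next time someone searches for the same thing. This click-feedback heuristic is an illustrative assumption, not how any particular app ranks results; the class name, the `click_weight` parameter and the sample catalogue are hypothetical.

```python
from collections import defaultdict

class ClickRanker:
    """Re-rank search results by boosting items users clicked for the same query."""

    def __init__(self, click_weight=0.5):
        self.click_weight = click_weight  # score added per past click on an item
        self.clicks = defaultdict(int)    # (normalised query, item) -> click count

    @staticmethod
    def normalise(query):
        # Case- and whitespace-insensitive, so "Pizza " and "pizza" share statistics.
        return " ".join(query.lower().split())

    def record_click(self, query, item):
        self.clicks[(self.normalise(query), item)] += 1

    def rank(self, query, candidates):
        """candidates maps item -> base relevance score from an ordinary text search."""
        q = self.normalise(query)

        def score(item):
            return candidates[item] + self.click_weight * self.clicks.get((q, item), 0)

        # sorted() is stable, so items with equal scores keep their original order.
        return sorted(candidates, key=score, reverse=True)

ranker = ClickRanker()
candidates = {"pizza margherita": 2.0, "pizza oven": 2.0, "pizza cutter": 1.5}
for _ in range(3):
    ranker.record_click("Pizza ", "pizza cutter")
ranked = ranker.rank("pizza", candidates)
print(ranked)
```

After three clicks, "pizza cutter" overtakes items that matched the text slightly better. Real systems usually learn a ranking model from many such signals, but the feedback loop is the same.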

- Predicting User Behavior: The biggest advantage of machine learning app development for marketers is that they gain an understanding of users’ preferences and behavior patterns by inspecting different kinds of data, such as age, gender, location, search history, and app usage frequency. This data is the key to improving the effectiveness of your application and marketing efforts.


Amazon’s product suggestions and Netflix’s recommendations work on the same principle: ML helps create customized recommendations for each individual.

Beyond Amazon and Netflix, mobile apps such as Youbox, JJ Foodservice and Qloo entertainment use ML to predict user preferences and build user profiles accordingly.
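The recommenders behind Amazon and Netflix are far more sophisticated, but the core idea of "users who liked X also liked Y" can be sketched with item co-occurrence counts. The function names and the viewing histories below are hypothetical illustrations.

```python
from collections import Counter, defaultdict
from itertools import permutations

def build_cooccurrence(histories):
    """Count how often each ordered pair of items appears in the same user's history."""
    co = defaultdict(Counter)
    for items in histories.values():
        for a, b in permutations(set(items), 2):
            co[a][b] += 1
    return co

def recommend(user, histories, co, top_n=3):
    """Score items the user has not seen by how often they co-occur with items they have."""
    seen = set(histories[user])
    scores = Counter()
    for item in seen:
        for other, count in co[item].items():
            if other not in seen:
                scores[other] += count
    return [item for item, _ in scores.most_common(top_n)]

# Hypothetical viewing histories.
histories = {
    "ana": ["thriller", "sci-fi", "documentary"],
    "ben": ["thriller", "sci-fi"],
    "cai": ["sci-fi", "comedy"],
    "dee": ["thriller", "horror"],
}
co = build_cooccurrence(histories)
print(recommend("ben", histories, co))
```

Ben gets "documentary" first because it co-occurs with both genres he already watches. The same counts also serve as a crude user profile: the items a person has interacted with, weighted by what similar users chose.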

- More Relevant Ads: Many industry experts have stressed that the only way to move forward in this never-ending consumer market is to personalize every experience for every customer.

“Most analog marketing hits the wrong people or the right people at the wrong time. Digital is more efficient and more impactful because it can hit only the right people, and only at the right time.” – Simon Silvester, Executive Vice President Head of Planning at Y&R EMEA

According to a report by The Relevancy Group, 38% of executives are already using machine learning for mobile apps as a part of their Data Management Platform (DMP) for advertising.

By integrating machine learning into mobile apps, you can avoid annoying your customers with products and services they have no interest in. Instead, you can concentrate your energy on creating ads that cater to each user’s unique tastes.

Today, mobile app development companies can consolidate data with ML, which saves the time and money that would otherwise go into poorly targeted advertising and improves the company’s brand reputation.

For instance, Coca-Cola is known for customizing its ads by demographic. It gathers information about which situations prompt customers to talk about the brand and uses it to define the best way to serve advertisements.
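One simple way to match ads to a user's interests is to represent both as weighted tag vectors and compare them with cosine similarity, dropping ads that score below a threshold. This is a sketch of that general technique, not of any DMP or of Coca-Cola's system; the interest weights, ad tags and `threshold` value are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as {tag: weight} dicts."""
    dot = sum(weight * v.get(tag, 0.0) for tag, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def pick_ads(profile, ads, threshold=0.2, top_n=2):
    """Return the ads closest to the user's interests, skipping poor matches entirely."""
    scored = [(cosine(profile, tags), name) for name, tags in ads.items()]
    scored = [(s, name) for s, name in scored if s >= threshold]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_n]]

# Hypothetical interest profile inferred from app behaviour.
profile = {"running": 0.9, "nutrition": 0.6, "travel": 0.1}
ads = {
    "trail shoes": {"running": 1.0, "outdoors": 0.5},
    "protein bars": {"nutrition": 1.0, "running": 0.3},
    "luxury watches": {"fashion": 1.0},
}
print(pick_ads(profile, ads))
```

The threshold is what keeps irrelevant ads (here, the watches, with zero overlap) away from the user instead of merely ranking them last.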

- Improved Security Level: Besides being a very effective marketing tool, machine learning for mobile apps can also streamline and secure app authentication. Features such as image recognition and audio recognition make it possible for users to register their biometric data as an authentication step on their mobile devices. ML can also help you establish access rights for your customers.

Apps such as ZoOm Login and BioID use machine learning to let users protect various websites and apps with their fingerprints or faces. BioID even offers periocular (eye-region) recognition for partially visible faces.

ML can even prevent malicious traffic and data from reaching your mobile device: machine learning algorithms detect and block suspicious activity.
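Production security systems combine many signals and trained models. As a much simpler stand-in, the sketch below uses statistical anomaly detection: each client's request rate is compared with its own learned baseline, and a client far above it is blocked. The class name, the 3-standard-deviation threshold and the minimum history length are hypothetical choices.

```python
import statistics
from collections import defaultdict

class RateMonitor:
    """Block clients whose request rate jumps far above their own historical baseline."""

    def __init__(self, k=3.0, min_history=5):
        self.k = k                        # standard deviations above the mean that count as suspicious
        self.min_history = min_history    # observations needed before judging a client
        self.history = defaultdict(list)  # client id -> past requests per minute
        self.blocked = set()

    def observe(self, client, requests_per_minute):
        """Record one measurement; return True if the client is blocked."""
        past = self.history[client]
        if len(past) >= self.min_history:
            mean = statistics.mean(past)
            stdev = max(statistics.pstdev(past), 1.0)  # floor avoids dividing by ~0 on flat baselines
            if (requests_per_minute - mean) / stdev > self.k:
                self.blocked.add(client)
                return True  # the spike is not added, so it cannot inflate the baseline
        past.append(requests_per_minute)
        return client in self.blocked

monitor = RateMonitor()
for rate in [10, 12, 11, 9, 10, 11, 300]:
    print(rate, "blocked" if monitor.observe("phone-42", rate) else "ok")
```

Judging each client against its own history, rather than one global limit, lets naturally busy clients through while still catching sudden bursts typical of automated abuse.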

How Are Developers Using the Power of Artificial Intelligence in Mobile Application Development?

Now that we have seen what a machine learning app is, let us look at the advantages of AI-powered mobile apps, which are endless for users and mobile app developers alike. One of the most significant for developers is the ability to create hyper-realistic apps with artificial intelligence.

The best uses include:

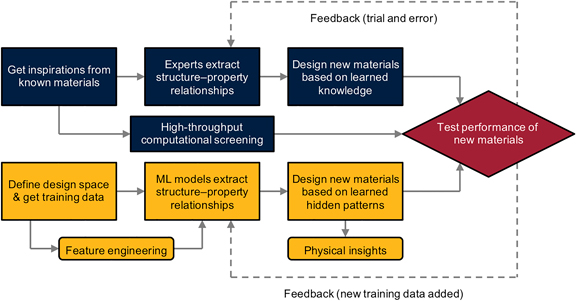

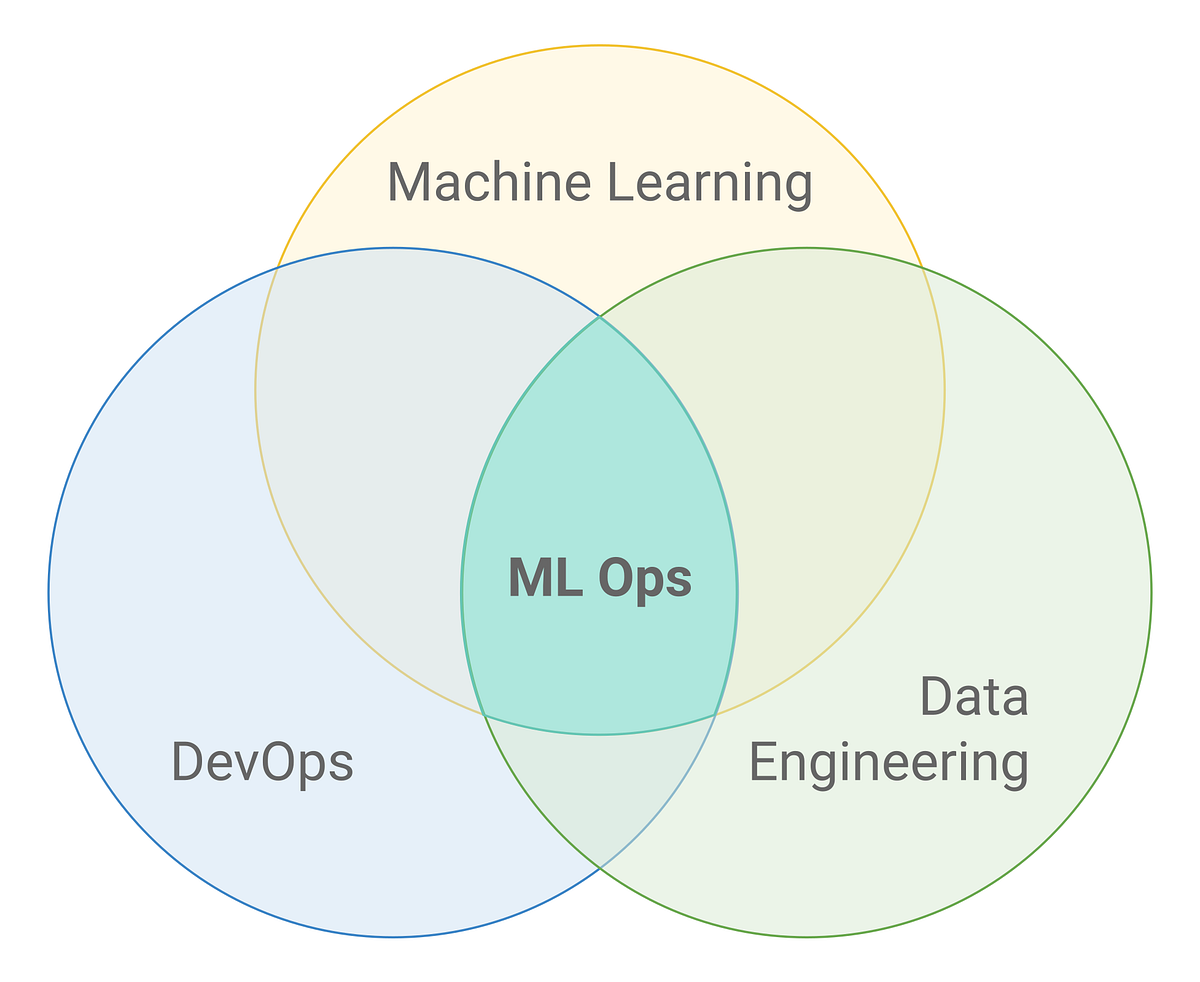

- Machine learning can be incorporated as a part of Artificial Intelligence in mobile technology.

- It can be used for predictive analysis, which processes large volumes of data to predict human behaviour.

- Machine learning for mobile apps can also be used to strengthen security and filter out harmful data.

Machine learning enables an optical character recognition (OCR) application to identify and remember characters that the developer may not have explicitly accounted for.

The same holds true for natural language processing (NLP) apps. Besides reducing development time and effort, combining AI with quality assurance also shortens the update and testing phases.

What Are the Challenges with Machine Learning, and How Can They Be Solved?

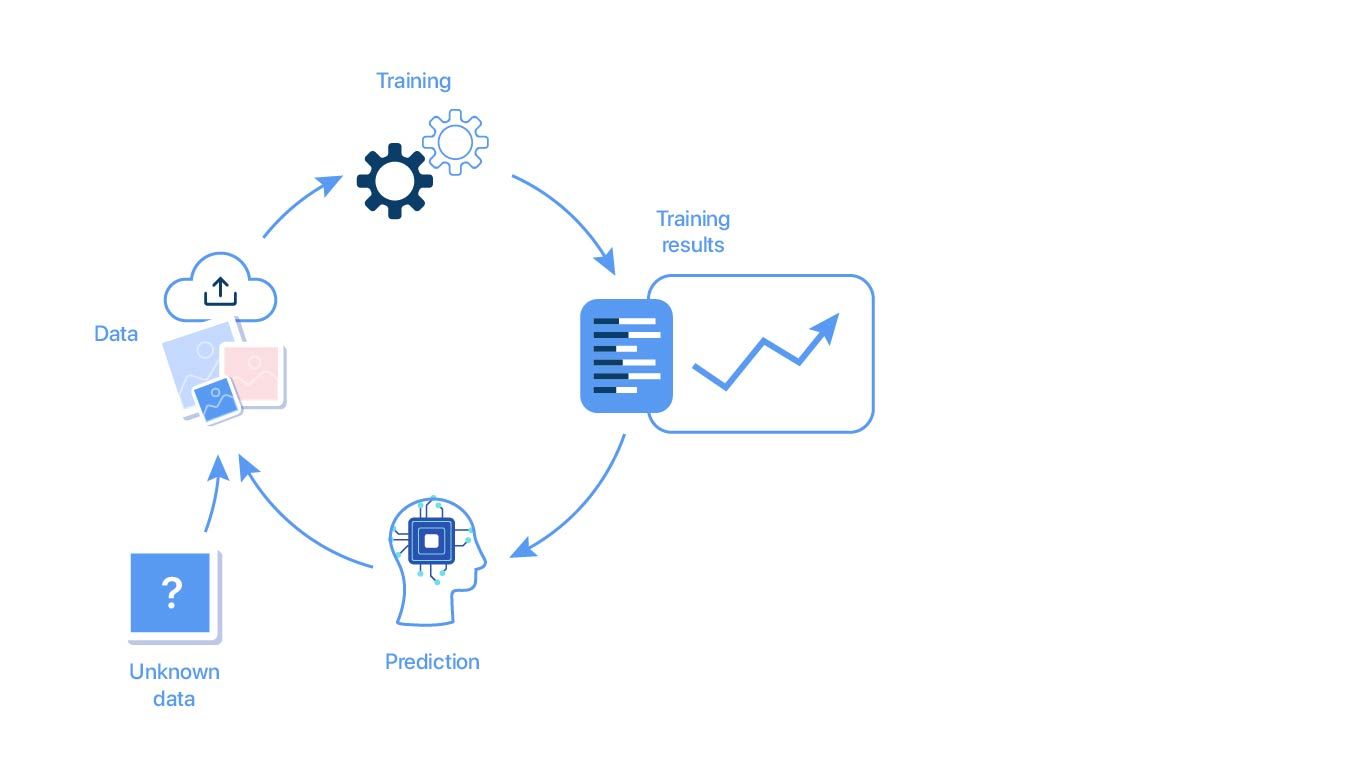

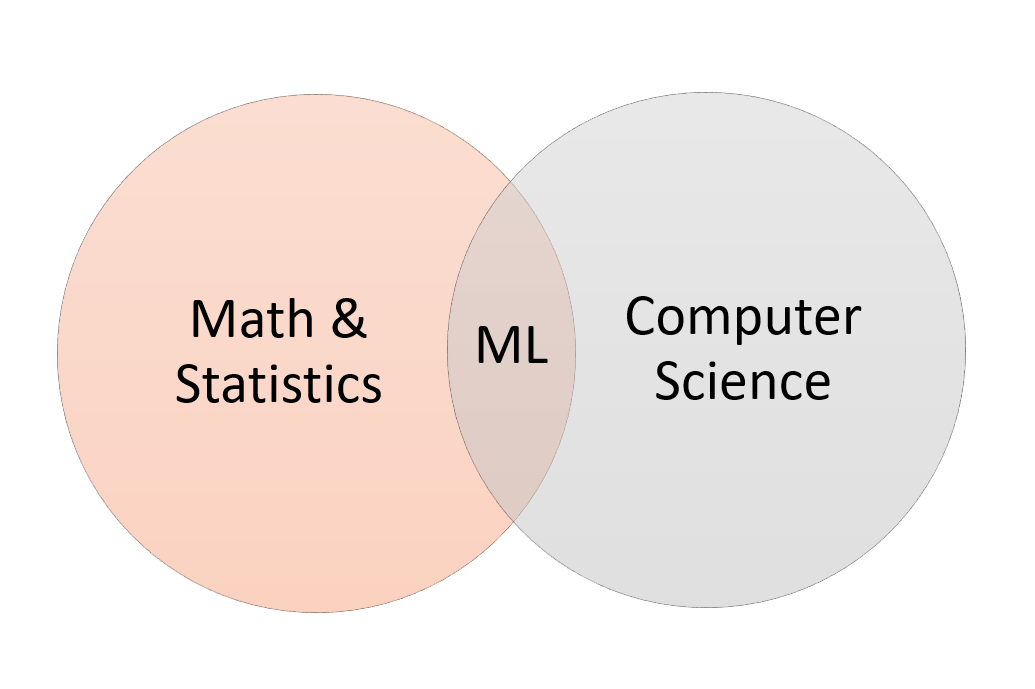

Like any other technology, machine learning comes with its own set of challenges. Its basic working principle depends on having enough data available as training samples, and the training set must be large enough for the algorithm to learn reliably.

To reduce the risk of the machine or mobile application misinterpreting visual cues or other digital information, the following methods can be used:

- Hard sample mining – When an image contains several objects similar to the main object, the machine is likely to confuse them if it has not been given enough examples. Training it on many examples that differentiate these objects, with extra emphasis on the hard, easily confused ones, is how the machine learns to identify the central object.

- Data augmentation – When the machine or mobile application must identify a central object in an image, modified versions of the entire image are created while the subject itself stays unchanged, enabling the app to recognize the main object in a variety of environments.

- Data addition imitation – In this method, part of the data is nullified and only the information about the central object is kept, so that the model learns from the main subject rather than from the surrounding objects.
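The three methods above can be sketched on tiny grayscale "images" stored as lists of pixel rows. This is a simplified illustration, not a training pipeline: hard sample mining is shown as picking the highest-loss examples for extra attention, augmentation as label-preserving flips and shifts, and "data addition imitation" is read here as masking everything outside the central object's bounding box. All function names and the `fraction` and `box` parameters are hypothetical.

```python
def hard_examples(losses, fraction=0.25):
    """Hard sample mining: indices of the highest-loss examples to emphasise next round."""
    count = max(1, int(len(losses) * fraction))
    return sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)[:count]

def flip_horizontal(image):
    """Mirror an image left to right; the object it shows is unchanged."""
    return [list(reversed(row)) for row in image]

def shift_right(image, pixels, fill=0):
    """Shift an image right by `pixels`, padding the left edge with `fill`."""
    return [[fill] * pixels + row[:len(row) - pixels] for row in image]

def augment(image, label):
    """Data augmentation: yield the original plus modified copies that keep the same label."""
    yield image, label
    yield flip_horizontal(image), label
    yield shift_right(image, 1), label

def mask_background(image, box, fill=0):
    """Keep pixels inside box = (top, left, bottom, right), end-exclusive; null out the rest."""
    top, left, bottom, right = box
    return [
        [px if top <= r < bottom and left <= c < right else fill for c, px in enumerate(row)]
        for r, row in enumerate(image)
    ]

image = [[1, 2, 3],
         [4, 5, 6]]
for variant, label in augment(image, "cat"):
    print(label, variant)
print(mask_background(image, (0, 1, 2, 3)))
print(hard_examples([0.1, 0.9, 0.3, 0.7], fraction=0.5))
```

In a real model the losses come from the current network's predictions, and the mined hard examples are usually oversampled or weighted more heavily in the next training pass.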

What Are the Best Platforms for Developing a Mobile Application with Machine Learning?

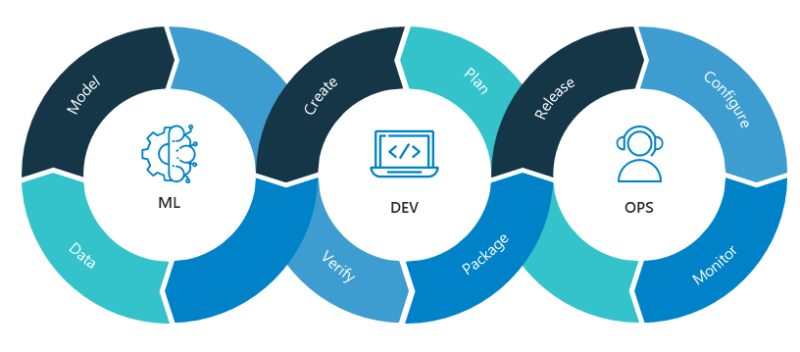


- Azure – Azure is Microsoft’s cloud platform. It has a very large support community, high-quality multilingual documentation, and many accessible tutorials. Its machine learning tools primarily support R and Python. Thanks to its advanced analytics, developers can create mobile applications with accurate forecasting capabilities.

- IBM Watson – The main characteristic of IBM Watson is that it lets developers process user requests comprehensively, regardless of format. Any kind of data, including voice notes, images, or printed text, is analyzed quickly using multiple approaches. No other platform offers this search method; other platforms rely on complex logical chains of artificial neural networks (ANNs) for search. This multitasking gives IBM Watson the upper hand in most cases because it identifies the option with the minimum risk.

- TensorFlow – Google’s open-source library, TensorFlow, lets developers create a range of solutions based on deep learning, which is often needed to solve nonlinear problems. TensorFlow applications learn from their interactions with users in their environment and gradually find the correct answers to user requests. However, this library is not the best choice for beginners.

- Api.ai – Api.ai (now Dialogflow) is a platform acquired by Google that is known for using contextual dependencies. It can be used very successfully to create AI-based virtual assistants for Android and iOS. Api.ai depends on two fundamental concepts: Entities and Roles. Entities are the central objects (discussed earlier), and Roles are accompanying objects that determine the central object’s activity. Furthermore, the creators of Api.ai have built a highly powerful database that strengthens their algorithms.

- Wit.ai – Wit.ai is largely similar to Api.ai. A prominent characteristic of Wit.ai is that it converts speech files into text. Wit.ai also offers a “history” feature that analyzes context-sensitive data and can therefore generate highly accurate answers to user requests, which is especially useful for chatbots on commercial websites. It is a good platform for creating Windows, iOS, or Android mobile applications with machine learning.
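For orientation, the sketch below prepares (but does not send) a text query for Wit.ai's HTTP `/message` endpoint, which returns the intents and entities Wit.ai extracts from an utterance. It uses only the Python standard library; the `version` date and the token are placeholders, so check the current Wit.ai HTTP API reference before relying on the parameters.

```python
import urllib.parse
import urllib.request

WIT_MESSAGE_URL = "https://api.wit.ai/message"

def build_wit_request(text, token, version="20240101"):
    """Build a GET request for Wit.ai's /message endpoint (version and token are placeholders)."""
    query = urllib.parse.urlencode({"v": version, "q": text})  # spaces become '+'
    return urllib.request.Request(
        f"{WIT_MESSAGE_URL}?{query}",
        headers={"Authorization": f"Bearer {token}"},  # Wit.ai uses bearer-token auth
    )

request = build_wit_request("book a table for two", "YOUR_SERVER_ACCESS_TOKEN")
print(request.full_url)
```

To actually call the service, pass the request to `urllib.request.urlopen` with a real server access token and parse the JSON response body with the `json` module.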

Some of the most popular apps, such as Netflix, Tinder, Snapchat, Google Maps, and Dango, use AI and machine learning to give their users a highly customised and personalised experience.

Machine learning is the way to go for mobile apps today because it gives your app enough personalization options to make it more usable, efficient, and effective. A great concept and UI are one pole of the magnet, but incorporating machine learning goes a step further toward giving your users the best experience.