Continuous delivery yields a host of IT and operational benefits, including proven competitive advantages like faster deployments, quicker responses to customer feedback, and faster bug fixes. But one benefit that tends not to make the marquee list, and should probably be headlining it, is security.

It’s really quite simple: with continuous delivery, crucial security enhancements, updates, and fixes to applications can be pushed live quickly, so the improved security reaches production without delay. What could be better than that?

Traditional slow and batch-oriented waterfall approach

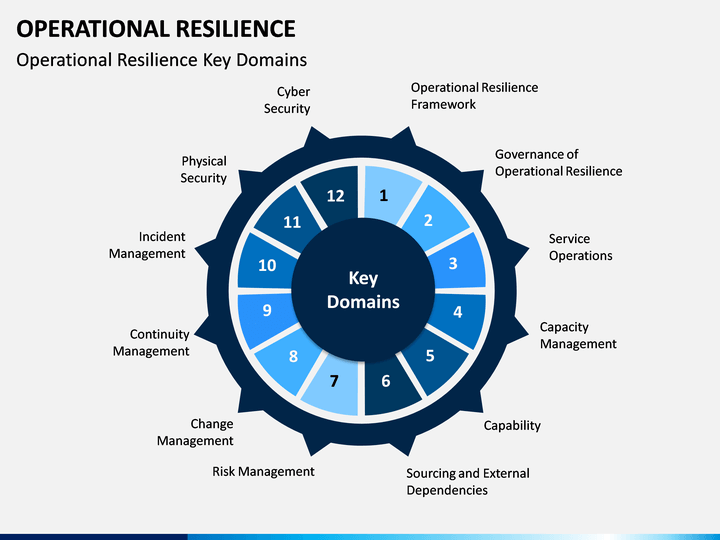

Typically, in the traditional ITSM approach, when a security incident happens, it is captured and consolidated with other requirements to be addressed in the next application release. Sometimes an urgent patch release can be delivered sooner, in a few weeks – if it can rapidly progress through the cycle of fix, regression testing, release preparation, release testing and maintenance. But if the fix requires a major release, it could be many months until it can be made available, and in most cases, the only thing you can do in the meantime is document the incidents.

That’s too slow.

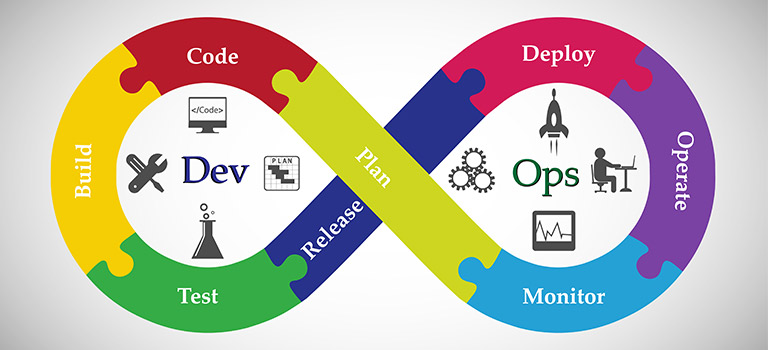

A better, faster way — continuous delivery and DevSecOps

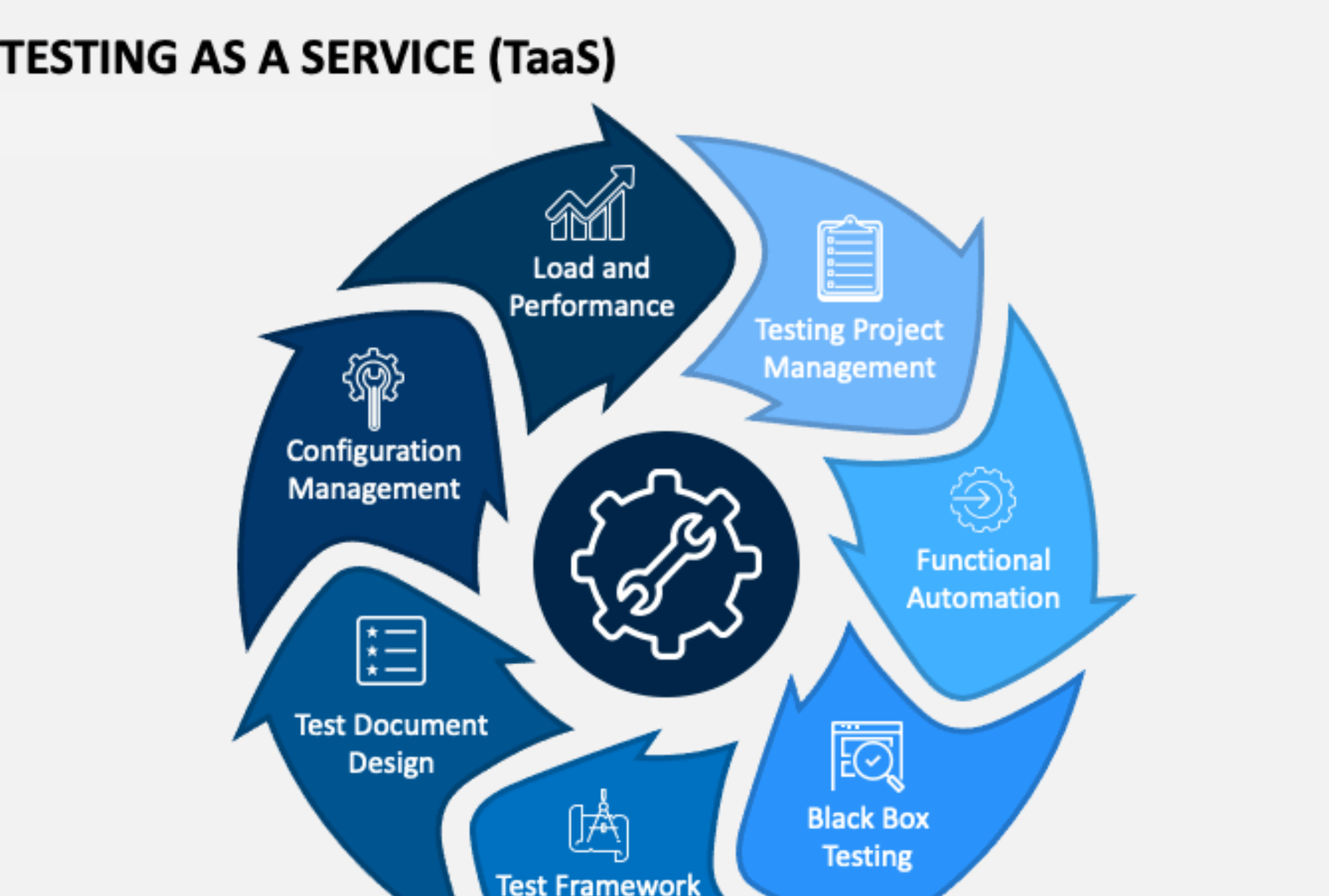

A modern service management approach combining continuous delivery and DevSecOps supports the core tenets of information security: data confidentiality, integrity, and availability. A dedicated team provides continuous delivery by making small or incremental changes every day or multiple times a day. DevSecOps secures the continuous integration and delivery pipeline, as well as the content that’s coming through that pipeline.

You gain three key advantages:

Speed. Continuous delivery and DevSecOps dramatically improve security because they allow malicious attacks and bugs to be addressed as soon as they’re identified, not just added to some logbook. And in many cases, the window for action falls from between six and eight weeks down to minutes. Thus, far fewer incidents become problems that impact IT and business operations.

Consistency. IT teams working under traditional ITSM often worry that the continuous delivery and DevSecOps approach will create more opportunity for mistakes and bugs because more changes are happening more often. In practice, the exact opposite is true: each change is small, so it is easier to test and verify, and easier to back out if something goes wrong.

Flexibility. A DevSecOps approach simplifies adding blue/green and canary releases (running a new release while continuing to operate the prior release) to your delivery capability. This allows you to redirect a modest amount of traffic to the new release, which helps you identify potential issues without affecting many users. It also lets you rapidly shift all traffic back to the prior release should a problem be identified.
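To make the traffic-shifting idea concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular platform implements it: the `CanaryRouter` class, its method names, and the `"stable"`/`"canary"` labels are all hypothetical. It sends a configurable share of users to the new release and can send everyone back to the prior release in one call.

```python
import hashlib


class CanaryRouter:
    """Hypothetical router that sends a share of users to a new release."""

    def __init__(self, canary_percent: int = 0) -> None:
        self.set_canary_percent(canary_percent)

    def set_canary_percent(self, percent: int) -> None:
        # Share of users (0-100) that should see the new release.
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.canary_percent = percent

    def route(self, user_id: str) -> str:
        # Hash the user id into a stable bucket from 0 to 99, so the same
        # user always lands on the same release for a given percentage.
        digest = hashlib.sha256(user_id.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "big") % 100
        return "canary" if bucket < self.canary_percent else "stable"

    def rollback(self) -> None:
        # Shift all traffic back to the prior release.
        self.set_canary_percent(0)
```

In this sketch you would start with something like `CanaryRouter(canary_percent=5)`, watch the new release for problems, and either raise the percentage step by step or call `rollback()`.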

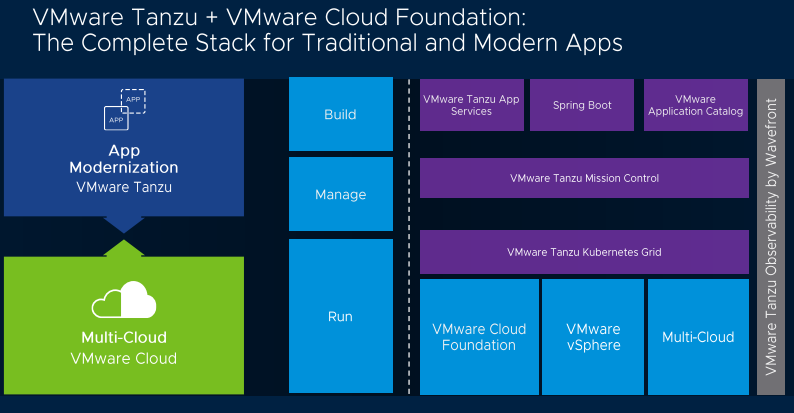

The modern approach offers a variety of powerful tactics for quickly countering attacks. For example, workloads can be designed to move between cloud providers using Pivotal Cloud Foundry, containers, or other homogenizing technology. If there is a big denial of service attack on one provider, you could redeploy to another provider or back to a private data center with the click of a button. If an attack is focused on a particular IP, you could recreate the environment at a new IP and block the old one completely. Structuring applications in this kind of push-button deployment mode creates opportunities for all sorts of similar scenarios.
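The decision logic behind those two tactics can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `Deployment` and `FailoverPlan` names, the provider labels, and the example addresses (taken from a range reserved for documentation) are hypothetical. A real setup would call your platform's own deployment and firewall tooling at each step.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass, field


@dataclass
class Deployment:
    provider: str
    ip: str


@dataclass
class FailoverPlan:
    """Hypothetical record of where a workload may run and what is blocked."""

    providers: list[str]  # in order of preference
    under_attack: set[str] = field(default_factory=set)
    blocked_ips: set[str] = field(default_factory=set)

    def fail_over(self, current: Deployment, new_ip: str) -> Deployment:
        # Mark the attacked provider and pick the first healthy alternative.
        ipaddress.ip_address(new_ip)  # raises ValueError on a malformed IP
        self.under_attack.add(current.provider)
        for provider in self.providers:
            if provider not in self.under_attack:
                return Deployment(provider, new_ip)
        raise RuntimeError("no healthy provider available")

    def move_ip(self, current: Deployment, new_ip: str) -> Deployment:
        # Stay with the same provider, but block the targeted address.
        ipaddress.ip_address(new_ip)  # raises ValueError on a malformed IP
        self.blocked_ips.add(current.ip)
        return Deployment(current.provider, new_ip)
```

The point of the sketch is that once deployment is push-button, responding to an attack becomes a small, repeatable decision rather than a one-off project.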

How to move forward

Realizing the security benefits that come from implementing continuous integration and DevSecOps may require a deep, cultural change in the way your company builds and delivers software. Increasingly, security will become a secondary competency of developers, with risk ownership devolving from the central security team to application owners. In this new mode of operating, we need to make sure the right guard rails are in place and that the central security team provides necessary mentorship and support.

It’s a challenge, no question. But worth the rewards.

A recent post, “How to jump start your enterprise digital transformation,” explores how to navigate some of these changes. A seven-page paper, “DevSecOps: Why security is essential,” is another good resource.