As blockchain evolves, many startups and developers are exploring and dissecting the potential of the technology in all its aspects. They are not only interested in how the technology can revamp their existing business models, but are also keeping up with all the new buzzwords it has spawned. While some use these terms as synonyms, others want to understand the differences between them.

One such recently coined term is Distributed Ledger Technology (DLT).

“What is DLT?” has become one of the buzz questions of the moment, with “How is it similar to or different from Blockchain?” a close second.

Let’s cover both in this article. But first, let’s take a quick look at Blockchain vs DLT through one platform example of each: Ethereum (Blockchain) and R3 Corda (DLT).


This should give you some insight into the difference between Blockchain and DLT. Now let’s jump into the decentralized world and study them in detail, starting with the basics of Blockchain technology.

A Simple Definition of Blockchain Technology

Blockchain is a decentralized, distributed, and often public type of database in which data is saved in blocks, and each block contains a hash computed from the data of the previous block. These blocks offer characteristics such as transparency and immutability that make brands and developers interested in investing their time and effort in Blockchain development.
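To make the hash-linking concrete, here is a minimal Python sketch. The names `Block`, `compute_hash`, and `build_chain` are hypothetical, and hashing a JSON encoding of each block with SHA-256 is an assumption for illustration, not how any particular blockchain serializes its blocks.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import List


def compute_hash(index: int, data: str, prev_hash: str) -> str:
    # Serialize the block's contents deterministically, then hash them with SHA-256.
    payload = json.dumps({"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


@dataclass
class Block:
    index: int
    data: str
    prev_hash: str
    block_hash: str = field(init=False)

    def __post_init__(self) -> None:
        # The hash covers the data AND the previous block's hash,
        # which is what links each block to the one before it.
        self.block_hash = compute_hash(self.index, self.data, self.prev_hash)


def build_chain(records: List[str]) -> List[Block]:
    chain = [Block(0, "genesis", "0" * 64)]  # the first block has no predecessor
    for record in records:
        chain.append(Block(len(chain), record, chain[-1].block_hash))
    return chain


chain = build_chain(["Alice pays Bob 5", "Bob pays Carol 2"])
for block in chain:
    print(block.index, block.prev_hash[:8], block.block_hash[:8])
```

Because every hash depends on the previous one, changing any earlier block changes all the hashes that follow it.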

Advantages of Blockchain:

- Blockchain technology enables businesses to verify any transaction without involving any intermediaries.

- Since transactions stored in the blocks are replicated across many (often thousands of) devices participating in the Blockchain ecosystem, the risk of data loss is minimal.

- As consensus protocols are used to verify every entry, double entries (double-spending) and fraud become extremely difficult.

- Blockchain technology also offers transparency in the network, which makes it easy for participants to follow transactions in near real time.
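The first and third advantages follow from the hash links: anyone holding a copy of the chain can recompute the hashes and detect an altered entry without asking an intermediary. The sketch below assumes a simplified block layout (a dict with `index`, `data`, `prev_hash`, and `hash`); `block_hash`, `make_chain`, and `verify_chain` are hypothetical names.

```python
import hashlib
import json
from typing import Dict, List


def block_hash(block: Dict) -> str:
    # Hash every field except the stored hash itself.
    body = {k: v for k, v in block.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def make_chain(records: List[str]) -> List[Dict]:
    chain: List[Dict] = []
    prev = "0" * 64
    for i, data in enumerate(records):
        block = {"index": i, "data": data, "prev_hash": prev}
        block["hash"] = block_hash(block)
        chain.append(block)
        prev = block["hash"]
    return chain


def verify_chain(chain: List[Dict]) -> bool:
    """Any participant holding a copy can run this check; no intermediary is needed."""
    for i, block in enumerate(chain):
        if block["hash"] != block_hash(block):
            return False  # block contents were altered after hashing
        expected_prev = "0" * 64 if i == 0 else chain[i - 1]["hash"]
        if block["prev_hash"] != expected_prev:
            return False  # the link to the previous block is broken
    return True


chain = make_chain(["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dan 1"])
print(verify_chain(chain))  # True

chain[1]["data"] = "Bob pays Carol 200"  # tamper with a stored transaction
print(verify_chain(chain))  # False
```

The check still fails if the tamperer also recomputes the altered block's hash, because the next block's `prev_hash` no longer matches. Rewriting history means rewriting every later block, which consensus makes impractical on a widely replicated chain.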

The technology came into the limelight as the backbone of cryptocurrencies, but soon found its place in different business verticals, including Healthcare, Travel, Real Estate, Retail, Finance, and On-demand. An overview of the industries that Blockchain is disrupting can be seen in this image:

With this covered, let’s turn to DLT.

A Brief Introduction to Distributed Ledger Technology (DLT)

Distributed Ledger Technology (DLT) is a digital system for recording transactions of assets in which the data is stored at multiple places simultaneously. It might sound like a traditional database, but it differs in that there is no centralized storage place or administration. Instead, every node of the ledger processes and validates every item, and in this way contributes to generating a record of each item and building consensus on each item’s veracity.
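The idea that every node validates every item can be sketched as a toy replicated ledger. This is a simplified illustration under our own assumptions: transactions are `(sender, receiver, amount)` tuples, the only validation rule is “the sender has enough balance”, and an item is recorded on all nodes once a simple majority of nodes approve it. `Node` and `submit` are hypothetical names, not part of any DLT platform’s API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Tx = Tuple[str, str, int]  # (sender, receiver, amount)


@dataclass
class Node:
    name: str
    balances: Dict[str, int]
    ledger: List[Tx] = field(default_factory=list)

    def validate(self, tx: Tx) -> bool:
        sender, _, amount = tx
        # Each node checks the transaction against its own copy of the state.
        return amount > 0 and self.balances.get(sender, 0) >= amount

    def apply(self, tx: Tx) -> None:
        sender, receiver, amount = tx
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount
        self.ledger.append(tx)


def submit(nodes: List[Node], tx: Tx) -> bool:
    """Record tx on every node only if a majority of nodes validate it."""
    votes = sum(node.validate(tx) for node in nodes)
    if votes * 2 > len(nodes):
        for node in nodes:
            node.apply(tx)  # every copy of the ledger receives the same record
        return True
    return False


genesis = {"alice": 10, "bob": 0}
nodes = [Node(f"node{i}", dict(genesis)) for i in range(3)]
print(submit(nodes, ("alice", "bob", 4)))   # True: alice has enough
print(submit(nodes, ("bob", "alice", 50)))  # False: bob only has 4
print([n.ledger for n in nodes])
```

There is no central database here: each node holds a full copy, and the copies stay identical because they apply only what the majority accepted.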

A timeline of DLT’s evolution can be seen in this image:

The concept is attracting many app development companies thanks to the advantages listed below.

Advantages of DLT:

- In DLT, data is highly tamper-resistant as long as the ledger remains distributed across independent nodes.

- It offers a highly secure and trustworthy experience.

- A decentralized, private distributed network enhances the robustness of the system and helps ensure continuous operation even when individual nodes fail.

Now that we have looked at what the two terms mean, let’s examine the relationship between DLT and Blockchain before comparing the two.

Relation between Blockchain and DLT

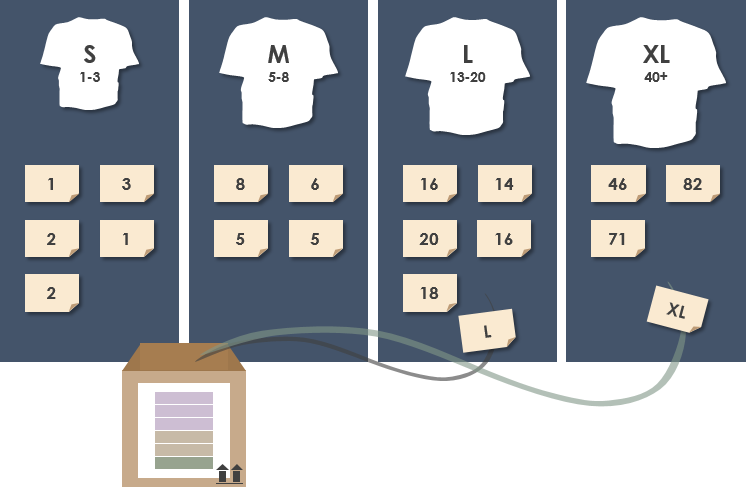

As depicted in the image above, Blockchain is just one part of the vast DLT ecosystem. It is a type of DLT in which records are stored in blocks after being validated cryptographically. That is, a hash computed from the data in a block is included in the next block, so the blocks link together into a chain.

“Every blockchain is a distributed ledger, but not every distributed ledger is a blockchain.”

Although you may be eager to compare Blockchain and DLT right away, let’s take a short detour. We will first learn about other types of DLT besides Blockchain and then move to the core part of the article: Blockchain vs DLT.

Here are the popular forms of DLT that exist apart from Blockchain.

1. Holochain

Holochain, in simplified terms, is a type of DLT that relies neither on a global consensus model nor on tokenization.

Here, each participating node keeps its own secure ledger and can act independently, while still interacting with other nodes on the network to meet the basic needs of decentralization. In this way, it lets you build more customized and scalable solutions than a Blockchain typically allows.
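A simplified sketch of this agent-centric model: each agent appends entries to its own hash-linked chain, and an entry is kept only if it passes validation rules that peers share, with no network-wide vote. This is an illustration under our own assumptions, not Holochain’s API; `Agent`, `peer_validate`, and the sample rules are hypothetical.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


def sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass
class Agent:
    name: str
    # Each agent's own hash-linked chain of (entry, prev_hash, entry_hash) records.
    chain: List[Tuple[str, str, str]] = field(default_factory=list)

    def commit(self, entry: str) -> str:
        prev = self.chain[-1][2] if self.chain else "0" * 64
        entry_hash = sha256(self.name + entry + prev)
        self.chain.append((entry, prev, entry_hash))
        return entry_hash


def peer_validate(entry: str, rules: List[Callable[[str], bool]]) -> bool:
    # Peers check the entry against shared application rules; no global vote is held.
    return all(rule(entry) for rule in rules)


rules: List[Callable[[str], bool]] = [lambda e: len(e) <= 140, lambda e: "spam" not in e]
alice, bob = Agent("alice"), Agent("bob")

# Agents act independently: each commits to its own chain, in any order.
for agent, entry in [(alice, "hello"), (bob, "hi there"), (alice, "buy spam now")]:
    if peer_validate(entry, rules):
        agent.commit(entry)

print(len(alice.chain), len(bob.chain))  # 1 1
```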

2. Hashgraph

Another form of DLT on the market is Hashgraph. It is a patented consensus algorithm that aims to deliver the benefits of Blockchain (decentralization, security, and distribution) without compromising on transaction speed. To achieve this, it relies on a “gossip about gossip” protocol and a virtual voting technique.

One real-life implementation of Hashgraph, promoted as a potential replacement for Blockchain, is Hedera Hashgraph, about which you can learn more in this blog.
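The “gossip about gossip” idea can be illustrated by the shape of a hashgraph event: each event stores the hash of its creator’s previous event (self-parent) and the hash of the latest event from the member it just synced with (other-parent), so the graph records who heard what from whom. This sketch is a simplification under stated assumptions: a single shared graph stands in for each member’s local copy, sync partners are chosen at random, and virtual voting (which Hashgraph runs over this graph to order events) is not shown. All names are hypothetical.

```python
import hashlib
import random
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Event:
    creator: str
    self_parent: Optional[str]   # hash of the creator's own previous event
    other_parent: Optional[str]  # hash of the latest event of the member it heard from
    payload: str                 # e.g. transactions carried by the event

    @property
    def event_id(self) -> str:
        raw = f"{self.creator}|{self.self_parent}|{self.other_parent}|{self.payload}"
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def gossip(members: List[str], rounds: int, seed: int = 0) -> Dict[str, Event]:
    """Grow a toy hashgraph: each sync creates one event with two parent hashes."""
    rng = random.Random(seed)
    graph: Dict[str, Event] = {}
    latest: Dict[str, str] = {}
    for m in members:  # every member starts with an initial event
        e = Event(m, None, None, f"{m}-start")
        graph[e.event_id] = e
        latest[m] = e.event_id
    for r in range(rounds):
        sender, receiver = rng.sample(members, 2)
        # The new event records whom the receiver heard from and which of their
        # events it had seen: this history of syncs is the "gossip about gossip".
        e = Event(receiver, latest[receiver], latest[sender], f"tx-{r}")
        graph[e.event_id] = e
        latest[receiver] = e.event_id
    return graph


graph = gossip(["A", "B", "C", "D"], rounds=10)
print(len(graph))  # 14: 4 initial events + 10 sync events
```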

3. Directed Acyclic Graph (DAG)

Directed Acyclic Graphs (DAGs), such as IOTA’s Tangle, are another prominent type of DLT in the tech world.

Under this concept, each new transaction is linked to one or more earlier transactions instead of to a single previous block, so multiple branches are created and managed at the same time while remaining interconnected. Unlike a Blockchain, which grows strictly serially, a DAG grows both serially and in parallel.
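The parallel growth of a DAG can be sketched with Tangle-style approvals, where each new transaction approves up to two earlier, not-yet-approved transactions (“tips”). Assumptions for this hypothetical sketch: transactions arrive in batches that only see the tips existing before the batch, and tips are picked uniformly at random, whereas real tip-selection algorithms are more elaborate.

```python
import random
from typing import Dict, List, Set


def build_tangle(batches: int, per_batch: int, seed: int = 0) -> Dict[int, List[int]]:
    """Map each transaction id to the earlier transaction ids it approves."""
    rng = random.Random(seed)
    approves: Dict[int, List[int]] = {0: []}  # transaction 0 is the genesis
    tips: Set[int] = {0}  # transactions not yet approved by anyone
    next_id = 1
    for _ in range(batches):
        # Transactions arriving together only see the tips that existed before them,
        # so several of them attach in parallel to the same part of the graph.
        visible = sorted(tips)
        new_ids: List[int] = []
        for _ in range(per_batch):
            # Approve up to two tips chosen uniformly at random (a simplification).
            approves[next_id] = rng.sample(visible, min(2, len(visible)))
            new_ids.append(next_id)
            next_id += 1
        approved = {parent for tx in new_ids for parent in approves[tx]}
        tips = (tips - approved) | set(new_ids)
    return approves


tangle = build_tangle(batches=3, per_batch=3)
for tx, parents in tangle.items():
    print(tx, "approves", parents)
```

Transactions 1, 2, and 3 all attach to the genesis in parallel, something a single chain of blocks cannot express, and because each transaction only approves earlier ones, the graph stays acyclic.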


Factors to Consider While Comparing Blockchain and DLT

1. Consensus model

The foremost factor to focus on when comparing Blockchain and DLT is the consensus mechanism.

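Since only a limited number of known nodes participate in many DLTs, they can reach agreement through lighter-weight mechanisms, such as voting among approved validators or a notary service like the one R3 Corda uses, rather than an open, resource-intensive competition. The same is not true of a public Blockchain, where anyone can participate and contribute to adding a new block to the chain, so the network needs a consensus mechanism, such as proof of work, that functions among anonymous and untrusted participants.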
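The contrast can be sketched in a few lines: an open network makes block proposals costly through proof of work, while a permissioned network simply counts votes from a known validator set. Both functions are hypothetical simplifications; the difficulty (leading zero hex digits), the two-thirds quorum, and the validator names are assumptions chosen for illustration.

```python
import hashlib
from typing import Dict, Set


def proof_of_work(block_data: str, difficulty: int) -> int:
    """Open network: find a nonce whose SHA-256 digest starts with `difficulty` zeros.

    The search is expensive, which makes flooding the network with block proposals costly.
    """
    target = "0" * difficulty
    nonce = 0
    while not hashlib.sha256(f"{block_data}{nonce}".encode("utf-8")).hexdigest().startswith(target):
        nonce += 1
    return nonce


def permissioned_approve(votes: Dict[str, bool], validators: Set[str]) -> bool:
    """Closed network: accept if at least two thirds of the known validators vote yes."""
    yes = sum(1 for name, ok in votes.items() if ok and name in validators)
    return 3 * yes >= 2 * len(validators)  # integer form of yes / total >= 2/3


nonce = proof_of_work("block 1: Alice pays Bob 5", difficulty=4)
digest = hashlib.sha256(f"block 1: Alice pays Bob 5{nonce}".encode("utf-8")).hexdigest()
print(digest[:4])  # 0000

validators = {"bank_a", "bank_b", "notary"}
print(permissioned_approve({"bank_a": True, "bank_b": True, "notary": False}, validators))  # True
print(permissioned_approve({"bank_a": True, "outsider": True}, validators))  # False
```

In the permissioned case, the outsider’s vote is ignored entirely; trust comes from knowing who the validators are rather than from computational cost.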

2. Block structure

Another factor to keep an eye on when differentiating between Blockchain and DLT is block structure.

While blocks are added in the form of a chain in a Blockchain, they can be organized in different forms in the case of Distributed Ledger technology.

3. Tokens

Tokens, i.e., the programmable assets governed by smart contracts or by the underlying distributed ledger, are also one of the prime factors for comparing Blockchain and DLT.

While tokens are essential in public Blockchains, where they reward the participants who validate transactions, they are not required in many DLTs. The reason is that only invited (limited) nodes are allowed to participate in and validate transactions in a DLT environment, which keeps the ecosystem small and removes the need for such incentives.

However, you might still need tokens when you wish to block spamming, for example by charging a small fee for every transaction so that flooding the network becomes costly.
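A minimal sketch of tokens used as an anti-spam measure, assuming a flat fee per transaction that is simply deducted (burned) from the sender. `FeeLedger` and its parameters are hypothetical.

```python
from typing import Dict


class FeeLedger:
    """Toy ledger that charges a flat token fee for every transaction."""

    def __init__(self, balances: Dict[str, int], fee: int) -> None:
        self.balances = dict(balances)
        self.fee = fee

    def submit(self, sender: str, receiver: str, amount: int) -> bool:
        cost = amount + self.fee  # the fee is burned in this sketch
        if self.balances.get(sender, 0) < cost:
            return False  # sender cannot cover amount + fee, so the transaction is rejected
        self.balances[sender] -= cost
        self.balances[receiver] = self.balances.get(receiver, 0) + amount
        return True


ledger = FeeLedger({"spammer": 10, "sink": 0}, fee=1)
# Flooding the ledger with 1,000 zero-value transactions stops once the tokens run out.
sent = sum(ledger.submit("spammer", "sink", 0) for _ in range(1000))
print(sent)  # 10
```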

4. Sequence

In a Blockchain environment, all blocks are arranged in a particular sequence, i.e., serially. There is no such constraint in DLTs, where records can be organized in different ways.

5. Efficiency

A DLT can often complete significantly more transactions per minute than a public Blockchain, so it can deliver higher efficiency at a lower cost than Blockchain-based solutions.

As a result, various Blockchain development companies today are looking to enter the DLT ecosystem.
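The throughput ceiling of a chain of blocks can be estimated with simple arithmetic: it is capped by how many transactions fit in a block and how often a block is added. The figures below are illustrative assumptions, not measurements: a block interval of 600 seconds (the target Bitcoin uses) and 2,400 transactions per block.

```latex
\text{throughput} = \frac{\text{transactions per block}}{\text{block interval (s)}} \times 60\ \tfrac{\text{s}}{\text{min}}
= \frac{2400}{600} \times 60 = 240\ \text{transactions per minute}
```

Ledgers that do not serialize every transaction through one chain of blocks are not bound by this particular ceiling, which is one reason DLTs can reach higher rates.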

6. Trustability

Another factor you can consider to see the difference between Blockchain and DLT is trustability.

In the case of DLT, trust among participating nodes is high, and it is even higher when a corporation builds its own internal blockchain or organizes a consortium. On the other hand, censorship resistance in this model is low, since such networks can be centralized and/or private.

However, it is not so in the case of Blockchain.

In a public Blockchain ecosystem, censorship resistance is very high, since in principle each participant’s computing power counts as a vote (“one CPU, one vote”). However, as mining power becomes progressively concentrated in the hands of fewer decision makers with more powerful hardware, trust in the network decreases.
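Why concentration matters can be illustrated with the majority (“51%”) threshold: a coalition controlling more than half of the hash power could censor or reorder transactions. The sketch below finds the fewest pools whose combined share crosses that line. The pool names and shares are hypothetical, not real measurements.

```python
from typing import Dict, List


def smallest_majority_coalition(shares: Dict[str, float]) -> List[str]:
    """Return the fewest pools whose combined hash-power share exceeds 50%."""
    coalition: List[str] = []
    total = 0.0
    # Taking the largest pools first minimizes the number of pools needed.
    for pool, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
        coalition.append(pool)
        total += share
        if total > 0.5:
            return coalition
    return coalition  # no majority reachable: all listed pools together stay at or below 50%


# Hypothetical shares; the remaining 12% is assumed to be spread across many small miners.
shares = {"pool_a": 0.30, "pool_b": 0.25, "pool_c": 0.15, "pool_d": 0.10, "pool_e": 0.08}
print(smallest_majority_coalition(shares))  # ['pool_a', 'pool_b']
```

The fewer pools it takes to cross 50%, the fewer parties would have to collude, which is the sense in which concentration lowers trust.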

7. Security

To control their accounts and funds on a blockchain, users have to employ a private key. If they lose the key, they lose access to their account and funds. A real-life example is the loss of access to nearly $145M in bitcoins and other digital assets after the death of a cryptocurrency exchange CEO.

Such situations are less likely in the case of DLT, since the data is distributed, encrypted, and synchronized across multiple nodes.

8. Real-life implementations

When comparing Blockchain and Distributed Ledger Technology in terms of real-life implementations, Blockchain leads the way.

This is because many entrepreneurs are gradually understanding the nature of Blockchain and integrating it into their traditional business models to gain an advantage. In fact, well-known brands such as Amazon, IBM, Oracle, and Alibaba have started offering Blockchain as a Service (BaaS) solutions.

When it comes to Distributed Ledger Technology, however, DLT enthusiasts and application developers have only begun to explore the core of the technology. They are looking for different use cases of DLT, but there are not yet many significant real-life implementations.

That covers what you should know when comparing Blockchain and DLT. If you have more questions or are unsure which one to invest in, feel free to connect with our Blockchain consultants.