In short, functional programming is a catch-all term for a way of writing code that is focused on composing pure functions, actually using the innovations in type systems made in the last few decades, and overall being awesome.

So what’s the point? All of these things help us better understand what actually happens in our code.

And, once we do that, we gain:

- better maintainability for the codebase;

- safer, more reliable, more composable code;

- the ability to manage complexity with abstractions that are borderline wizardry.

You’re a functional programmer, Harry.

As it is, functional programming is ideal for developing code for distributed systems and complex backends, but that isn’t all it can do. At Anteelo, we use it for most of our industry projects. Whether you need frontend or backend doesn’t matter: there is an FP language for everything nowadays.

Now that you are stoked about learning more about functional programming and have already ordered your copies of Programming Haskell on Amazon, let’s delve deeper into the details.

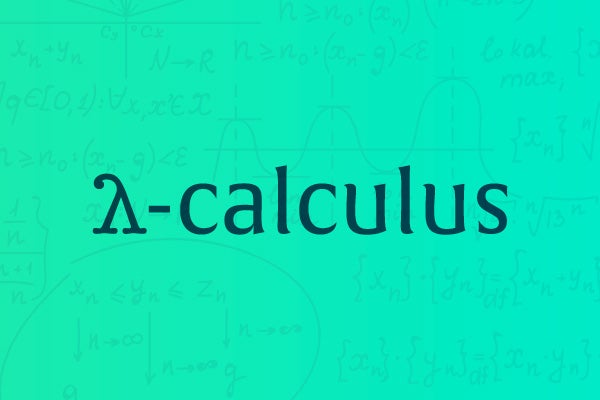

At the heart of functional programming is lambda calculus.

Introduced by the mathematician Alonzo Church in the 1930s, lambda calculus is just a way of expressing how we compute something. If you understand this one, you will gain a lot of intuition on how functional programming looks in practice.

There are only three elements in lambda calculus: variables, functions, and the application of functions to arguments. Here we have to think of a function as a pure (mathematical) function: a way of mapping members of a set of inputs to members of a set of outputs.

Even though it is a very simple tool, we can compose different functions and, in that way, encode any computation possible with a regular computer. (It would get unwieldy fast for anything non-trivial though, and that’s why we don’t program in it.)
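To make that claim a bit more concrete, here is a minimal sketch of Church numerals in Python: numbers and arithmetic built from nothing but single-argument functions. The names (`zero`, `succ`, `add`, `mul`, `to_int`) are my own choices for illustration, and `to_int` steps outside the calculus purely so we can print the result.

```python
# Church numerals: the number n is "apply a function f n times".
# Every name below is illustrative; only variables, functions and
# application are used to build the numbers themselves.

zero = lambda f: lambda x: x                      # apply f zero times
succ = lambda n: lambda f: lambda x: f(n(f)(x))   # apply f one more time
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))
mul = lambda m: lambda n: lambda f: m(n(f))


def to_int(n):
    """Decode a Church numeral by counting how often it applies f.

    This helper uses ordinary Python integers and is not part of the
    lambda calculus encoding; it exists only to inspect results.
    """
    return n(lambda k: k + 1)(0)


one = succ(zero)
two = succ(one)
three = add(one)(two)

print(to_int(three))            # 3
print(to_int(mul(two)(three)))  # 6
```

Even this tiny example shows why nobody writes production code this way: it works, but reading it is another matter.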

To further illustrate the concept, I refer you to this video of an absolute madman implementing lambda calculus in Python.

In the 1950s and 1960s, people began to encode this notion into programming languages. A good example is LISP, a kind of functional language designed by John McCarthy that keeps the overall incomprehensibility of lambda calculus while actually enabling you to do some things.

Example implementation of A* search algorithm in Racket (a dialect of LISP).

But that was only the beginning. One thing led to another, and languages such as ML and Miranda explored numerous variations on the idea, adding readability and a great type system along the way. As a result, the late 1980s saw the start of work on something beautiful: Haskell, a programming language so great that it was destined to evade the mainstream for the next 30 years.

The same A* algorithm in Haskell.

We’ll return to Haskell later.

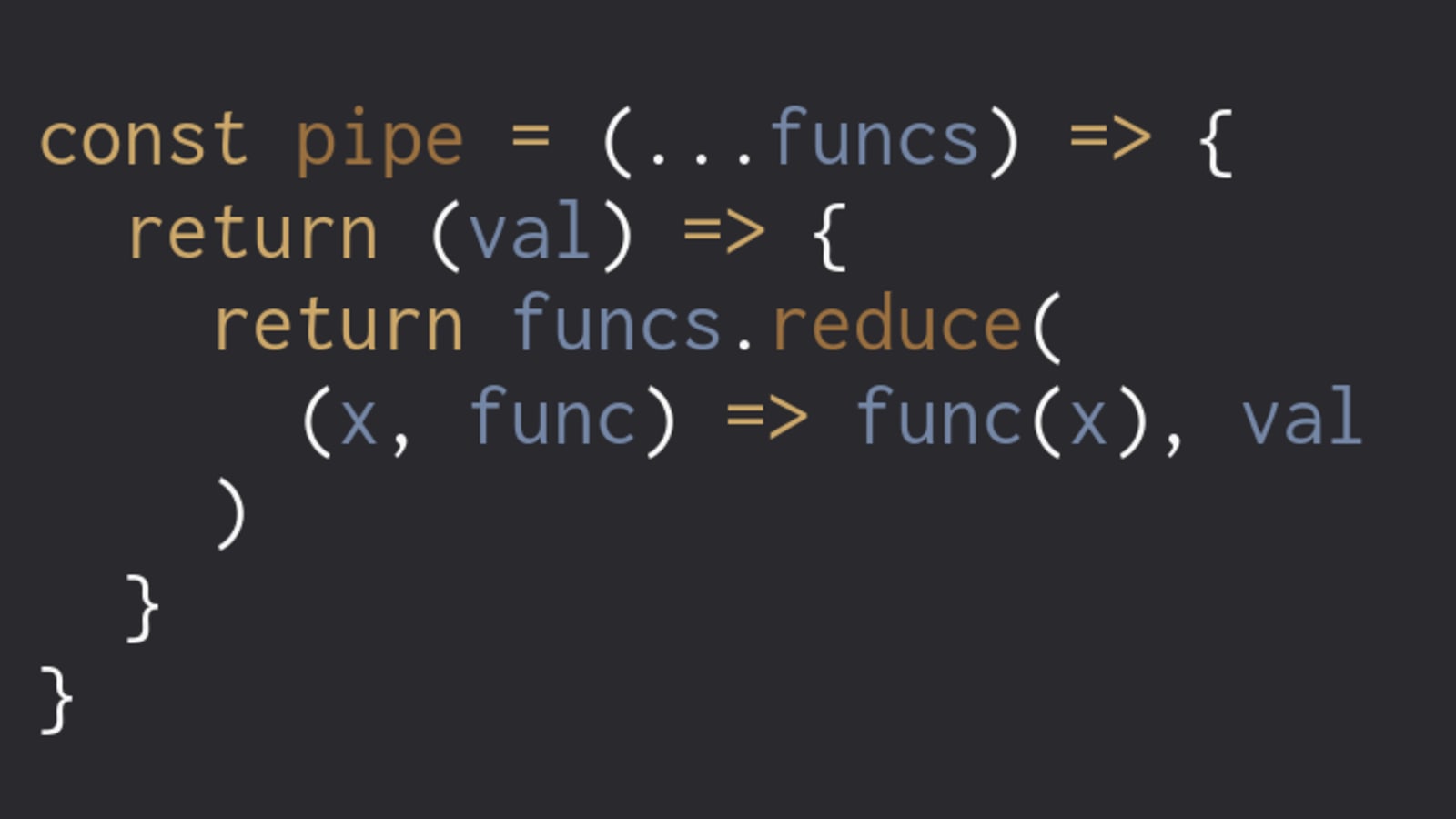

Ok, I hope that gave you some intuition for what pure functions, and chaining pure functions, look like. What else is there?

- Immutability. This follows from pure functions. If the function has an input and gives an output, and doesn’t maintain any state, there can be no mutable data structures. Forget i++. This is for the better. Mutable data structures are a sword that looms over the developer’s head, waiting to fall at any moment. Immutability also helps when the underlying code needs to be thread-safe and therefore is a huge boon in writing concurrent/parallel code.

- All kinds of ways to handle functions. Anonymous functions, partially applied functions, and higher-order functions – these you can get in all modern programming languages. The main benefit is when we go higher up the abstraction ladder. We can introduce various kinds of design patterns such as functors, monads, and whatever-kinds-of-morphisms that we port right from category theory, one of the most powerful tools of mathematics, because… get it? Our code is a composition of mathematical functions.
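Both points in the list above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `Account` type, `deposit`, and `compose` are hypothetical names, and a frozen dataclass is just one way to approximate immutable data in a language that does not enforce it by default.

```python
from dataclasses import dataclass, replace
from functools import partial, reduce


@dataclass(frozen=True)          # frozen: fields cannot be reassigned
class Account:
    owner: str
    balance: int


def deposit(amount, account):
    """Pure function: returns a new Account and leaves the input untouched."""
    return replace(account, balance=account.balance + amount)


def compose(*functions):
    """Higher-order function: compose(f, g)(x) == f(g(x))."""
    return reduce(lambda f, g: lambda x: f(g(x)), functions, lambda x: x)


add_ten = partial(deposit, 10)                    # partially applied function
add_thirty = compose(add_ten, add_ten, add_ten)   # built purely by composition

before = Account("harry", 100)
after = add_thirty(before)

print(before.balance, after.balance)  # 100 130
```

Note that `before` is still intact after the call. Trying `before.balance = 0` would raise an error instead of silently changing shared data.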

There is a chance you stopped at immutability and thought: how can we accomplish anything without maintaining a global state? Isn’t it extremely awkward? Nope. We just pass the relevant state through the functions.

While it may seem unwieldy at first (and that is more because it is a new style of programming, not because of inherent complexity), functional programming abstractions help us to do it easily. For example, we can use special constructions such as the state monad to pass state from function to function.
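A rough Python sketch of the idea follows. It is not how Haskell's actual `State` type is implemented, just the same shape: a stateful step is modelled as a function from a state to a pair of result and new state, and a small helper threads the state along. The names `unit`, `bind`, `push`, and `pop` are assumptions made for this example.

```python
# A "stateful computation" is a function: state -> (result, new_state).
# The state here is an immutable tuple used as a stack.


def unit(value):
    """Wrap a plain value without touching the state."""
    return lambda state: (value, state)


def bind(computation, next_step):
    """Run computation, pass its result to next_step, thread the state through."""
    def run(state):
        result, new_state = computation(state)
        return next_step(result)(new_state)
    return run


def push(item):
    """Return a computation that puts item on top of the stack."""
    return lambda stack: (None, stack + (item,))


def pop(stack):
    """Computation that removes and returns the top of the stack."""
    return (stack[-1], stack[:-1])


# Push 1 and 2, pop both, and return their sum. No variable is ever mutated.
program = bind(push(1), lambda _:
          bind(push(2), lambda _:
          bind(pop,     lambda a:
          bind(pop,     lambda b:
          unit(a + b)))))

initial = ()
result, final = program(initial)
print(result, final)  # 3 ()
```

The nested lambdas are exactly the boilerplate that Haskell's `do` notation hides, which is a large part of why this style feels lighter there than it looks here.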

As you can see, functional programming concepts synergize well with each other. In the end, we have a self-consistent paradigm that is wonderful for any project where you want to include an element of it.

I’ve been holding back on the greatest thing, though.

Did you know that a lot of smart people are doing Haskell & Co nowadays? Functional programming is a great way to gather/meet unappreciated talent that hasn’t yet been devoured by the corporate clutches of FAANG.

We know this from experience. Our engineers are badass, and not only on our team page.

So if there is a project you want to kick off, and you want to kick it off with a team that will rock your socks off, I will list a few functional programming languages with which to attract next-level developers.

Haskell was developed back in times far, far away when the FP community faced the situation of there being too many goddamn functional programming languages with similar properties. Turns out when you bring a lot of smart people together, something can happen. But more about that in our Haskell history post.

Since then, Haskell has established itself in certain fields, such as:

- Finance

- Biotech

- Blockchain

- Compilers & DSLs

Many large companies have projects of various sizes that use Haskell.

Haskell is a combination of various ideas that, brought together, have created a being of utter (expressive) power:

- Purity. There’s a clear boundary between pure code (composed of pure functions) and impure code (input/output).

- Static typing. Types are checked at compile-time, not at run-time. This prevents a lot of run-time crashes in exchange for having to actually deal with types, which some find difficult.

- Laziness. Expressions are evaluated only when their value is actually needed, in contrast to strict evaluation, where an expression is evaluated as soon as it is bound to a variable.

- Immutability. The data structures are immutable.
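Laziness is the least familiar item on that list, so here is a loose analogy in Python. Python itself is strictly evaluated, so this sketch leans on generators to imitate the behaviour; it is not how Haskell implements laziness. The `evaluated` log and `expensive_square` are hypothetical helpers, and the log is deliberately impure so we can observe what actually ran.

```python
from itertools import count, islice

evaluated = []  # records which inputs were computed (for demonstration only)


def expensive_square(n):
    evaluated.append(n)
    return n * n


# An "infinite" sequence of squares: nothing is computed when this line runs.
squares = (expensive_square(n) for n in count(1))

# Only the three values we ask for are ever evaluated.
first_three = list(islice(squares, 3))

print(first_three)  # [1, 4, 9]
print(evaluated)    # [1, 2, 3]
```

In Haskell, this demand-driven behaviour is the default for ordinary expressions rather than something you opt into with a special construct.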

It’s one of our favourite languages, and for a reason. Haskell, when used correctly, delivers. And what it delivers is precise and effective code that is easy to maintain.

Want to go functional, but would love to spoil it with a couple of classes here and there?

Scala is the right choice for that. For some reason favoured by people that wrote Apache Spark, it can be useful for big data processing, services, and other places where functional programming is amazing.

An additional bonus of Scala is that it compiles to JVM bytecode. If that is what you need as a manager to introduce functional programming to a Java codebase, go you!

Once you start writing purely functional Scala that does not interact with the JVM, though, there are not a lot of reasons not to just switch to Haskell, as the support is much better.

If Haskell is a bit niche, OCaml is super niche with one of the main things holding it above water being local developer support in France.

But perhaps not anymore. For example, like the other programming languages listed, it has seen use in blockchain, particularly in Tezos. And they have their reasons.

OCaml is one of those languages that blur the boundary between functional and object-oriented programming. Therefore, using OCaml over Haskell might be more intuitive for a new functional programmer. OCaml is less obsessed with purity, and the people who write in it are a bit more practical: you might survive the attack of your fellow developers if you just try to wing it in OCaml.

Did you know that the world’s best web framework is written in a functional programming language? Productive. Reliable. Fast. Yeah.

Elixir is a functional, general-purpose programming language that runs on BEAM, the Erlang VM. It is known for its role in creating low-latency and fault-tolerant distributed systems. Furthermore, it is great at creating stuff that scales according to the needs of the network. Elixir and the Erlang VM it runs on are widely used at companies like WhatsApp and Netflix that handle a lot of data and need to do it fast. You can’t miss this one if you are doing something similar.

You know, I cannot end without a pitch. Functional programming is excellent for extensive systems and structures. However, not every business can devote enough resources to execute such complicated work. Anteelo understands the struggle and aims to deliver the best service possible to ensure smooth and reliable programming projects for your company.

Our developer team provides development services in different languages. We not only write code but also carry projects from the initial idea through to the final stages. This means that we can also do research, design, and other related services for you. Although we work in a wide range of languages, I have to warn you that we mainly use Haskell.