If you are one of the growing number of companies using Big Data, this post will explain the benefits and challenges of migrating to the public cloud and why it could be the ideal environment for your operations.

Cloud-based Big Data on the rise

According to a recent survey by Oracle, 80% of companies are planning to migrate their Big Data and analytics operations to the cloud. One of the main factors behind this is the success these companies have had when dipping their toes into Big Data analytics. Another survey of US companies found that over 90% of enterprises had carried out a Big Data initiative in the previous year and that, in 80% of cases, those projects had highly beneficial outcomes.

Most initial trials with Big Data are carried out in-house. However, many of the companies that find these trials successful want to expand their Big Data operations and see the cloud as the better option. This is because the IaaS, PaaS and SaaS solutions offered by cloud vendors are much more cost-effective than developing in-house capacity.

One of the issues with in-house Big Data analysis is that it frequently involves the use of Hadoop. Whilst Apache's open-source software framework has revolutionized Big Data storage and processing, many in-house teams find it very challenging to use. As a result, many businesses are turning to cloud vendors who can provide Hadoop expertise as well as other data processing options.

The benefits of moving to the public cloud

One of the main reasons for migrating is that public cloud Big Data services provide clients with essential benefits. These include on-demand pricing, access to data stored anywhere, increased flexibility and agility, rapid provisioning and better management.

On top of this, the scalability of the public cloud makes it ideal for handling Big Data workloads. Businesses can provision all the storage and computing resources they need almost instantly and pay only for what they use. The public cloud can also offer stronger security, which creates a better environment for compliance.

Software as a service (SaaS) has also made public cloud Big Data migration more appealing. By the end of 2017, almost 80% of enterprises had adopted SaaS, a rise of 17% from 2016, and over half of these use multiple data sources. As the bulk of their data is already stored in the cloud, it makes good business sense to analyse it there rather than move it back to an in-house data centre.

Another benefit of the public cloud is the falling cost of data storage. While many companies may currently think that storing Big Data over a long period is expensive compared with in-house storage, advances in technology are already bringing costs down, and this trend is set to continue. At the same time, the public cloud's ability to process larger volumes of data at higher speeds keeps improving.

Finally, the cloud enables companies to leverage other innovative technologies, such as machine learning, artificial intelligence and serverless analytics. Because these technologies are developing so quickly, companies that are late to adopt Big Data in the public cloud can find themselves at a competitive disadvantage: by the time they migrate, their competitors are already eating into their market.

The challenge of moving Big Data to the public cloud

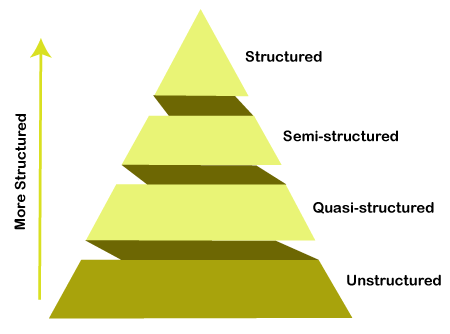

Migrating huge quantities of data to the public cloud does raise a few obstacles. Integration is one such challenge. A number of enterprises find it difficult to integrate data that is spread across many different sources, while others have found it challenging to integrate cloud data with data stored in-house.

Workplace attitudes also pose a barrier to migration. In a recent survey, over half of respondents said that internal reluctance, incoherent IT strategies and other organizational problems had created significant obstacles to their plans to move Big Data initiatives to the public cloud.

There are technical issues to overcome too, particularly data management, security and the integration challenges mentioned above.

Planning your migration

Before starting your migration, it is important to plan ahead. If you intend to move your Big Data analyses fully to the public cloud, the first step is to stop investing in in-house capabilities and focus on developing a strategic migration plan, beginning with the projects that are most critical to your business development.

Moving to the cloud also gives you scope to improve on what you already have. For this reason, don't plan to make your cloud infrastructure a direct replica of your in-house setup. Migration is the ideal opportunity to build something from the ground up that will deliver even more benefits than you currently have, redesigning your solutions so they can take advantage of everything the cloud offers, such as automation, AI and machine learning.

Finally, you need to decide on the type of public cloud service that best fits your current and future needs. Businesses have a range of cloud-based Big Data services to choose from, including software as a service (SaaS), infrastructure as a service (IaaS) and platform as a service (PaaS); you can even get machine learning as a service (MLaaS). Which level of service you opt for will depend on a range of factors, such as your existing infrastructure, compliance requirements, Big Data software and in-house expertise.

Conclusion

Migrating Big Data analytics to the public cloud offers businesses a raft of benefits: cost savings, scalability, agility, increased processing capabilities, better access to data, improved security and access to technologies such as machine learning and artificial intelligence. Whilst migration does pose obstacles that need to be overcome, the ability to analyze Big Data in the cloud gives companies a competitive edge right from the outset.