Recent headlines have been full of news about major healthcare mergers and acquisitions. Some involve newcomers to the industry, while others are driving a convergence of traditional payers, providers and pharmacy benefit management companies.

Here are some of the latest examples in the changing healthcare scene:

CVS Health, a large pharmacy benefit manager, is purchasing Aetna, a large insurer, while Cigna, another large insurer, is acquiring Express Scripts, another pharmacy benefit manager.

Meanwhile, tech giants Amazon and Apple have taken big steps into the healthcare fray. Amazon entered into a joint venture with Berkshire Hathaway and JPMorgan Chase, an effort by all three companies to control their employee healthcare costs. Amazon also purchased PillPack, an online pharmacy, and expects to expand its services after obtaining state licenses. Apple signaled its intent to shake up the healthcare status quo by expanding its personal health record system, its partnerships with hospitals and its A.C. Wellness centers, all with the goal of gaining greater influence over healthcare consumption.

The convergence moves the industry away from the traditional separation of payers (health insurance companies and self-insured employers) and providers. Typically, payers are defined as the organizations that conduct actuarial analysis and manage financial risk by collecting premiums and paying for services delivered. Providers, meanwhile, have typically been defined as the healthcare practitioners and organizations that deliver and bill for services, including inpatient, outpatient, elective and emergency care.

Those narrow definitions have been shaken up in the post-Affordable Care Act (ACA) world. In the past, the focus was on fee-for-service and capitated contracts, under which HMOs or managed care organizations paid providers a fixed amount per member. The ACA shifted the emphasis to value-based care, pushing more financial risk onto providers and away from payers. As a result, insurers and providers need to consider how they manage pre-existing conditions and how they use risk scoring to determine the likely needs of their patients, because their approach can make the difference between profitability and loss.

In this new and complex environment, mergers and acquisitions are seen as a way for both providers and payers to build up their capabilities and respond to the need to enhance patient care, improve population health and reduce costs.

For traditional healthcare incumbents, we believe this also means using a “secret” weapon non-traditional players already leverage: data analytics.

Better data and analytics life cycle management can yield the insights payers and providers need to balance their priorities and deliver value-based care.

How to balance risk and patient outcomes

But first, what do all of these changes entail, and how do they take providers and payers beyond their narrower definitions?

In the post-ACA world, providers are looking to take on more financial risk as their actuarial capabilities improve. This would allow them to negotiate more effectively with payers, meeting care outcome objectives while balancing reimbursement and risk.

Payers, meanwhile, are acquiring doctors' offices and other providers, or merging with retail clinics and other points of care, to unite care delivery with financial risk management. To accomplish these goals, payers need to take a more active role in managing the healthcare professionals they employ as well as the patients who visit those practitioners. Having access to the care delivery setting also allows for more accurate risk assessment.

Managing these activities, for both the provider and the payer, needs to go beyond financial management. It must include operational excellence, using robust data analytics to communicate with the people and organizations delivering care. It also requires performance-level agreements and bidirectional communication to measure and monitor reasonable objectives set jointly by payer and provider. Indeed, collaboration and communication will be crucial to overcoming the tensions that are building as providers try to deliver on value-based contracts. Integrating insights from back-end systems will help ensure that both the payer and provider perspectives are understood.

Use data to your advantage

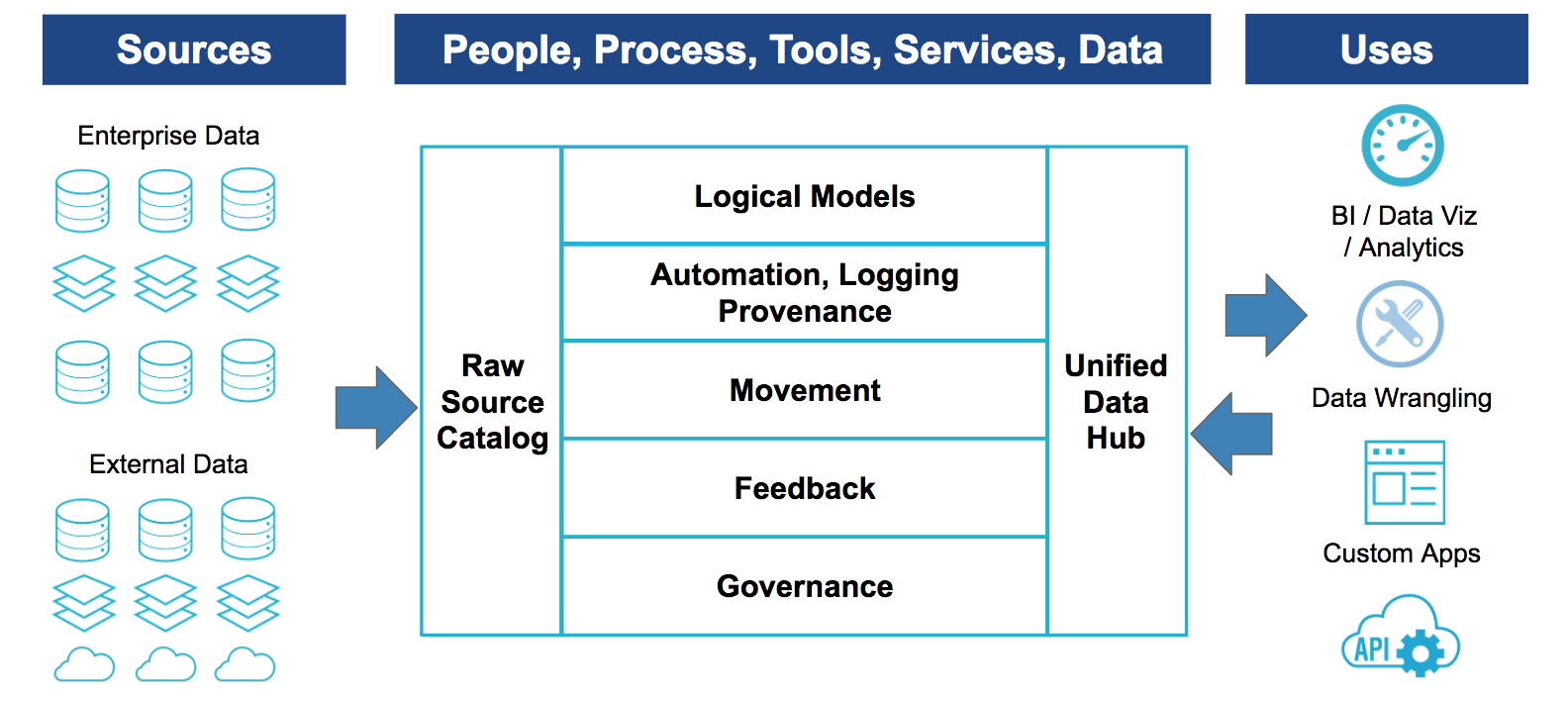

Balancing the needs of the provider and the payer, while prioritizing the needs of the patient, will require change management and deeper insight into what works, what doesn't and how outcomes for all stakeholders can be adjusted and improved. Those insights must be based on hard data, which in turn requires more robust data, analytics and IT infrastructure. Organizations will need to deploy data and analytics life cycle management, covering input, ingestion, management, storage and data utility. Integrated workflows make it easier to collect better, more complete encounter data, improving how providers work and increasing both provider and patient satisfaction.

That data needs to encompass all parts of the healthcare continuum, meaning patient experience data as well as provider and payer data. For this to happen, payers and providers must improve consumer engagement by encouraging patients to take charge of their own care and by using the data patients provide to sharpen their insights. Seeing the patient's end-to-end experience allows organizations to adjust each part of that experience accordingly.

Brave new healthcare environment

This brings us full circle to the changing industry dynamics and the entry of non-traditional players into the healthcare arena. Big tech players such as Amazon, Apple and Alphabet already know how to leverage data analytics to gain customer insights. As healthcare incumbents build and acquire assets, they will need to match these capabilities and build on their own strengths to ensure they aren't left behind in this brave new healthcare environment.