5 Ways Google Analytics Can Improve Your Website

Google Analytics is one of the most valuable free tools available for website owners. It provides detailed data about traffic and visitors, which you can use to evaluate how your content performs and how well it attracts new visitors. In this post, we’ll look at some of the key metrics Google Analytics provides and show how they can be used to improve your website.

1. Use traffic data to identify under-performing channels

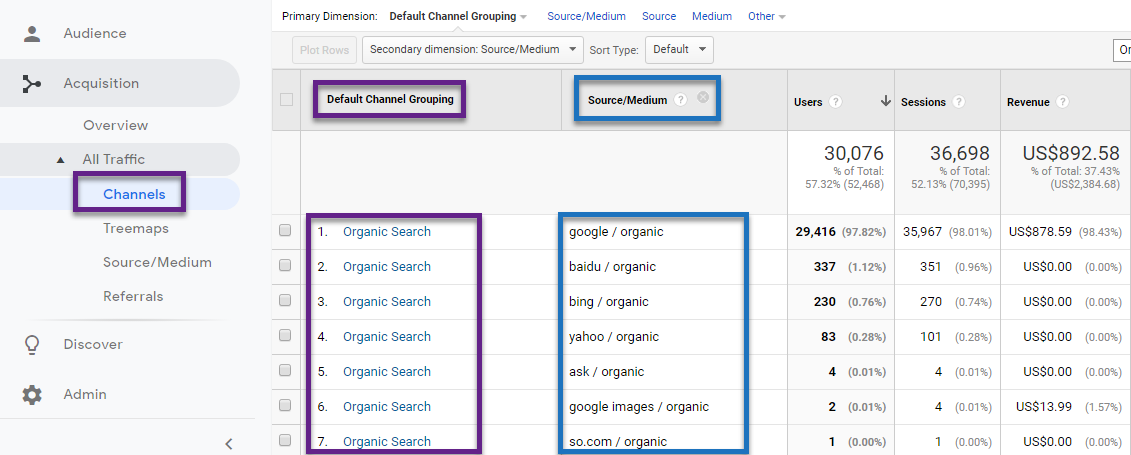

Google Analytics’ acquisition data shows you how much traffic you have acquired from each of the different channels. These are organic traffic, i.e., visitors who have found you through search engines; direct traffic, i.e., visitors who typed your URL into their browser; referral traffic, i.e., those who have clicked on links on other sites; and social traffic, i.e., those who have come from social media platforms.

It is also possible to analyse these sources more deeply. For example, you can check your social media data to see whether you got more traffic from Facebook, Twitter or Instagram, or see how much organic traffic comes from Google, Bing and Yahoo. You can also look at the medium that visitors use to find your site. This can tell you about the performance of your advertising campaigns by identifying the ads that send you the most traffic.

With all this valuable information at your fingertips, it is much easier to understand where your website’s strengths and weaknesses lie. You might, for example, find that you perform well on search engines but that you need to put more effort into increasing your social media traffic.
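If you export or copy the channel totals out of the acquisition report, a few lines of Python can turn them into shares and flag the weaker channels. This is only a rough sketch: the session counts, the function names and the 10% threshold are all made up for illustration and are not part of Google Analytics.

```python
# Hypothetical session counts per channel, e.g. copied from an acquisition report.
sessions_by_channel = {
    "organic": 5200,
    "direct": 2100,
    "referral": 900,
    "social": 300,
}


def channel_shares(sessions):
    """Return each channel's share of total sessions as a percentage."""
    total = sum(sessions.values())
    if total == 0:
        return {}
    return {channel: 100 * count / total for channel, count in sessions.items()}


def underperforming(sessions, threshold_pct=10.0):
    """List channels whose share falls below an arbitrary, illustrative threshold."""
    shares = channel_shares(sessions)
    return [channel for channel, share in shares.items() if share < threshold_pct]


shares = channel_shares(sessions_by_channel)
# Print the channels from largest to smallest share.
for channel, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{channel:<10}{share:5.1f}%")
print("Below threshold:", underperforming(sessions_by_channel))
```

With these sample figures, only the social channel falls below the threshold, which mirrors the example above of a site that does well in search but needs more social traffic. The right threshold depends entirely on your own goals.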

2. Find which pages get the most visitors

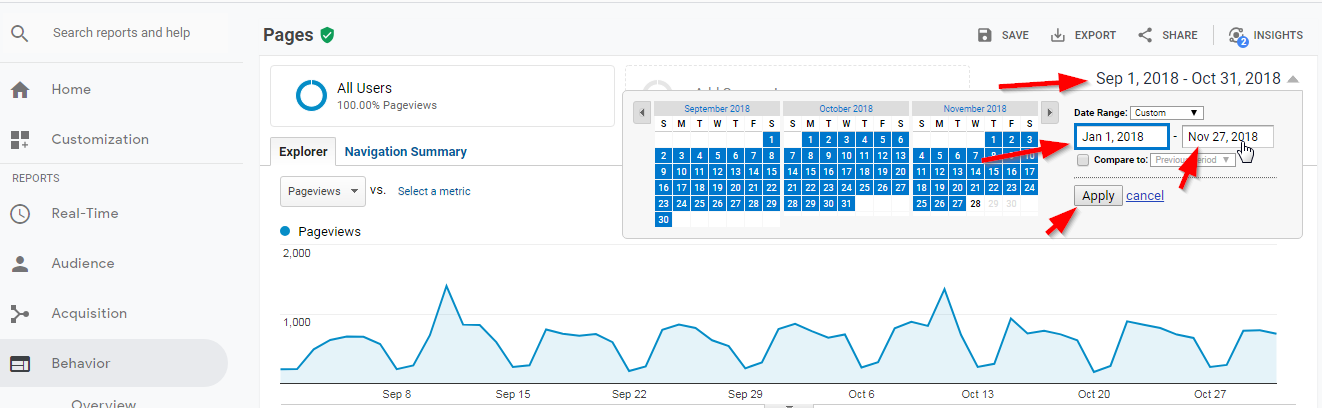

Just as important as understanding where your visitors come from is knowing which pages they go to on your website. Using the behaviour report, you can see this in detail.

By looking at the Site Content > All Pages data, you’ll get a ranked list showing which pages got the most visits over your chosen timescale. You can also drill down further by using the ‘secondary dimension’ tool to discover where the visitors to each page come from.

The importance of this data is that it enables you to get a better understanding of your website’s content. For example, if pages are not getting much organic traffic, it hints that you might need to look at your SEO or rewrite the content to make it more useful to your visitors. Looking at your most successful content and figuring out why it attracts traffic well can help you make improvements across your site.
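The same ranking and source breakdown can be reproduced outside the report if you export the page data with a source column. The sketch below assumes a hypothetical export of (page, source, page views) rows; the page paths, numbers and function names are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical rows of (page path, traffic source, page views).
rows = [
    ("/", "organic", 1200),
    ("/", "social", 150),
    ("/blog/seo-tips", "organic", 900),
    ("/blog/seo-tips", "referral", 100),
    ("/services", "direct", 400),
    ("/services", "organic", 40),
]


def rank_pages(rows):
    """Return (page, total views) pairs, most viewed first."""
    totals = Counter()
    for page, _source, views in rows:
        totals[page] += views
    return totals.most_common()


def organic_share(rows):
    """Return the fraction of each page's views that came from organic search."""
    totals = defaultdict(int)
    organic = defaultdict(int)
    for page, source, views in rows:
        totals[page] += views
        if source == "organic":
            organic[page] += views
    return {page: organic[page] / totals[page] for page in totals if totals[page]}


for page, views in rank_pages(rows):
    print(f"{page:<18}{views:>6} views, {organic_share(rows)[page]:.0%} organic")
```

In this sample, `/services` gets only a small fraction of its views from organic search, so it would be the first candidate for an SEO or content review.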

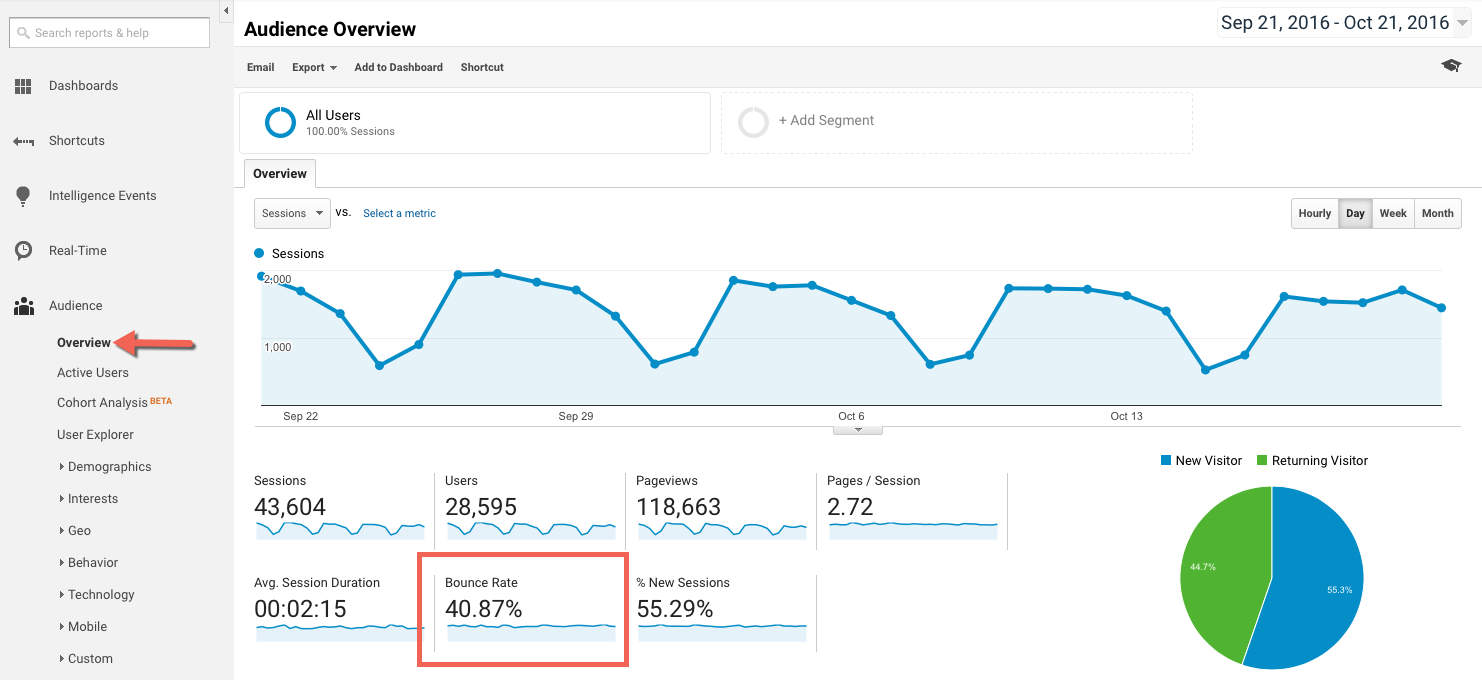

3. How low is your bounce rate?

The bounce rate is the term used to describe the percentage of visitors who only visit one page before leaving. Whilst no web page will ever get a 0% bounce rate, some types of pages, such as product pages, are more likely to get high bounce rates. If someone wants something specific, they’ll quickly scoot off back to Google if they don’t find what they’re looking for.

High bounce rates, however, are a cause for concern, especially on your homepage or key landing pages. If this is the case, it’s an indication that you may need to make improvements to the content or the design in order to get visitors to move to other parts of your website.

It could be that your content is not relevant, that the page isn’t attractive or easy to read, that there are annoying popups, or that the page loads too slowly for the user to hang around. Whilst Google Analytics cannot tell you what the problem is, it’s very good at showing that there is a problem.
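To make the definition concrete, here is a minimal sketch of the bounce rate calculation as described above: the share of sessions in which only one page was viewed. The session data and page paths are hypothetical, and Google Analytics does this calculation for you; the code is only meant to show what the percentage represents.

```python
def bounce_rate(pages_per_session):
    """Percentage of sessions in which exactly one page was viewed."""
    if not pages_per_session:
        return 0.0
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100 * bounces / len(pages_per_session)


# Hypothetical data: for each landing page, the number of pages viewed
# in every session that started on that page.
sessions_by_landing_page = {
    "/": [1, 3, 1, 5, 2, 1, 1, 4],
    "/pricing": [1, 1, 1, 2, 1],
}

for page, sessions in sessions_by_landing_page.items():
    print(f"{page:<10}{bounce_rate(sessions):5.1f}% bounce rate")
```

Here the homepage has four bounces out of eight sessions and the pricing page has four out of five, so the pricing page would be the one to investigate first.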

4. Find issues by analysing session data

Two other great metrics that Google Analytics provides you with are the average number of pages per session and the average time on page.

The pages per session data show you how many pages the average user visits when they land on your website. Depending on the nature of your site, you’ll have an idea of how many pages you would like each visitor to see. If you have an eCommerce site or blog, for example, you’ll want a visitor to visit lots of pages; if your site has only a couple of service pages then, obviously, you’ll be looking at a smaller figure.

The importance of this data is that it will tell you if you are meeting your optimum figure. If you sell a hundred different types of men’s shoes and the average visitor only looks at two or three pages, then that could indicate a range of issues: poor product selection or availability, high prices, lack of detailed product information, etc. Further drilling down may point to a more precise answer.

The time on page data (found in the behaviour section) tells you how long the average visitor stays on each page. This can be very useful in understanding how well visitors engage with your content and whether they actually read all the pages. If you know it takes three or four minutes to read the page and that the average visitor only spends 30 seconds, then it is obvious that there is something stopping your content from getting read. It could indicate boring or badly written content, information being hard to find or something off-putting being mentioned partway through.
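Both session metrics are simple averages, and comparing them with your own targets takes only basic arithmetic. The sketch below uses invented totals and an assumed reading time of three and a half minutes; the function names are hypothetical and the numbers are not taken from any real report.

```python
def pages_per_session(total_pageviews, total_sessions):
    """Average number of pages viewed in a session."""
    return total_pageviews / total_sessions if total_sessions else 0.0


def read_ratio(avg_seconds_on_page, expected_read_seconds):
    """Fraction of the expected reading time that visitors actually spend."""
    if expected_read_seconds <= 0:
        raise ValueError("expected_read_seconds must be positive")
    return avg_seconds_on_page / expected_read_seconds


# Hypothetical totals for a chosen period.
average_pages = pages_per_session(total_pageviews=12600, total_sessions=4200)
print(f"Pages per session: {average_pages:.1f}")

# A page assumed to take 3.5 minutes (210 seconds) to read,
# with an average time on page of 30 seconds.
ratio = read_ratio(avg_seconds_on_page=30, expected_read_seconds=210)
print(f"Visitors spend {ratio:.0%} of the expected reading time")
```

A ratio this far below 100% is the kind of gap described above: visitors are leaving long before they could have read the page.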

5. Use behaviour flow to discover conversion barriers

If you run an online business, there will be a sales pathway that you want your customers to take as they go through your website. For example: homepage > product category page > individual product page > shopping basket > order details > payment page.

Using Google Analytics’ behaviour flow tool, you will be able to see how visitors actually move through your site: where they land, what pages they visit as they move and where they exit the site. You’ll also see what proportions move from A to B to C, etc., so that you’ll understand the drop-off rates at each stage of the buying process.

Although it is natural to see a drop-off of visitor numbers as they head towards the payment page, one of the biggest benefits of this tool is that it clearly shows where the biggest drop-off points are. Understanding where these are can help you eradicate barriers to sales or other goals. For example, if you have a large drop-off between the order details and payment page, it could be that you have an issue with the checkout process. Perhaps you are asking for too much information or your delivery pricing is not clear.

Although it is up to you to determine the cause, the data will tell you if there is an obstacle at that point in the process that prevents users from completing the sale. Removing that obstacle is a clear way to improve your conversion rates.
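As a rough illustration of how drop-off rates work, the sketch below takes hypothetical visitor counts for each stage of the example pathway and calculates the percentage lost between consecutive stages. The numbers and the function name are invented; in practice the behaviour flow tool shows these proportions for you.

```python
# Hypothetical visitor counts at each stage of the sales pathway.
funnel = [
    ("homepage", 10000),
    ("product category page", 6000),
    ("individual product page", 3000),
    ("shopping basket", 900),
    ("order details", 600),
    ("payment page", 150),
]


def drop_offs(funnel):
    """Return (from_step, to_step, percentage lost) for each consecutive pair of stages."""
    results = []
    for (step, visitors), (next_step, next_visitors) in zip(funnel, funnel[1:]):
        lost_pct = 100 * (visitors - next_visitors) / visitors if visitors else 0.0
        results.append((step, next_step, lost_pct))
    return results


steps = drop_offs(funnel)
for step, next_step, lost_pct in steps:
    print(f"{step} > {next_step}: {lost_pct:.1f}% drop-off")

# The stage with the largest percentage loss is the first place to look for a barrier.
worst = max(steps, key=lambda item: item[2])
print(f"Biggest drop-off: {worst[0]} > {worst[1]}")
```

With these sample figures, the biggest loss is between the order details and payment page, which is the checkout scenario described above.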

Conclusion

Google Analytics is a fantastic tool for helping businesses improve their websites. It’s not designed to give all the answers, but it does provide an insight into where traffic comes from and how visitors behave when on-site. From this, you can understand what is working well and learn which areas need to be improved upon.