Enterprise clients have looked to automate IT support for several years. With millions of employees across the globe now working from home, support needs have increased dramatically, and many unprepared enterprises are suffering from long service desk wait times and unhappy employees. Many companies may already have been moving gradually toward digital solutions to enhance service desk operations, but automating IT support is now a greater priority. Companies can’t afford downtime or the lost productivity caused by inefficient support systems, especially when remote workers need more support than ever before.

Digital technologies offer companies innovative and cost-effective ways to manage increased support loads in the immediate term and to free up valuable time and resources over the long term. The latter benefit is critical, as enterprises increasingly look to their support systems to resolve more sophisticated and complex issues. Rather than disrupting workers, new automated support systems can empower them by freeing them up to focus on high-value work.

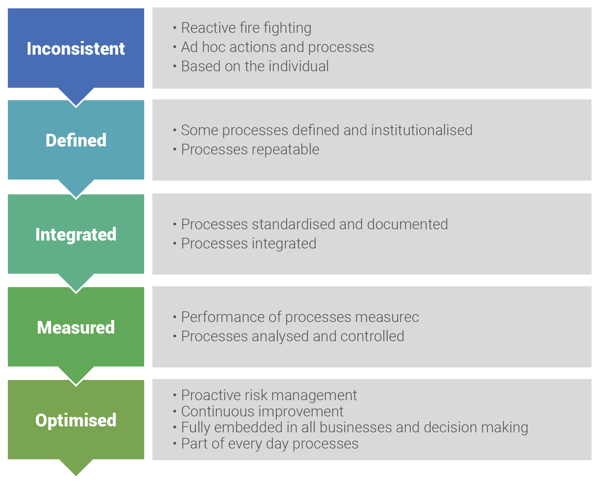

Businesses can start their journey toward digital support by using chatbots to manage common support tasks such as resetting passwords, answering ‘how to’ questions, and processing new laptop requests. Once basic support functions are under digital management, companies can then layer in technologies such as machine learning, artificial intelligence, and analytics.

An IT support automation ecosystem built on these capabilities can enable even greater positive outcomes – like intelligently (and invisibly) discovering and resolving issues before they have an opportunity to disrupt employees. In one recent example, DXC deployed digital support agents to help manage a spike of questions coming in from remote workers. The digital agents seamlessly handled a 20% spike in volume, eliminated wait times, and drove positive employee experiences.

Innovative IT support

IT support automation helps companies serve their employees more proactively, with more innovative support experiences. Here are three examples:

Remote access

In a remote workforce, employees will undoubtedly face issues with new tools they need to use or with connections to the corporate network. An automated system that notifies employees via email or text about detected problems, with personalized instructions on how to fix them, is a new way to care for the remote worker. If an employee still has trouble, an on-demand virtual chat or voice assistant can easily walk them through the fix or, better yet, execute it for them.

Proactive response

The ability to proactively monitor the employee’s endpoint and resolve issues on it (to ensure security compliance, set up effective collaboration, and maintain high performance levels for key applications and networking) has emerged as a significant driver of success when managing the remote workplace.

For example, with more reliance on home internet as the path into private work networks, there’s greater opportunity for bad actors to attack. A proactive support system can continuously monitor for threat events and automatically ensure all employee endpoints are security compliant.

Leveraging proactive analytics capabilities, IT support teams can set up monitoring parameters to match their enterprise needs, identify when events are triggered, and take action to resolve them. The digital support system could then execute automated fixes or send friendly messages to the employee with instructions on how to fix an issue. These measures can go a long way toward eliminating support disruptions and leaving the employee with a sense of being cared for – the best kind of support.
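To make the monitor, trigger, and act loop described above more concrete, here is a minimal sketch in Python. It is illustrative only and does not represent any particular product: the `Rule` class, the `evaluate` function, the rule names, and the endpoint fields (`disk_encrypted`, `free_disk_gb`) are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Rule:
    """A hypothetical monitoring parameter configured by IT support."""
    name: str
    triggered: Callable[[Dict[str, float]], bool]  # True when the event fires
    auto_fix: bool  # True: run an automated fix; False: message the employee


def evaluate(endpoint: Dict[str, float], rules: List[Rule]) -> List[Tuple[str, str]]:
    """Return the (action, rule name) pairs for every rule the endpoint triggers."""
    actions = []
    for rule in rules:
        if rule.triggered(endpoint):
            action = "fix" if rule.auto_fix else "notify"
            actions.append((action, rule.name))
    return actions


# Example rules (assumed thresholds, not recommendations).
RULES = [
    # A security compliance issue is fixed automatically.
    Rule("disk_encryption", lambda e: e.get("disk_encrypted", 1) == 0, auto_fix=True),
    # Low disk space results in a friendly message with instructions.
    Rule("low_disk_space", lambda e: e.get("free_disk_gb", 100.0) < 5.0, auto_fix=False),
]

if __name__ == "__main__":
    laptop = {"disk_encrypted": 0, "free_disk_gb": 2.0}
    for action, rule_name in evaluate(laptop, RULES):
        print(action, rule_name)
```

In a real deployment the "fix" and "notify" actions would call out to endpoint management and messaging tools; here they are just labels so the decision logic stays visible.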

More value beyond IT

Companies are also having employees use automated assistance outside of IT support functions. In HR, for example, these capabilities could help employees fill out time sheets correctly and promptly, or remind them to select a beneficiary for corporate benefits after a major life event like getting married or having a baby.

Remote support can also help organizations automate business tasks. This could include checking on sales performance, getting recent market research reports sent to any device, or booking meetings through a voice-controlled device at home.

More engaged employees

With the power to provide amazing experiences, automated IT support can drive new levels of employee productivity and engagement, which are outcomes any enterprise should embrace.