To help you manage data effectively, here are the five key elements of cloud data management.

The collection of data is essential for today’s businesses: it is what enables them to be innovative and helps them stay agile. The amount of data being used is increasing, too: customer data, product data, competitor data, employee records, system logs, supply chain data, data from bespoke applications and so forth. All this data needs to be easily accessible while, at the same time, remaining highly secure.

Problems with data

The growing volume of data being collected, and its importance for business growth, can cause problems for enterprises. Companies are becoming data hoarders, storing every piece of information they can glean in the hope that one day it will have value for them. The nature of that data is also becoming increasingly complex as companies add new systems, software and devices.

At the same time, it is important to control how employees use data, both to prevent them from unwittingly deleting data that is not essential to them but is critical for the business, and to stop those with a grudge from wiping data deliberately.

To help you manage data effectively so that you can get the right balance between security and ease of access, here are the five key elements of cloud data management.

1. Managing unused data

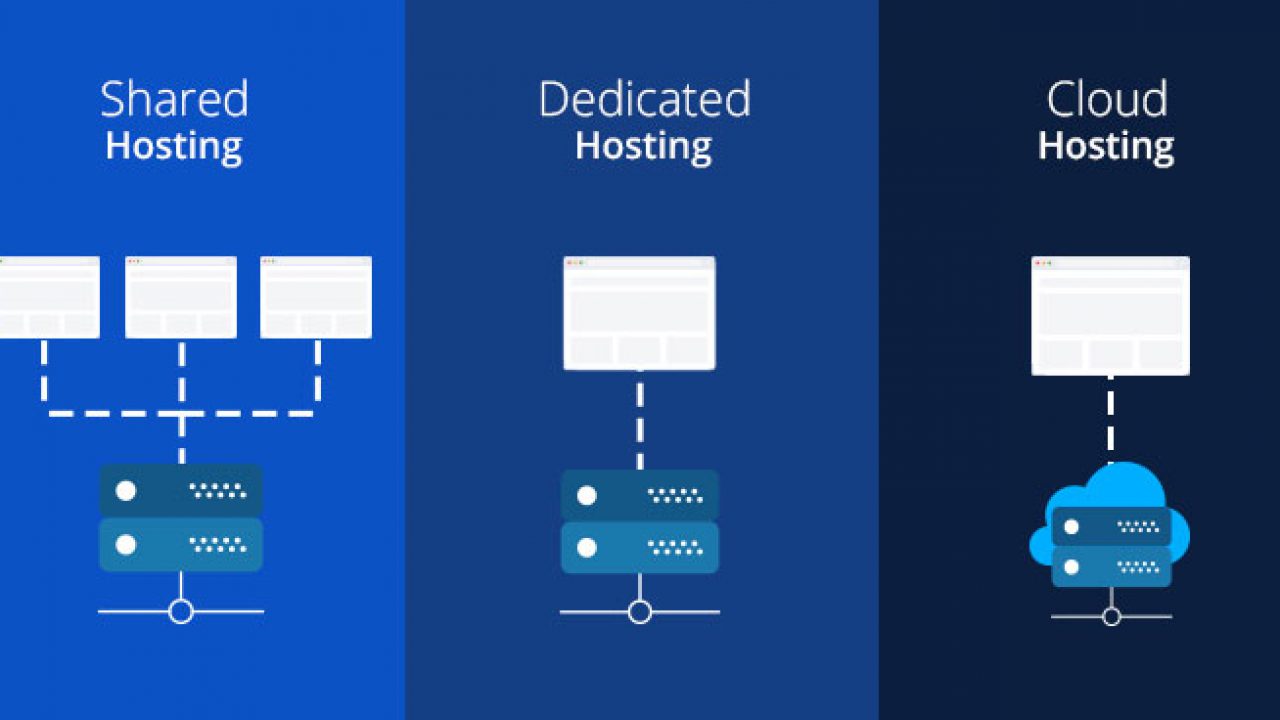

A lot of the data that a company collects won’t be needed all the time. For most of its existence it will be held in storage doing nothing. However, for compliance and other business purposes, it will need protecting. For this reason, it should be behind a firewall and, importantly, be encrypted.

Encrypting unused data ensures that if it is stolen, the perpetrators, or anyone they sell it to, won’t be able to decipher it. This helps protect you not only against hackers but also from employees who make blunders or those with more devious objectives. Often, the weak spots in any system are the devices used by employees. Hackers use these to worm their way into the more valuable parts of a company’s network. Encryption helps prevent this from happening to stored data, especially when access to the decryption key is limited.

2. Controlling access to data

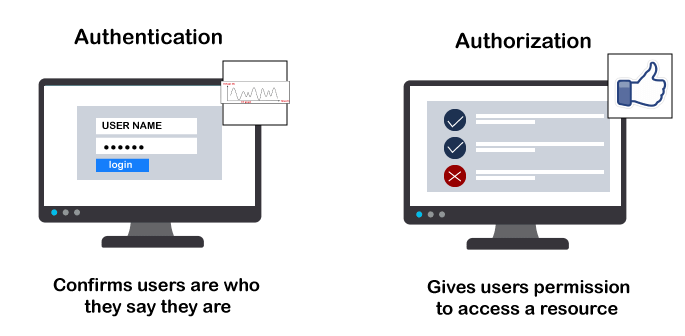

Whilst it is crucial that staff are able to access all the data they need to carry out their roles, it is also vital that you have control over how that data is accessed. The starting point here should be to determine precisely who needs access to what data to carry out their work. From there, you can implement individual access rights that prevent unauthorised users from accessing data they are not entitled to see.

Using logical access control will ensure that anyone trying to access data will be prevented from doing so unless their ID is authenticated. At the same time, such systems will log every data transaction, enabling you to trace issues to their source should problems arise. Indeed, such systems can even check the security of the devices being used to access the data to make sure they are free from malware. With the use of AI, it is now even possible to analyse the behaviour of users and their devices to identify if suspicious activity is taking place.
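As a rough illustration of the idea, the sketch below checks an authenticated user's role against a permissions table and logs every attempt. The role names, dataset names, `User` class and `can_access` function are all hypothetical, invented for this example; a real deployment would normally rely on the access management tools offered by your cloud provider rather than hand-written checks.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("data_access_audit")

# Hypothetical mapping of roles to the datasets each role may read.
ROLE_PERMISSIONS = {
    "finance": {"invoices", "payroll"},
    "support": {"customer_records"},
}


@dataclass(frozen=True)
class User:
    user_id: str
    role: str
    authenticated: bool  # assumed to be set by a separate login step


def can_access(user: User, dataset: str) -> bool:
    """Allow access only to authenticated users whose role covers the dataset."""
    allowed = user.authenticated and dataset in ROLE_PERMISSIONS.get(user.role, set())
    # Record every attempt, allowed or not, so problems can be traced later.
    audit_log.info(
        "user=%s role=%s dataset=%s allowed=%s",
        user.user_id, user.role, dataset, allowed,
    )
    return allowed
```

Denying by default (an unknown role gets an empty permission set) means that a gap in the table results in a refused request rather than accidental exposure.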

3. Protecting data during transfer

Another weakness is data in transit. Just as websites need to use SSL/TLS to protect payment details during online purchases, businesses need to implement a secure, encrypted and authenticated channel between a user’s device and the data that is being requested. It is important to make sure that the data remains encrypted while it is being transferred so that if it is intercepted en route, it cannot be read. A key factor in protecting data in transit is your choice of firewall. At the same time, you should also consider using a VPN.
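For applications that open their own connections, an encrypted and authenticated channel might be set up along the following lines. This is a minimal sketch using Python's standard `ssl` module; the function name `make_client_context` and the choice of TLS 1.2 as the minimum version are assumptions made for illustration, not recommendations from any particular provider.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that verifies the server's identity."""
    # The default context already requires a valid certificate and
    # checks that it matches the hostname being connected to.
    ctx = ssl.create_default_context()
    # Assumption for this sketch: refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The context would then be used to wrap a socket, for example with `ctx.wrap_socket(sock, server_hostname=host)`, so that data is encrypted before it leaves the device.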

4. Checking data as it arrives

One often overlooked area of security is incoming data. Businesses need to know that when any data arrives, it is what it purports to be. You need to ensure that it is authentic and that it hasn’t been maliciously modified en route. Putting measures in place to guarantee data integrity is important to negate the risk of infection or data breach. This includes email, where phishing attacks are a major problem: fraudulent messages fool employees into thinking they are the genuine article, so that when the messages are opened or their links are clicked, the company’s security is compromised.
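One common way to check that incoming data is authentic and unmodified is a keyed hash (HMAC), which the sender attaches to the data and the receiver recomputes. The sketch below assumes that both parties already share a secret key through some secure channel; the function names `sign` and `is_authentic` are hypothetical.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for the payload (done by the sender)."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_authentic(payload: bytes, key: bytes, received_tag: str) -> bool:
    """Recompute the tag on arrival and compare it with the one received."""
    expected = sign(payload, key)
    # compare_digest takes the same time whether or not the tags match,
    # which avoids leaking information through timing differences.
    return hmac.compare_digest(expected, received_tag)
```

If the payload has been altered in transit, or was produced by someone without the key, the recomputed tag will not match and the data can be rejected before it is processed.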

5. Secure backups

In the event of a disaster, a data backup can be the only thing that will get your company up and running quickly enough to stop it going out of business. Remote, secure backups are critical for disaster recovery operations and should be a key element of any business’s data management strategy.

To protect yourself more thoroughly in the cloud, it is best not to store your backup data in the same place as your active data. If they are kept together and a hacker gets access to one, they’ll also have access to the other. Keeping them in separate accounts creates another layer of security. To do this, simply create a separate backup account with your provider. Ensure that backups are scheduled as frequently as is needed.
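How often is "as frequently as is needed" depends on how much recent data you can afford to lose. A simple monitoring check, sketched below, flags when the most recent backup is older than that tolerance. The function name `backup_is_overdue` and the 24-hour figure in the usage note are assumptions for illustration only.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def backup_is_overdue(
    last_backup: datetime,
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> bool:
    """Return True if the latest backup is older than the allowed maximum age."""
    # Default to the current UTC time; callers can pass `now` for testing.
    current = now if now is not None else datetime.now(timezone.utc)
    return current - last_backup > max_age
```

For example, calling `backup_is_overdue(last_backup, timedelta(hours=24))` with a timezone-aware `last_backup` would report whether more than a day has passed since the last successful backup.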

Conclusion

With businesses becoming increasingly reliant on data to carry out their day-to-day operations and build for long-term success, it is crucial that data is managed effectively. In this post, we’ve looked at the five key areas for data management in the cloud: storing unused data, controlling access, protecting data during transfer, checking incoming data and creating backups. Hopefully, the points we’ve raised will help you manage your cloud data more effectively and securely.
