Irrespective of which industry you look at, you will find entrepreneurs hustling to kickstart digital transformation efforts that have been sitting in the background for several years. While it is relatively easy to alter your digital offering according to customers’ needs, things become more difficult when you start planning digital transformation for the business itself. That is a difficulty mobile applications can solve.

There are two prime elements that businesses need to focus on when planning to digitally transform their workflow and workforce: adaptability and portability. By bringing their processes and communications onto mobile apps, they can hit both targets in one go.

Here are some statistics that show why enterprises need to include mobile applications in their digital transformation strategy:

Although the above graph should be reason enough to take mobile apps seriously, there are some other numbers as well.

- 57% of digital media usage comes through apps.

- On average, a smartphone user has over 80 apps, of which they use 40 every month.

- 21% of millennials open a mobile application 50+ times every day.

While the statistics establish the growth of mobile apps, what we intend to cover in this article is the pivotal role mobile applications play in digital business transformation. To understand it fully, we first have to look at what digital transformation is and what it entails.

What is digital transformation?

Digital transformation means using digital technologies to fundamentally change how a business operates. It offers businesses a chance to reimagine how they engage with customers, how they create new processes, and ultimately how they deliver value.

The true value of introducing digital transformation in a business lies in making the company more agile, lean, and competitive. Seeing this long-term commitment through results in several benefits.

Benefits of digital transformation for a business

- Greater efficiency – leveraging new technologies to automate processes leads to greater efficiency, which in turn lowers workforce requirements and saves costs.

- Better decision making – through digitalized information, businesses can tap into the insights present in their data. This, in turn, helps management make informed decisions on the basis of quality intelligence.

- Greater reach – digitalization gives you an omni-channel presence, which enables your customers to access your services or products from across the globe.

- Intuitive customer experience – digital transformation lets you use data to understand your customers better, so you can learn their needs and deliver a personalized experience.

Merging mobile app capabilities with digital transformation outcomes

Mobile applications can play a role in all of the following areas, which are also often the key areas where an enterprise faces digital transformation challenges.

- Technology integration

- Better customer experience

- Improved operations

- Changed organizational structure

When you partner with a digital transformation consulting firm that has expertise in enterprise app development, they work on all of the areas mentioned above, in addition to shaping their digital transformation roadmap around technology, process, and people.

Beyond this seamless fit with an enterprise’s digital transformation strategy, there are a number of reasons behind the growing need to adopt digital transformation across sectors. These reasons encompass, and extend beyond, the reasons to invest in enterprise mobility solutions.

Together, these reasons make mobility a prime solution offering in the US digital transformation market.

How are mobile apps playing a role in advancing businesses’ internal digital transformation efforts?

1. By utilizing AI in mobile apps

The benefits of using AI to improve customer experience are uncontested. Through digital transformation, businesses have started using AI to develop intuitive mobile apps with technologies like natural language processing, natural language generation, speech recognition, chatbots, and biometrics.

AI doesn’t just help with process automation and predictive, preventative analysis; it also helps serve customers the way they want to be served.

2. An onset of IoT mobile apps

The time when IoT was used only for displaying products and sharing information is passing. The use cases of mobile apps in the IoT domain are constantly expanding.

Enterprises are using IoT mobile apps to operate smart equipment in their offices and to make their supply chains efficient and transparent. While still a new entrant in the enterprise sector, IoT mobile apps are finding ways to strengthen their position in the business world.

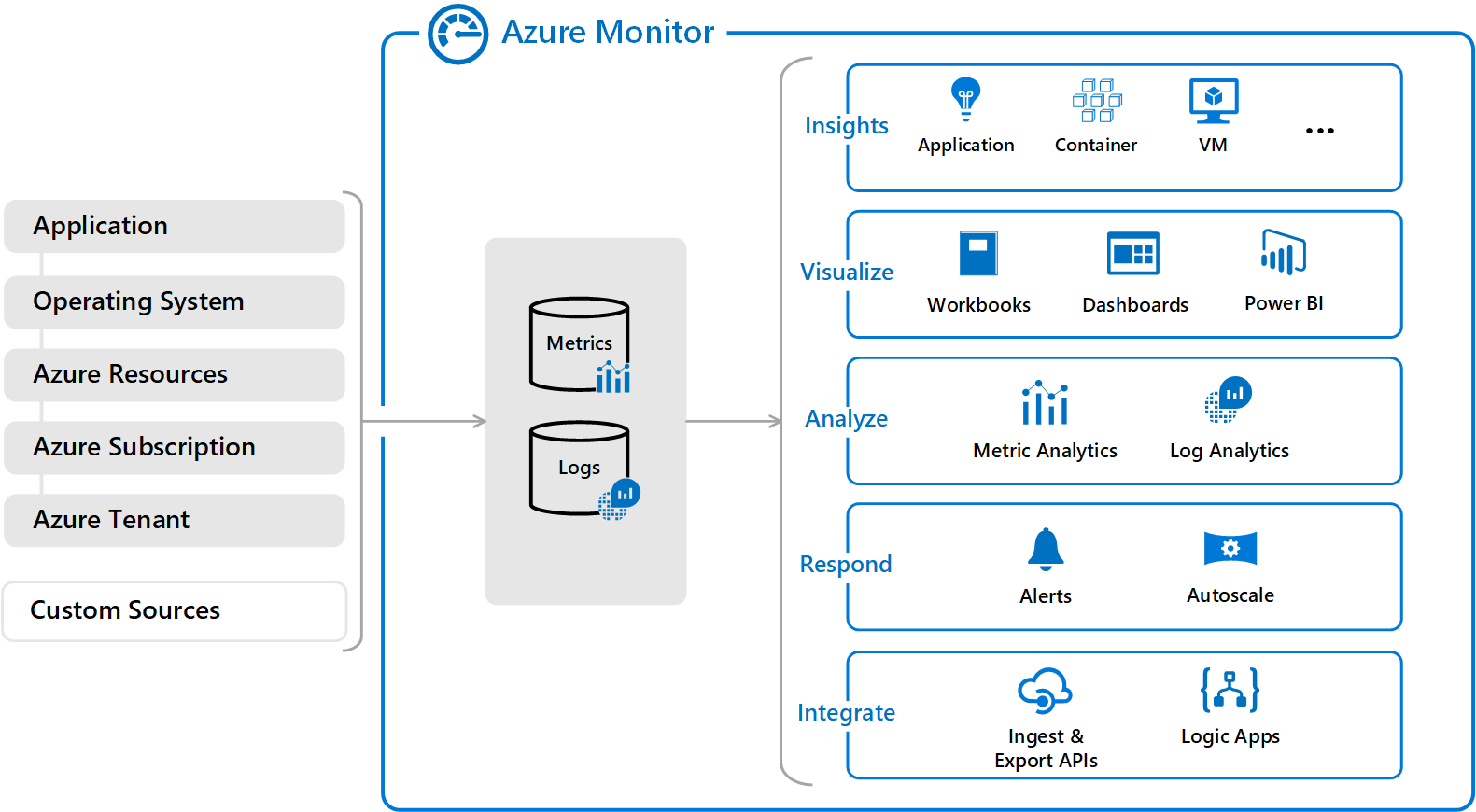

3. Making informed decisions via real-time analytics

In the current business world, access to real-time analytics can give you a strong competitive advantage. Mobile applications are a great way for businesses to collect users’ data and engage with them through marketing messages designed around analytics of their app journey.

You can also use real-time analytics to see how your teams are performing, analyze their productivity, and get a first-hand view of the problems they face in performing a task and how those problems affect overall business value.

4. Greater portability

Portability in an enterprise ecosystem enables employees to work at their convenience. While it shows less impact in the short term, in the long run it plays a huge role in how productive a team is.

By giving employees the freedom to work at the time and location of their choice, you give them space to fuel their creativity and, in turn, their productivity. In our own case, using software that enabled employees to work on their own terms resulted in more business expansion ideas and an increase in the overall productivity of the workforce.

Tips to consider when making mobile apps a part of the digital transformation strategy

![Part 2: Digital Transformation in Manufacturing: Defining the Digital Transformation Strategy and The Challenges Ahead, by PlumLogix (Salesforce Partner)](https://miro.medium.com/max/718/1*9tUukbhz-A85Omxv0NbZFg.jpeg)

If, at this stage, you are convinced that mobile applications are a key part of digital transformation efforts, here are some tips that can help you increase the ROI on your enterprise app:

Adopt a mobile-first approach – the key factor that sets winning enterprise apps apart is that their makers don’t treat apps as an extension of their websites. Their software development process is built around mobile from the start, which in turn shapes their entire design, development, and testing processes.

Identify the scope of mobility – the next tip that digital transformation consulting firms would give you is to analyze your operations and workflows to understand which teams, departments, or functions would benefit from mobility the most. Rather than reinventing a process that already works well enough, look for areas that can be streamlined, automated, or made more valuable through mobility.

Outsource digital transformation efforts – when we were preparing our article, An Entrepreneur’s Guide on Outsourcing Digital Transformation, we looked into several benefits of outsourcing digitalization to a digital transformation strategy consulting agency. The prime benefit was saving businesses the time and effort that go into solving challenges such as the absence of a digital skillset, limitations of the agile transformation process, or the inability to let go of legacy systems.