Technology as an enabler for innovation and process improvement has become the catchword for most companies. Whether it’s artificial intelligence and machine learning, gaining insights from data through better analytics capabilities, or the ability to transfer data and knowledge to the cloud, life sciences companies are looking to achieve greater efficiencies and business effectiveness.

Indeed, that was the theme of my presentation at the AWS re:Invent conference: the ability to innovate faster to bring new therapies to market, and how an as-a-service digital platform enables this. For example, one company facing an increase in global activity needed help accommodating that growth without compromising its operating standards. Rapid migration to an as-a-service digital platform led to a 23 percent reduction in its on-premises systems.

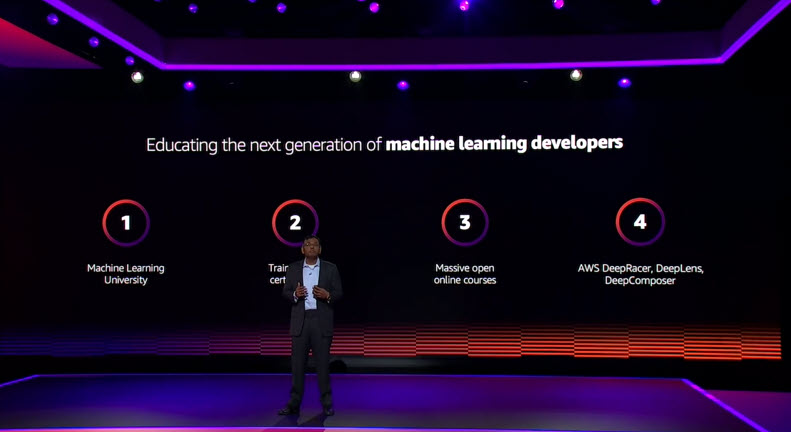

This was my first re:Invent, and it was a real eye-opener to attend such a large conference. The week-long event, held in November 2018, brought together nearly 55,000 people across several venues in Las Vegas to share the latest developments, trends, and experiences of Amazon Web Services (AWS), its partners and its clients.

The conference is intended to be educational, giving attendees insights into technology breakthroughs and developments, and how these are being put into use. Many different industries take part, including life sciences and healthcare, which is where my expertise lies.

This slickly organized, high-energy conference offered a massive amount of information shared across numerous sessions, but with a number of overarching themes. These included artificial intelligence, machine learning and analytics; serverless environments; and security, to mention just a few. The main objective of the meeting was to help companies get the right tool for the job and to highlight several new features.

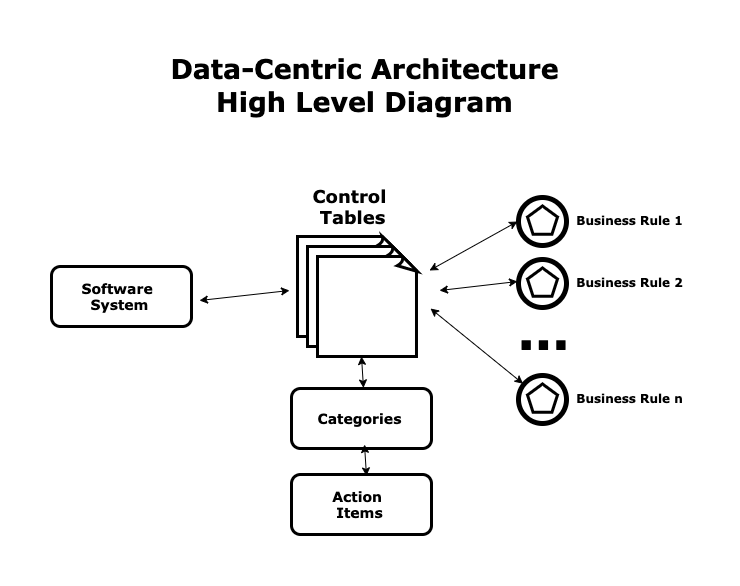

During the week, AWS also rolled out new functionalities designed to help organizations manage their technology, information and businesses more seamlessly in an increasingly data-rich world. For the life sciences and healthcare industry — providers, payers and life sciences companies — a priority is being able to gain insights based on actual data so as to make decisions quickly.

That has been difficult to do in the past because data has existed in silos across the organization. But when you start to connect all the data, it’s clear that a massive amount of knowledge can be leveraged. And that’s critical in an age where precision medicine and specialist drugs have replaced blockbusters.

A growing number of life sciences companies recognize that to connect all this data (across the organization, with partners, and with clients) they need to move to the cloud. As a result, the cloud, and in particular major services such as AWS, is becoming mainstream. There’s a growing need for platforms that let companies move to cloud services efficiently and effectively without disrupting the business, while still making use of the deeper functionality a cloud service can provide.

Putting tools in the hands of users

One such functionality that AWS announced at the conference is Amazon Textract, which automatically extracts text and data from documents and forms. Companies can use that information in a variety of ways, such as running smart searches or maintaining compliance in document archives. Many documents contain data that can’t easily be extracted without manual intervention, and given the massive amount of work that would involve, many companies don’t bother. Amazon Textract goes beyond simple optical character recognition (OCR) to also identify the contents of fields in forms and information stored in tables.
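To make the forms-and-tables point concrete, here is a minimal sketch in Python, assuming the boto3 SDK and configured AWS credentials. It calls Textract's `analyze_document` with the `FORMS` and `TABLES` feature types, then walks the returned blocks to pair each form key with its value. The helper names `analyze_form` and `extract_form_fields` are hypothetical, and a production pipeline would also need to handle tables, check boxes and confidence scores.

```python
"""Sketch: pull key/value pairs out of an Amazon Textract AnalyzeDocument response."""


def analyze_form(path):
    """Call Textract on a local document image (requires boto3 and AWS credentials)."""
    import boto3  # imported here so the parsing helper below works without boto3

    client = boto3.client("textract")
    with open(path, "rb") as f:
        return client.analyze_document(
            Document={"Bytes": f.read()},
            FeatureTypes=["FORMS", "TABLES"],  # ask for form fields and tables, not just raw text
        )


def extract_form_fields(response):
    """Return {key text: value text} built from the KEY_VALUE_SET blocks in a response."""
    blocks = {b["Id"]: b for b in response.get("Blocks", [])}

    def text_of(block):
        # A block's text is the concatenation of its WORD children.
        words = []
        for rel in block.get("Relationships", []):
            if rel["Type"] == "CHILD":
                for child_id in rel["Ids"]:
                    child = blocks[child_id]
                    if child["BlockType"] == "WORD":
                        words.append(child["Text"])
        return " ".join(words)

    fields = {}
    for block in blocks.values():
        # KEY blocks point to their VALUE block through a "VALUE" relationship.
        if block["BlockType"] == "KEY_VALUE_SET" and "KEY" in block.get("EntityTypes", []):
            values = []
            for rel in block.get("Relationships", []):
                if rel["Type"] == "VALUE":
                    values.extend(text_of(blocks[v]) for v in rel["Ids"])
            fields[text_of(block)] = " ".join(values)
    return fields
```

Usage would look like `extract_form_fields(analyze_form("consent_form.png"))`, where the file name is only an example. Keeping the parsing separate from the API call means it can be tested against saved responses without touching AWS.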

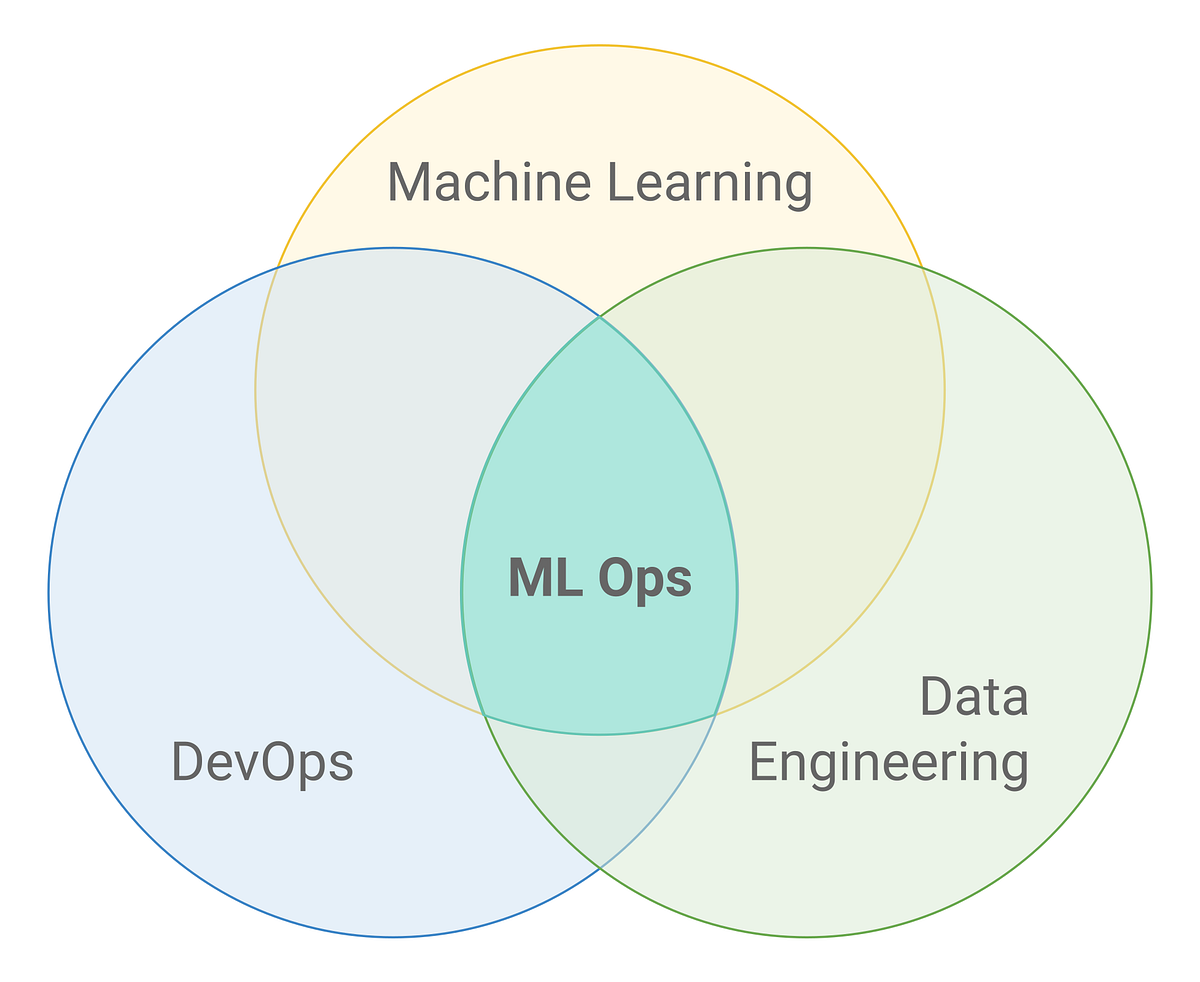

Another key capability of advanced cloud platforms is the ability to carry out advanced analytics using machine learning. While many large pharma companies have probably been doing this for a while, the resources needed to invest in that level of analytics have been beyond the reach of most smaller companies. However, leveraging an observational platform and using AWS to provide it as a service puts these capabilities within the reach of life sciences companies of all sizes.

Having access to large amounts of data and advanced analytics enabled by machine learning allows companies to gain better insights across a wide network. For example, sponsors working with multiple contract research organizations (CROs) want a single view of performance at the various sites and across the different CROs. At the moment, that view can be disjointed, but by leveraging a portal through an observational platform, it’s possible to see how sites and CROs are performing: Are they hitting the cohort requirements set? Are they on track to meet objectives? Or is there an issue that needs to be managed?
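The kind of consolidated sponsor view described above can be sketched in a few lines of Python. Everything here is hypothetical: the `EnrollmentRecord` fields, the sample CROs and sites, and the simple rule that a site or CRO counts as on track once enrollment reaches 80 percent of its cohort target. A real portal would more likely compare enrollment against time-based milestones from the study plan.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class EnrollmentRecord:
    cro: str       # contract research organization running the site
    site: str      # trial site identifier
    enrolled: int  # patients enrolled to date
    target: int    # cohort requirement for this site


def cro_summary(records, on_track_ratio=0.8):
    """Roll site-level enrollment up to one row per CRO."""
    totals = defaultdict(lambda: [0, 0])  # cro -> [enrolled, target]
    for r in records:
        totals[r.cro][0] += r.enrolled
        totals[r.cro][1] += r.target
    summary = {}
    for cro, (enrolled, target) in totals.items():
        ratio = enrolled / target if target else 0.0
        summary[cro] = {
            "enrolled": enrolled,
            "target": target,
            "ratio": ratio,
            "on_track": ratio >= on_track_ratio,  # hypothetical threshold rule
        }
    return summary


def sites_needing_attention(records, on_track_ratio=0.8):
    """List (cro, site, ratio) for every site below the hypothetical threshold."""
    flagged = []
    for r in records:
        ratio = r.enrolled / r.target if r.target else 0.0
        if ratio < on_track_ratio:
            flagged.append((r.cro, r.site, ratio))
    return flagged


# Hypothetical sample data for illustration only.
records = [
    EnrollmentRecord("CRO-A", "Site 1", 42, 50),
    EnrollmentRecord("CRO-A", "Site 2", 45, 50),
    EnrollmentRecord("CRO-B", "Site 3", 10, 40),
]
summary = cro_summary(records)
flagged = sites_needing_attention(records)
```

With this sample, CRO-A is on track at 87 of 100 patients, while CRO-B and its Site 3 are flagged. Keeping the CRO roll-up and the site list as separate functions mirrors the two questions a sponsor asks: which partner is behind, and where exactly.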

Security was another important theme at the conference and one that raised many questions. Most companies know, in theory, that the cloud is secure, but they’re less certain whether what they have in place gives them the right level of security for their business. That can differ depending on what you put in the cloud. In life sciences, if you are putting research and development systems into the cloud, it’s vital that your IT is secure. But with the right combination of cloud capabilities and security functionality, companies can have a more secure environment in the cloud than they would on-premises.

The conference highlighted multiple new functions and services that help enterprises gain better value from moving to the cloud. These include AWS Control Tower, which allows you to automate the setup of a well-architected, multi-account AWS environment across an organization. Storage was also on the agenda, with discussions about choosing the right options for the business. Historically, companies bought storage and kept it on-site. But these storage systems are expensive to replace, and it’s questionable whether they are the best way forward. During the conference, AWS announced Amazon S3 Glacier Deep Archive, a new storage class that allows companies to store seldom-used data much more cost-effectively than legacy tape systems, at just $1.01/TB per month. Consider the large amount of historical data that a legacy product will have. In all likelihood, that data won’t be needed very often, but for companies selling or acquiring a product or company, it may be important to have access to it.
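To put the quoted price in perspective for a legacy product's historical data, here is a back-of-the-envelope calculation in Python. It assumes the $1.01/TB per month figure above holds for the whole period and covers storage only; retrieval, request and minimum-storage-duration charges are extra, and current regional pricing should be checked with AWS. The 100 TB volume and the function name `archive_storage_cost` are purely illustrative.

```python
# Price quoted in the article; actual S3 Glacier Deep Archive pricing varies by region and over time.
PRICE_PER_TB_MONTH = 1.01


def archive_storage_cost(terabytes, months=12, price_per_tb_month=PRICE_PER_TB_MONTH):
    """Storage-only cost in USD; excludes retrieval, request and early-deletion charges."""
    if terabytes < 0 or months < 0:
        raise ValueError("terabytes and months must be non-negative")
    return terabytes * months * price_per_tb_month


# Hypothetical example: 100 TB of legacy product data kept for one year.
yearly = archive_storage_cost(100)
print(f"${yearly:,.2f}")
```

The price is a parameter rather than a hard-coded constant inside the function so the same sketch can be rerun as pricing changes or to compare against the cost of maintaining an on-site tape system.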

One of the interesting things I took from the week away, apart from a Fitbit that nearly exploded with the number of steps I took each day, was how the focus on cloud has shifted. The discussion has moved on from “Is the cloud the right thing for my business?” to “How do I get more from the cloud, and who can help me get there faster?” Conversations in queues for events and shuttle buses were largely about what each organization is doing and what the next step in its digital journey would be. This was echoed at the Anteelo booth, where many people wanted more information on how to accelerate their journey. One of the greatest concerns was the lack of internal expertise at many companies, and that is where a partner can help them bring real value and innovation into the business faster.
