The scale of big data, the data deluge, the 4Vs of data, and everything in between… We've all heard countless words attached to "data". Reports and articles have taken the vocabulary and interpretation of data to a whole new level. As a result, the marketplace is split into exaggerators, implementers, and disruptors. Which one are you?

Picture this! A telecom giant decides to invest in opening 200 physical stores in 2017. How do they go about solving this problem? How do they decide on the optimal locations? Which neighbourhoods will draw the most footfall and conversions?

Then there is a leading CPG player trying to figure out where to deploy its ice cream trikes. Mind you, we are talking about the impulse purchase of perishable goods. How do they decide how many trikes to deploy, where to deploy them, and which flavours will sell best in each region?

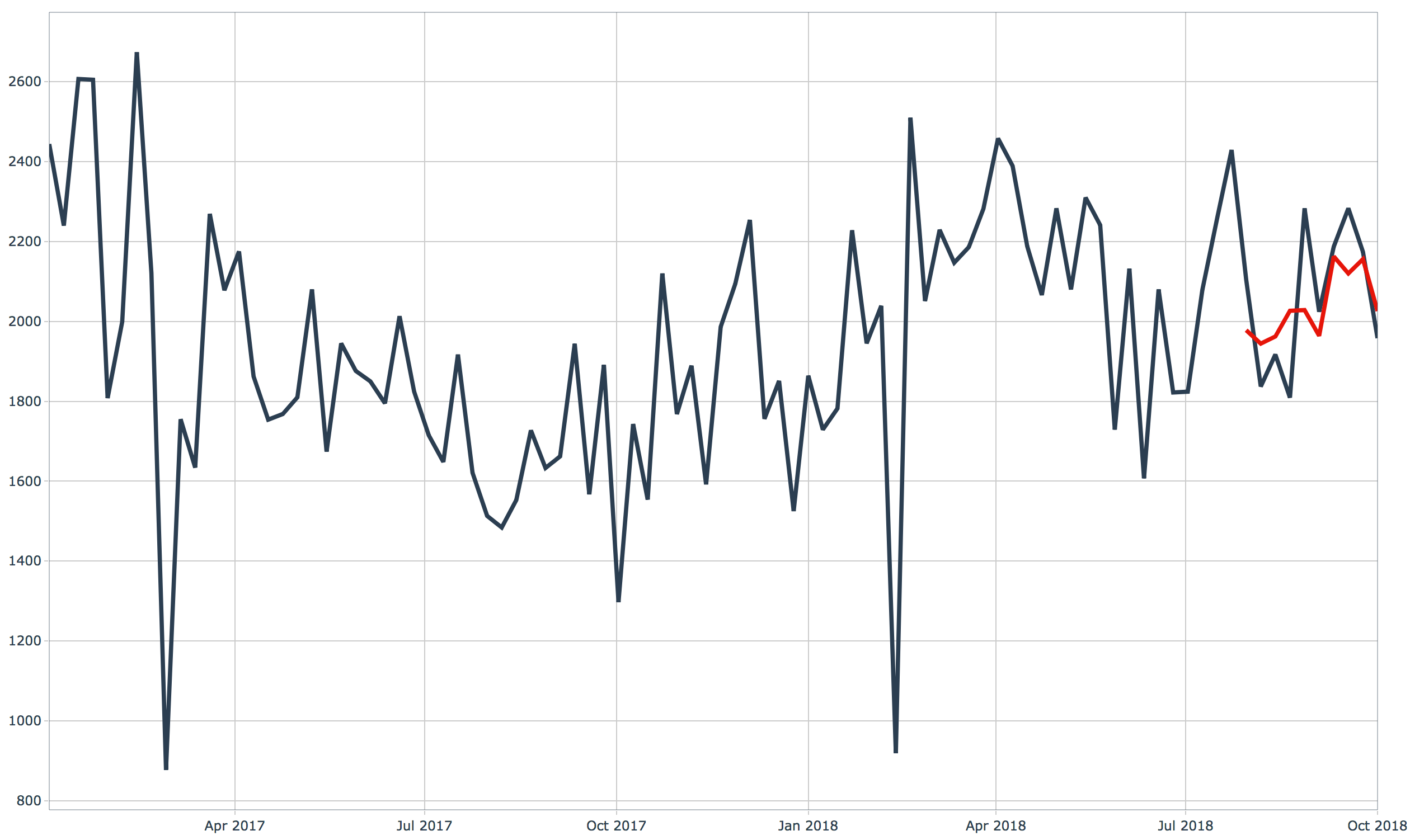

In both examples, if the enterprises were to base their decisions only on the analytics data available to them (read: owned data), they would repeat the same mistake day in and day out: using past data to make present decisions and future investments. The consequences stare you in the face. Your view of true market potential remains skewed, your understanding of customer sentiment is obsolete, and your ROI will seldom go beyond your baseline estimates. That leaves you vulnerable to competition. Calculated risks become too calculated to change the game.

Disruption today requires enterprises to undergo a paradigm shift: from owning data to seeking it. This transition calls for a conscious set-up:

Power of unconstrained thinking

As adults, we are usually too constrained by what we already know. We get the jitters when it comes to stepping out of our comfort zones, and that keeps us from venturing into the wild. Yet the real learning, whether in life, analytics, or any other field, happens in the wild. To capitalize on this, individuals and enterprises need to cultivate an almost childlike, inhibition-free culture of 'unconstrained thinking'.

Each time you are confronted with an unconventional business problem, pause and ask yourself: If I had unconstrained access to all the data in the world, how would my solution design change? What data (imagined or real) would I need to execute the new design?

Power of approximate reality

There is a lot we don't know and will never know with 100% accuracy. However, this has never stopped doers from disrupting the world. Unconstrained thinking needs to meet approximate reality to yield tangible outcomes.

The question to ask here is: What are the nearest available approximations of all the data streams I dreamt of during my unconstrained ideation?

You will be amazed at the outcome. For example, Yelp data can be used to gauge the hyperlocal affluence of a catchment population (resident as well as transient), and imagery captured from several thousand feet in the air can be analysed to estimate footfall at competitor stores.

This is the power of combining unconstrained thinking and approximate reality. The possibilities are limitless.

Filter to differentiate signal from noise – Data Triangulation

Remember, you are no longer only as smart as the data you own, but as smart as the data you earn and seek. At a time when data is abundant and constantly streaming, the bigger challenge in seeking data is identifying "data of relevance". The ability to separate signal from noise is critical here. In the absence of on-ground validation, triangulation, that is, cross-checking an estimate against several independent sources, is the way to go.

The data 'purists' among us may question this approach. But welcome to the world of data you don't own. Here, some conventions will need to be broken and some mindsets shifted. We at Anteelo have found data triangulation to be one of the most reliable ways to validate the veracity of unfamiliar and unvetted data sources.
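To make the idea concrete, here is a minimal Python sketch of triangulation, assuming three independent proxy estimates of weekly footfall at a candidate site. The source names, the figures, and the 25% tolerance are all hypothetical, chosen for illustration rather than taken from any client engagement. The sketch uses the median of all sources as a reference point, flags any source that deviates from it by more than the tolerance, and reports a consensus only when at least two sources agree.

```python
from statistics import median


def triangulate(estimates, tolerance=0.25):
    """Cross-check independent estimates of the same quantity.

    estimates: dict mapping a source name to a positive estimate.
    tolerance: max relative deviation from the median (assumed 25%, illustrative).
    Returns (consensus or None, agreeing_sources, outlier_sources).
    """
    if len(estimates) < 3:
        raise ValueError("triangulation needs at least three independent sources")
    if any(v <= 0 for v in estimates.values()):
        raise ValueError("estimates must be positive")

    reference = median(estimates.values())
    agreeing, outliers = [], []
    for source, value in estimates.items():
        # Relative deviation of this source from the cross-source median
        deviation = abs(value - reference) / reference
        (agreeing if deviation <= tolerance else outliers).append(source)

    # A single surviving source cannot corroborate itself
    if len(agreeing) < 2:
        return None, agreeing, outliers
    consensus = median([estimates[s] for s in agreeing])
    return consensus, agreeing, outliers


if __name__ == "__main__":
    # Hypothetical weekly-footfall proxies for one site; numbers are made up
    weekly_footfall = {
        "satellite_car_counts": 4200,
        "yelp_review_volume": 3900,
        "google_maps_popular_times": 6800,
    }
    consensus, agreeing, outliers = triangulate(weekly_footfall)
    print(consensus, agreeing, outliers)
    # 4050.0 ['satellite_car_counts', 'yelp_review_volume'] ['google_maps_popular_times']
```

Using the median rather than the mean as the reference keeps one wildly off source from pulling the benchmark toward itself, which is exactly what cross-checking is meant to guard against.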

Ability to tame the wild data

Unfortunately, old wine in a new bottle will not taste too good. When you explore data in the wild, beyond the enterprise firewall, conventional wisdom and experience will not suffice. Your data science teams need specialised skills and technical know-how to harness data from unconventional sources. In the two examples above, the telecom giant and the CPG player, our data science team capitalized on freely available hyperlocal data from Google Maps, Yelp, and satellite imagery to build a strong location-optimization solution.

Having worked with multiple clients across industries, we have come to realize the power of this approach, combining owned and sought data, with no compromise on data integrity, security, or governance. After all, game changers and disruptors are seldom followers; they pave their own path and choose to find the needle in the haystack!